Authors: Zhangyu Wang, Lantian Xu, Zhifeng Kong, Weilong Wang, Xuyu Peng, Enyang Zheng

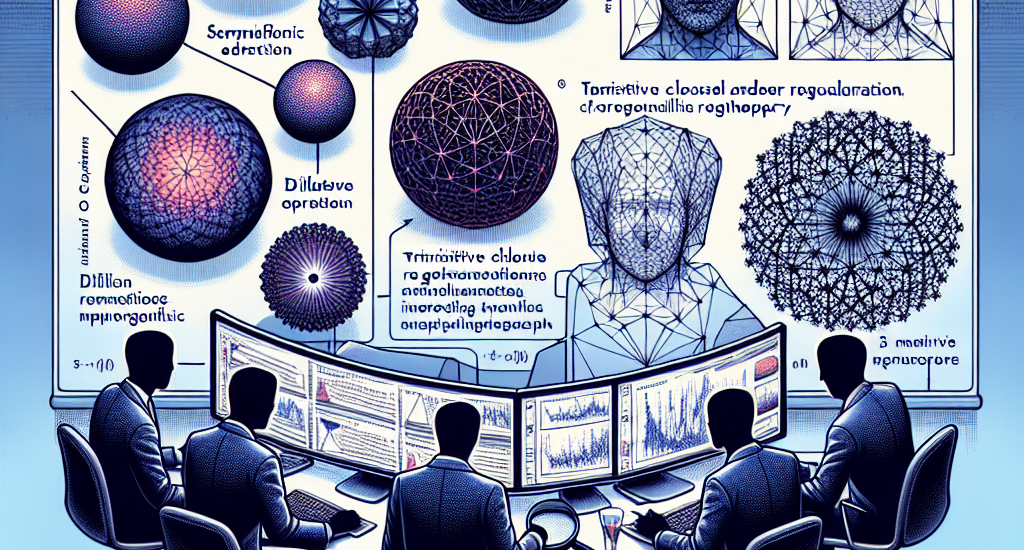

Abstract: Hyperbolic embeddings are a class of representation learning methods that offer competitive performance when data can be abstracted as a tree-like graph. In practice, however, learning hyperbolic embeddings of hierarchical data is difficult because the geometry of hyperbolic space differs from that of Euclidean space. To address these difficulties, we first categorize three kinds of illness that harm the performance of the embeddings. We then develop a geometry-aware algorithm that uses a dilation operation and a transitive closure regularization to tackle these illnesses. We empirically validate these techniques and present a theoretical analysis of the mechanism behind the dilation operation. Experiments on synthetic and real-world datasets show that our algorithm achieves superior performance.

Source: http://arxiv.org/abs/2407.16641v1