Authors: Marius F. R. Juston, William R. Norris, Dustin Nottage, Ahmet Soylemezoglu

Abstract: Deep residual networks (ResNets) have demonstrated outstanding success in

computer vision tasks, attributed to their ability to maintain gradient flow

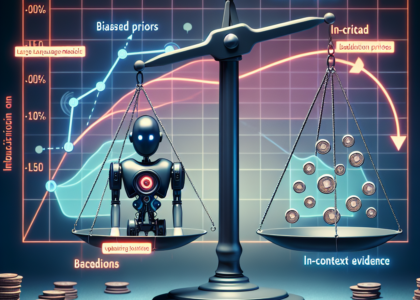

through deep architectures. Simultaneously, controlling the Lipschitz bound in

neural networks has emerged as an essential area of research for enhancing

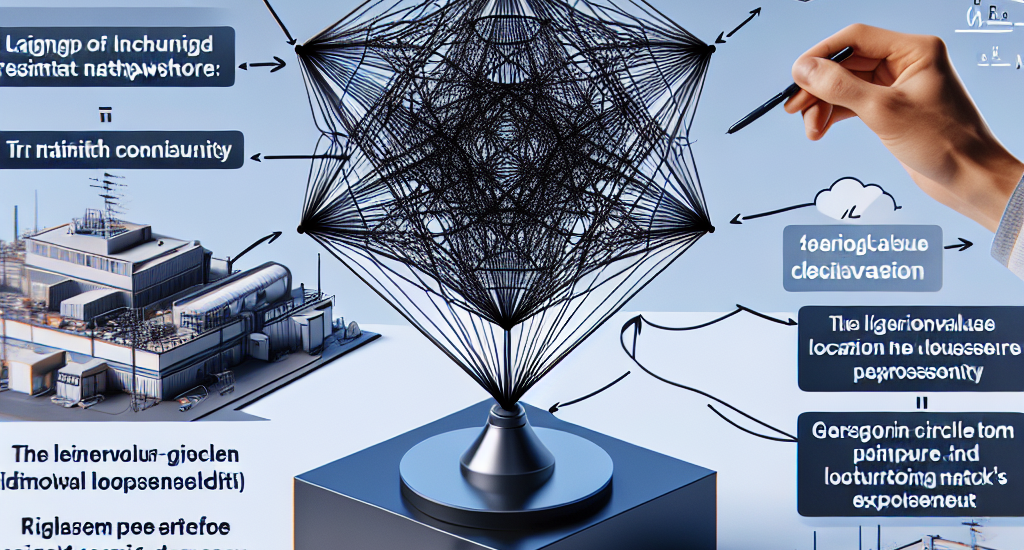

adversarial robustness and network certifiability. This paper uses a rigorous

approach to design $\mathcal{L}$-Lipschitz deep residual networks using a

Linear Matrix Inequality (LMI) framework. The ResNet architecture was

reformulated as a pseudo-tri-diagonal LMI with off-diagonal elements and

derived closed-form constraints on network parameters to ensure

$\mathcal{L}$-Lipschitz continuity. To address the lack of explicit eigenvalue

computations for such matrix structures, the Gershgorin circle theorem was

employed to approximate eigenvalue locations, guaranteeing the LMI’s negative

semi-definiteness. Our contributions include a provable parameterization

methodology for constructing Lipschitz-constrained networks and a compositional

framework for managing recursive systems within hierarchical architectures.

These findings enable robust network designs applicable to adversarial

robustness, certified training, and control systems. However, a limitation was

identified in the Gershgorin-based approximations, which over-constrain the

system, suppressing non-linear dynamics and diminishing the network’s

expressive capacity.
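
The role of the Gershgorin circle theorem, and the conservatism noted as a limitation, can be illustrated with a minimal sketch. It assumes a real symmetric matrix and uses the standard sufficient condition that every Gershgorin disc lies in the closed left half-line. The function names and the two example matrices are hypothetical and are not taken from the paper; they are not the paper's LMI.

```python
import numpy as np


def gershgorin_upper_bound(M):
    """Largest right edge of the Gershgorin discs of a real symmetric matrix.

    Every eigenvalue of M is at most this value.
    """
    M = np.asarray(M, dtype=float)
    centers = np.diag(M)
    # Disc radius for row i: sum of |off-diagonal entries| in that row.
    radii = np.sum(np.abs(M), axis=1) - np.abs(centers)
    return float(np.max(centers + radii))


def is_nsd_by_gershgorin(M, tol=1e-12):
    """Sufficient (not necessary) test for negative semi-definiteness."""
    return gershgorin_upper_bound(M) <= tol


# Illustrative tri-diagonal matrix (hypothetical): every disc lies at or
# below zero, so the Gershgorin test certifies negative semi-definiteness.
A = np.array([[-2.0, 1.0, 0.0],
              [1.0, -3.0, 1.0],
              [0.0, 1.0, -2.0]])

# Illustrative matrix (hypothetical) that is negative definite, yet whose
# first disc crosses zero, so the Gershgorin test cannot certify it.
B = np.array([[-1.0, 1.5],
              [1.5, -4.0]])

for name, M in (("A", A), ("B", B)):
    print(name,
          "certified by Gershgorin:", is_nsd_by_gershgorin(M),
          "| largest eigenvalue:", np.max(np.linalg.eigvalsh(M)))
```

Matrix `B` shows why constraints derived from Gershgorin discs can be stricter than necessary: the matrix satisfies the definiteness requirement, but the disc-based test rejects it, so a parameterization built on that test would exclude it.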

Source: http://arxiv.org/abs/2502.21279v1