Authors: Zhuo Chen, Jiawei Liu, Haotan Liu, Qikai Cheng, Fan Zhang, Wei Lu, Xiaozhong Liu

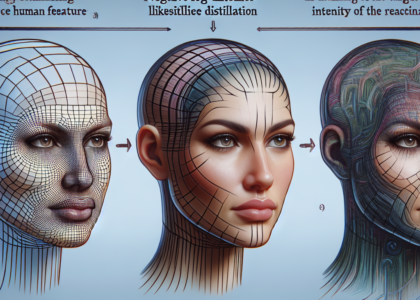

Abstract: Retrieval-Augmented Generation (RAG) is used to address hallucination and the real-time knowledge limitations of large language models, but it also introduces vulnerabilities to retrieval corruption attacks. Existing research mainly explores the unreliability of RAG in white-box settings and closed-domain QA tasks. In this paper, we aim to reveal the vulnerabilities of RAG models when faced with black-box attacks for opinion manipulation. We explore the impact of such attacks on user cognition and decision-making, providing new insights for enhancing the reliability and security of RAG models. We manipulate the ranking results of the retrieval model in RAG with instructions and use these results as data to train a surrogate model. By applying adversarial retrieval attack methods to the surrogate model, we then realize black-box transfer attacks on RAG. Experiments conducted on opinion datasets across multiple topics show that the proposed attack strategy can significantly alter the opinion polarity of the content generated by RAG. This demonstrates the model's vulnerability and, more importantly, reveals the potential negative impact on user cognition and decision-making, making it easier to mislead users into accepting incorrect or biased information.
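
The pipeline outlined in the abstract (observe rankings from a black-box retriever, fit a surrogate on them, attack the surrogate, then transfer the perturbed document to the real system) can be illustrated with a toy sketch. Everything below is an assumption made for illustration and is not the authors' implementation: `black_box_rank` is a hypothetical stand-in for the opaque retriever, the two hand-picked features, the pairwise perceptron, and the greedy token-appending attack are simple substitutes for the learned models and adversarial methods a real study would use, and the documents are invented.

```python
from itertools import combinations

def features(query, doc):
    """Two hand-picked features (an assumption): query-term overlap and length."""
    q, d = set(query.lower().split()), doc.lower().split()
    return [len(q & set(d)), len(d)]

def black_box_rank(query, docs):
    """Hypothetical opaque retriever; the attacker only sees the returned order."""
    def hidden_score(doc):
        overlap, length = features(query, doc)
        return overlap - 0.1 * length
    return sorted(docs, key=hidden_score, reverse=True)

def preference_pairs(ranking):
    """Every (higher-ranked, lower-ranked) pair implied by one observed ranking."""
    return list(combinations(ranking, 2))

def linear_score(w, query, doc):
    return sum(wi * fi for wi, fi in zip(w, features(query, doc)))

def train_surrogate(query, pairs, epochs=100):
    """Pairwise perceptron: update w until each preferred document scores higher."""
    w = [0.0, 0.0]
    for _ in range(epochs):
        mistakes = 0
        for better, worse in pairs:
            diff = [a - b for a, b in zip(features(query, better),
                                          features(query, worse))]
            if sum(wi * di for wi, di in zip(w, diff)) <= 0:
                w = [wi + di for wi, di in zip(w, diff)]
                mistakes += 1
        if mistakes == 0:
            break
    return w

def surrogate_rank(query, docs, w):
    return sorted(docs, key=lambda doc: linear_score(w, query, doc), reverse=True)

def greedy_attack(query, target, w, vocab, budget=2):
    """Append up to `budget` tokens, each chosen to maximise the surrogate score."""
    for _ in range(budget):
        target = max((target + " " + tok for tok in vocab),
                     key=lambda doc: linear_score(w, query, doc))
    return target

# Invented toy corpus; `target` is the opinionated document the attacker promotes.
query = "should schools ban phones"
target = "phones help students learn"
docs = [
    "schools should ban phones in class",
    target,
    "a ban on phones is hard to enforce across many schools",
    "weather report for today",
]

# 1) Observe the black-box ranking, 2) fit the surrogate on implied preferences.
observed = black_box_rank(query, docs)
w = train_surrogate(query, preference_pairs(observed))

# 3) Attack the surrogate, 4) transfer the perturbed document to the black box.
vocab = query.split() + ["today"]  # assumed attacker vocabulary
adversarial = greedy_attack(query, target, w, vocab)
attacked_docs = [adversarial if d == target else d for d in docs]

print("rank before:", observed.index(target) + 1)
print("rank after: ", black_box_rank(query, attacked_docs).index(adversarial) + 1)
```

In this toy setting the surrogate never sees the hidden scoring function, only the order it produces, yet the perturbation chosen against the surrogate also moves the target document up in the black-box ranking. A retrieved document that ranks higher is more likely to reach the generator's context, which is the route by which the abstract's attack shifts the opinion polarity of generated content.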

Source: http://arxiv.org/abs/2407.13757v1