Authors: Huawei Lin, Yingjie Lao, Tong Geng, Tan Yu, Weijie Zhao

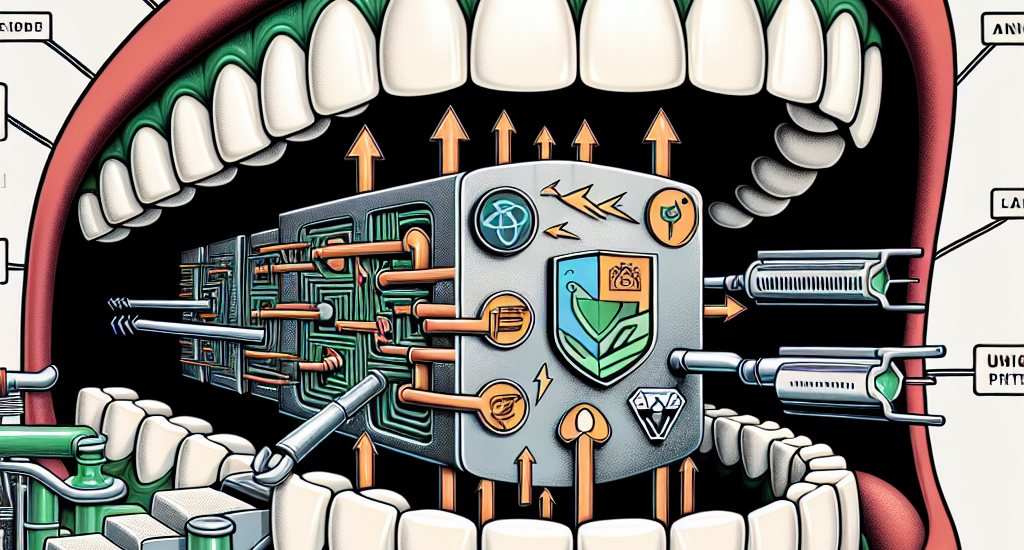

Abstract: Large Language Models (LLMs) are vulnerable to attacks such as prompt injection, backdoor attacks, and adversarial attacks, which manipulate prompts or models to generate harmful outputs. In this paper, departing from traditional deep learning attack paradigms, we explore the intrinsic relationship among these attacks and collectively term them Prompt Trigger Attacks (PTA). This raises a key question: Can we determine whether a prompt is benign or poisoned? To address this, we propose UniGuardian, the first unified defense mechanism designed to detect prompt injection, backdoor attacks, and adversarial attacks in LLMs. Additionally, we introduce a single-forward strategy to optimize the detection pipeline, enabling simultaneous attack detection and text generation within a single forward pass. Our experiments confirm that UniGuardian accurately and efficiently identifies malicious prompts in LLMs.

Source: http://arxiv.org/abs/2502.13141v1