Authors: Zhengjian Kang, Ye Zhang, Xiaoyu Deng, Xintao Li, Yongzhe Zhang

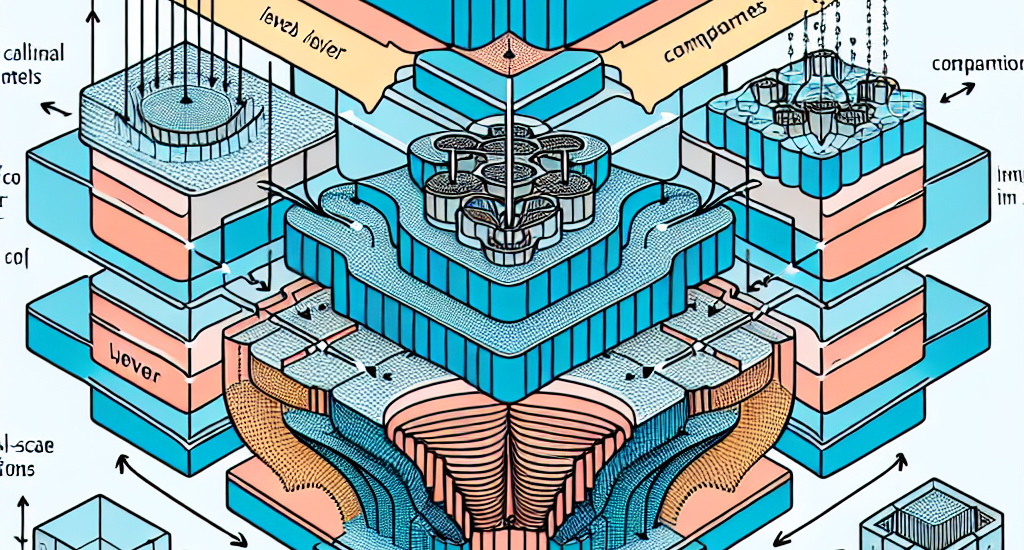

Abstract: This paper presents LP-DETR (Layer-wise Progressive DETR), a novel approach that enhances DETR-based object detection through multi-scale relation modeling. Our method introduces learnable spatial relationships between object queries through a relation-aware self-attention mechanism, which adaptively learns to balance different scales of relations (local, medium, and global) across decoder layers. This progressive design enables the model to capture spatial dependencies that evolve throughout the detection pipeline. Extensive experiments on the COCO 2017 dataset demonstrate that our method improves both convergence speed and detection accuracy compared to the standard self-attention module. The proposed method achieves competitive results, reaching 52.3% AP with 12 training epochs and 52.5% AP with 24 training epochs using a ResNet-50 backbone, and further improving to 58.0% AP with a Swin-L backbone. Furthermore, our analysis reveals an interesting pattern: the model naturally learns to prioritize local spatial relations in early decoder layers while gradually shifting attention to broader contexts in deeper layers, providing valuable insights for future research in object detection.
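The abstract does not specify how the relations are encoded or how the scales are mixed, so the following is only a minimal, hypothetical sketch of the general idea of relation-aware self-attention between object queries. It assumes that each scale is a Gaussian kernel over the normalized distance between box centers, with illustrative bandwidths `SCALE_SIGMAS` (local, medium, global), that each decoder layer owns learnable logits `scale_logits` turned into mixing weights by a softmax, and that the mixed relation term is added as a bias to the scaled dot-product attention logits. All names and values are illustrative assumptions, not the authors' implementation, and plain Python lists stand in for the tensor code a real model would use.

```python
import math
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (cx, cy, w, h), normalized to [0, 1]

# Hypothetical Gaussian bandwidths for the local / medium / global relation scales.
SCALE_SIGMAS = (0.05, 0.2, 1.0)


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def relation_bias(boxes: Sequence[Box], scale_logits: Sequence[float]) -> List[List[float]]:
    """Pairwise attention bias that mixes three distance scales.

    scale_logits stands in for hypothetical per-layer learnable parameters;
    the softmax turns them into mixing weights that sum to 1.
    """
    weights = softmax(scale_logits)
    n = len(boxes)
    bias = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            dx = boxes[i][0] - boxes[j][0]
            dy = boxes[i][1] - boxes[j][1]
            dist_sq = dx * dx + dy * dy
            # Gaussian log-kernel per scale: 0 for coincident centers and
            # increasingly negative with distance (much faster for small sigma).
            bias[i][j] = sum(
                w * (-dist_sq / (2.0 * s * s)) for w, s in zip(weights, SCALE_SIGMAS)
            )
    return bias


def relation_aware_attention(queries, keys, values, boxes, scale_logits):
    """Scaled dot-product self-attention with an additive relation bias."""
    d = len(queries[0])
    bias = relation_bias(boxes, scale_logits)
    outputs, attn_rows = [], []
    for i, q in enumerate(queries):
        logits = [
            sum(a * b for a, b in zip(q, k)) / math.sqrt(d) + bias[i][j]
            for j, k in enumerate(keys)
        ]
        attn = softmax(logits)
        attn_rows.append(attn)
        outputs.append(
            [sum(attn[j] * values[j][c] for j in range(len(values)))
             for c in range(len(values[0]))]
        )
    return outputs, attn_rows


# Usage: two nearby queries and one distant query. Content features are zero
# so that the attention pattern comes only from the relation bias.
boxes = [(0.1, 0.1, 0.1, 0.1), (0.15, 0.1, 0.1, 0.1), (0.9, 0.9, 0.1, 0.1)]
feats = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

# Hypothetical layer weightings: one favoring the local scale, one the global scale.
out_local, attn_local = relation_aware_attention(feats, feats, values, boxes, (4.0, 0.0, 0.0))
out_global, attn_global = relation_aware_attention(feats, feats, values, boxes, (0.0, 0.0, 4.0))
print([round(a, 4) for a in attn_local[0]])
print([round(a, 4) for a in attn_global[0]])
```

With mixing weights skewed toward the local scale (the pattern the abstract reports for early decoder layers), query 0 effectively ignores the distant query 2 and attends mostly to itself and its neighbor; with weights skewed toward the global scale (deeper layers), the distance penalty flattens and attention spreads more evenly. Adding the relation term as a bias, rather than replacing the content logits, keeps the standard self-attention path intact, which is one plausible way such a module could be dropped into an existing DETR decoder.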

Source: http://arxiv.org/abs/2502.05147v1