Authors: Junseok Park, Hyeonseo Yang, Min Whoo Lee, Won-Seok Choi, Minsu Lee, Byoung-Tak Zhang

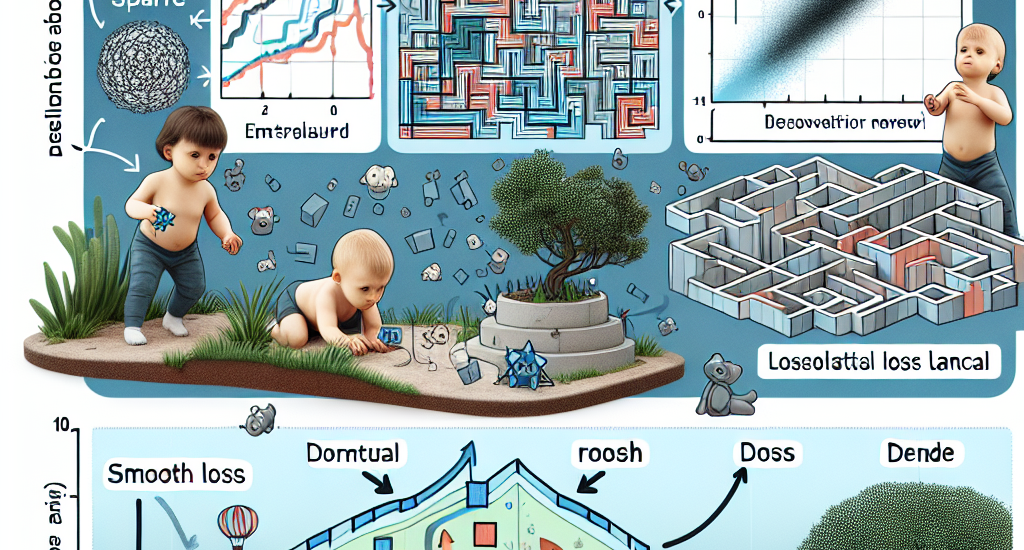

Abstract: Reinforcement learning (RL) agents often face challenges in balancing exploration and exploitation, particularly in environments where sparse or dense rewards bias learning. Biological systems, such as human toddlers, naturally navigate this balance by transitioning from free exploration with sparse rewards to goal-directed behavior guided by increasingly dense rewards.

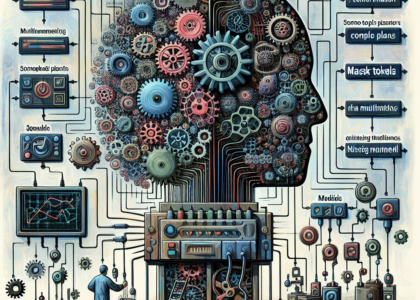

Inspired by this natural progression, we investigate the Toddler-Inspired Reward Transition in goal-oriented RL tasks. Our study focuses on transitioning from sparse to potential-based dense (S2D) rewards while preserving optimal strategies. Through experiments on dynamic robotic arm manipulation and egocentric 3D navigation tasks, we demonstrate that effective S2D reward transitions significantly enhance learning performance and sample efficiency. Additionally, using a Cross-Density Visualizer, we show that S2D transitions smooth the policy loss landscape, resulting in wider minima that improve generalization in RL models. We also reinterpret Tolman’s maze experiments, underscoring the critical role of early free exploratory learning in the context of S2D rewards.
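
To make the idea of a sparse-to-dense transition with a potential-based term concrete, the following is a minimal sketch and not the authors' implementation. It assumes the standard potential-based shaping form F(s, s') = γΦ(s') − Φ(s), which is known to leave optimal policies unchanged. The potential (negative Euclidean distance to the goal), the hard switch at `transition_step`, and the names `potential`, `s2d_reward`, and `goal_radius` are all hypothetical choices made for illustration.

```python
import math


def potential(state, goal):
    """Hypothetical potential: negative Euclidean distance to the goal."""
    return -math.dist(state, goal)


def s2d_reward(state, next_state, goal, step, transition_step,
               gamma=0.99, goal_radius=0.5):
    """Sparse reward before `transition_step`, sparse plus shaping after.

    The hard switch at `transition_step` is an assumption of this sketch;
    the abstract does not specify how or when the transition happens.
    """
    # Sparse part: reward only when the next state is within the goal radius.
    sparse = 1.0 if math.dist(next_state, goal) <= goal_radius else 0.0
    if step < transition_step:
        return sparse
    # Dense part: potential-based shaping term gamma * phi(s') - phi(s).
    shaping = gamma * potential(next_state, goal) - potential(state, goal)
    return sparse + shaping
```

With this sketch, a step that moves the agent closer to the goal earns no reward during the sparse phase and a positive shaping reward once the dense phase begins, while the sparse goal reward is present in both phases.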

Source: http://arxiv.org/abs/2501.17842v1