Authors: Xi Wang, Robin Courant, Marc Christie, Vicky Kalogeiton

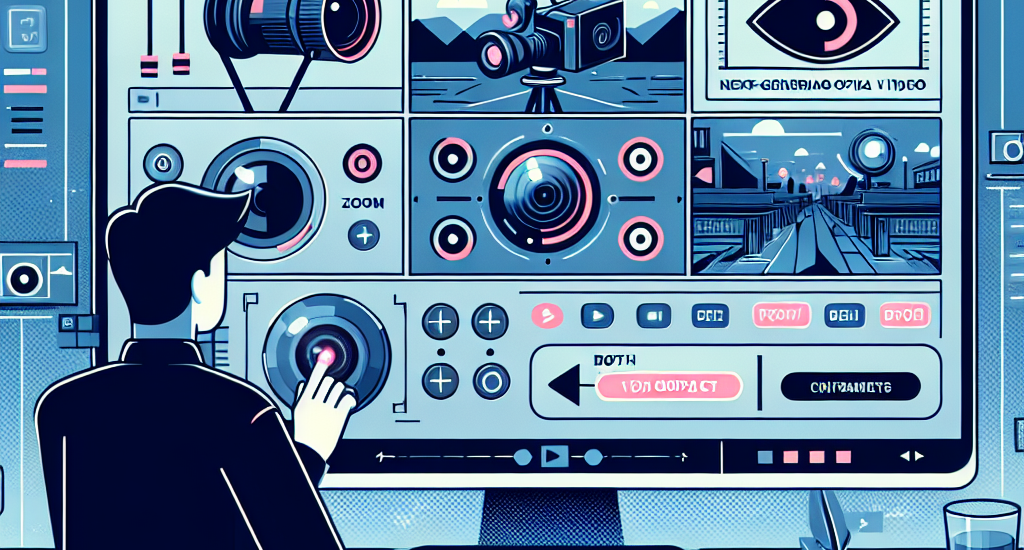

Abstract: Recent advances in text-conditioned video diffusion have greatly improved video quality. However, these methods offer users limited or sometimes no control over camera aspects, including dynamic camera motion, zoom, lens distortion, and focus shifts. These motion and optical aspects are crucial for adding controllability and cinematic elements to generation frameworks, ultimately resulting in visual content that draws focus, enhances mood, and guides emotions according to filmmakers’ controls. In this paper, we aim to close the gap between controllable video generation and camera optics. To achieve this, we propose AKiRa (Augmentation Kit on Rays), a novel augmentation framework that builds and trains a camera adapter with a complex camera model over an existing video generation backbone. It enables fine-tuned control over camera motion as well as complex optical parameters (focal length, distortion, aperture) to achieve cinematic effects such as zoom, fisheye effect, and bokeh. Extensive experiments demonstrate AKiRa’s effectiveness in combining and composing camera optics while outperforming all state-of-the-art methods. This work sets a new landmark in controlled and optically enhanced video generation, paving the way for future optical video generation methods.

Source: http://arxiv.org/abs/2412.14158v1