Authors: Wenzhao Zheng, Zetian Xia, Yuanhui Huang, Sicheng Zuo, Jie Zhou, Jiwen Lu

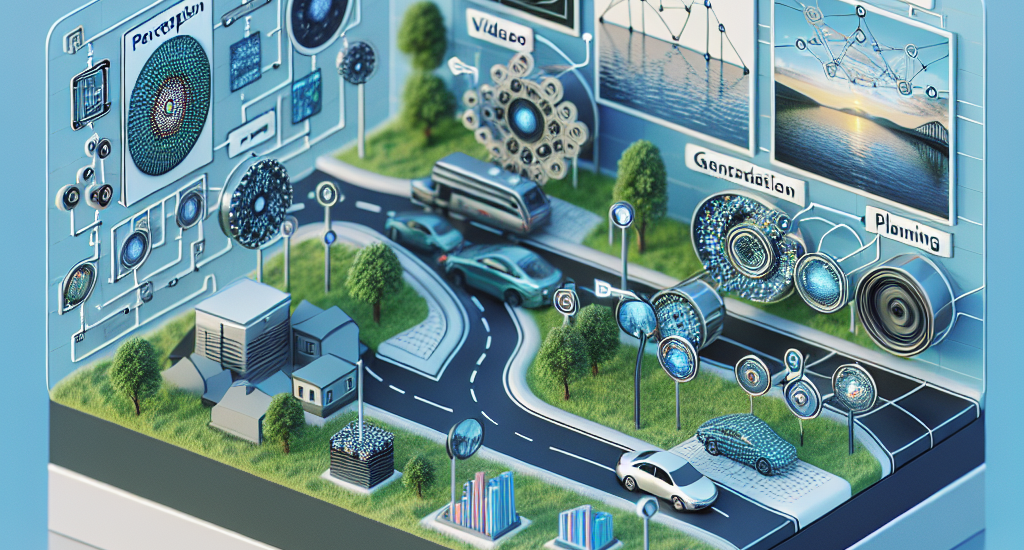

Abstract: End-to-end autonomous driving has received increasing attention due to its potential to learn from large amounts of data. However, most existing methods are still open-loop and suffer from weak scalability, lack of high-order interactions, and inefficient decision-making. In this paper, we explore a closed-loop framework for autonomous driving and propose a large Driving wOrld modEl (Doe-1) for unified perception, prediction, and planning. We formulate autonomous driving as a next-token generation problem and use multi-modal tokens to accomplish different tasks. Specifically, we use free-form texts (i.e., scene descriptions) for perception and generate future predictions directly in the RGB space with image tokens. For planning, we employ a position-aware tokenizer to effectively encode action into discrete tokens. We train a multi-modal transformer to autoregressively generate perception, prediction, and planning tokens in an end-to-end and unified manner. Experiments on the widely used nuScenes dataset demonstrate the effectiveness of Doe-1 in various tasks including visual question-answering, action-conditioned video generation, and motion planning. Code: https://github.com/wzzheng/Doe.

Source: http://arxiv.org/abs/2412.09627v1
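
The abstract mentions encoding actions into discrete tokens so that planning can be treated as next-token generation. As a rough illustration of that general idea only, the sketch below quantizes a 2D ego displacement into a single token id by uniform binning. This is not the paper's position-aware tokenizer, whose details are not given in the abstract; the class name `UniformActionTokenizer`, the value range, and the bin count are all hypothetical choices made for the example.

```python
import numpy as np


class UniformActionTokenizer:
    """Hypothetical uniform-bin tokenizer for 2D displacements (dx, dy).

    Assumption: this is an illustrative stand-in, not the tokenizer used by Doe-1.
    """

    def __init__(self, low=-5.0, high=5.0, bins_per_axis=128):
        self.low = low                      # assumed minimum displacement (meters)
        self.high = high                    # assumed maximum displacement (meters)
        self.bins = bins_per_axis           # assumed number of bins per axis
        self.width = (high - low) / bins_per_axis

    @property
    def vocab_size(self):
        # One token per (x-bin, y-bin) pair.
        return self.bins * self.bins

    def encode(self, dxy):
        """Map a displacement (dx, dy) to one integer token id."""
        dxy = np.clip(np.asarray(dxy, dtype=np.float64), self.low, self.high)
        idx = np.floor((dxy - self.low) / self.width).astype(int)
        idx = np.clip(idx, 0, self.bins - 1)  # the upper edge falls into the last bin
        return int(idx[0] * self.bins + idx[1])

    def decode(self, token):
        """Map a token id back to the center of its bin."""
        ix, iy = divmod(int(token), self.bins)
        return np.array([
            self.low + (ix + 0.5) * self.width,
            self.low + (iy + 0.5) * self.width,
        ])


tokenizer = UniformActionTokenizer()
token = tokenizer.encode([1.25, -0.4])
recovered = tokenizer.decode(token)
```

Under these assumptions, decoding returns the bin center, so the reconstruction error per axis is bounded by half the bin width. A finer grid reduces that error at the cost of a larger action vocabulary.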