Authors: Yi-Lun Lee, Yi-Hsuan Tsai, Wei-Chen Chiu

Abstract: While large vision-language models (LVLMs) have shown impressive capabilities

in generating plausible responses correlated with input visual contents, they

still suffer from hallucinations, where the generated text inaccurately

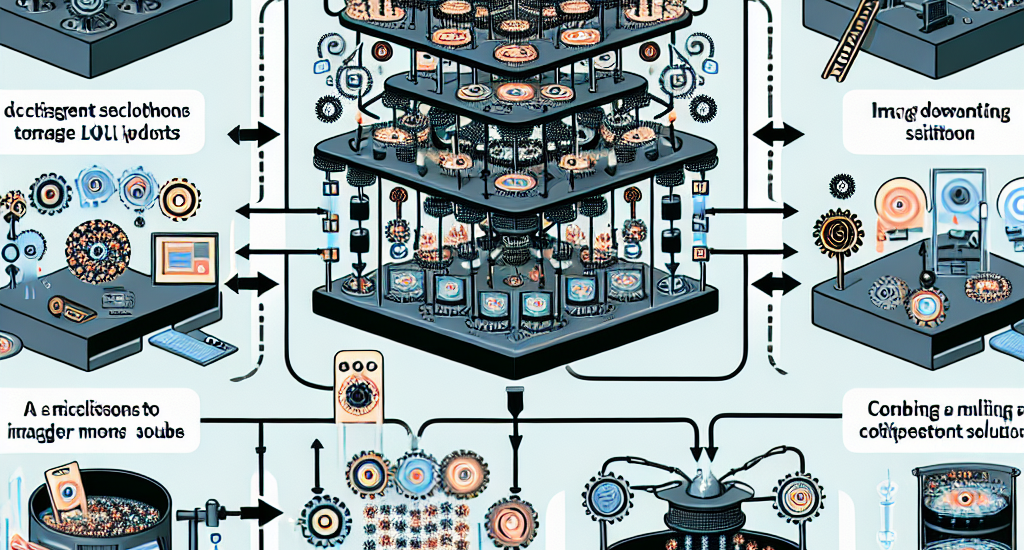

reflects visual contents. To address this, recent approaches apply contrastive

decoding to calibrate the model’s response via contrasting output distributions

with original and visually distorted samples, demonstrating promising

hallucination mitigation in a training-free manner. However, the potential of

changing information in visual inputs is not well-explored, so a deeper

investigation into the behaviors of visual contrastive decoding is of great

interest. In this paper, we first explore various methods for contrastive

decoding to change visual contents, including image downsampling and editing.

Downsampling images reduces the detailed textual information while editing

yields new contents in images, providing new aspects as visual contrastive

samples. To further study benefits by using different contrastive samples, we

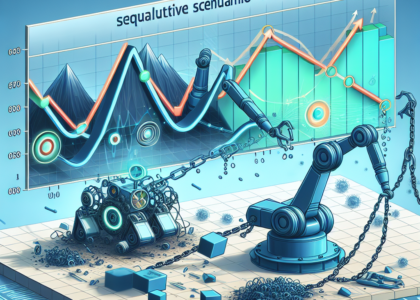

analyze probability-level metrics, including entropy and distribution distance.

Interestingly, the effect of these samples in mitigating hallucinations varies

a lot across LVLMs and benchmarks. Based on our analysis, we propose a simple

yet effective method to combine contrastive samples, offering a practical

solution for applying contrastive decoding across various scenarios. Extensive

experiments are conducted to validate the proposed fusion method among

different benchmarks.
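
To make the ideas in the abstract concrete, the following is a minimal sketch of contrastive decoding over next-token logits, together with the two probability-level metrics mentioned (entropy and a distribution distance). It rests on several assumptions not stated in the abstract: the contrast uses the common `(1 + alpha) * original - alpha * distorted` form, the distribution distance is taken to be Jensen-Shannon divergence, and the fusion step simply averages the logits of several distorted samples. The function names, `alpha`, and the toy logits are hypothetical and do not reproduce the paper's actual fusion method.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy (in nats) of a probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def kl_divergence(p, q):
    """KL(p || q); assumes q > 0 wherever p > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p, q):
    """Jensen-Shannon divergence, used here as an assumed distribution distance."""
    mid = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, mid) + 0.5 * kl_divergence(q, mid)


def contrastive_logits(orig, distorted, alpha=1.0):
    """Amplify what the original image supports relative to the distorted one."""
    return [(1 + alpha) * o - alpha * d for o, d in zip(orig, distorted)]


def fused_contrastive_logits(orig, distorted_list, alpha=1.0):
    """Hypothetical fusion: average the distorted logits, then contrast once."""
    n = len(distorted_list)
    mean_distorted = [sum(col) / n for col in zip(*distorted_list)]
    return contrastive_logits(orig, mean_distorted, alpha)


# Toy next-token logits over a 3-token vocabulary (illustrative values only).
orig = [2.0, 1.0, 0.0]          # conditioned on the original image
downsampled = [1.0, 1.0, 0.0]   # conditioned on a downsampled image
edited = [2.0, 2.0, 0.0]        # conditioned on an edited image

fused = fused_contrastive_logits(orig, [downsampled, edited], alpha=1.0)
p_orig = softmax(orig)
p_fused = softmax(fused)

print("fused logits:", fused)
print("entropy before/after:", entropy(p_orig), entropy(p_fused))
print("JS distance orig vs. downsampled:", js_divergence(p_orig, softmax(downsampled)))
```

In this toy setting the contrast sharpens the distribution toward the token that only the original image supports. A real decoder would apply the same arithmetic to the LVLM's logits at every generation step.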

Source: http://arxiv.org/abs/2412.06775v1