Authors: Wenjia Wang, Liang Pan, Zhiyang Dou, Zhouyingcheng Liao, Yuke Lou, Lei Yang, Jingbo Wang, Taku Komura

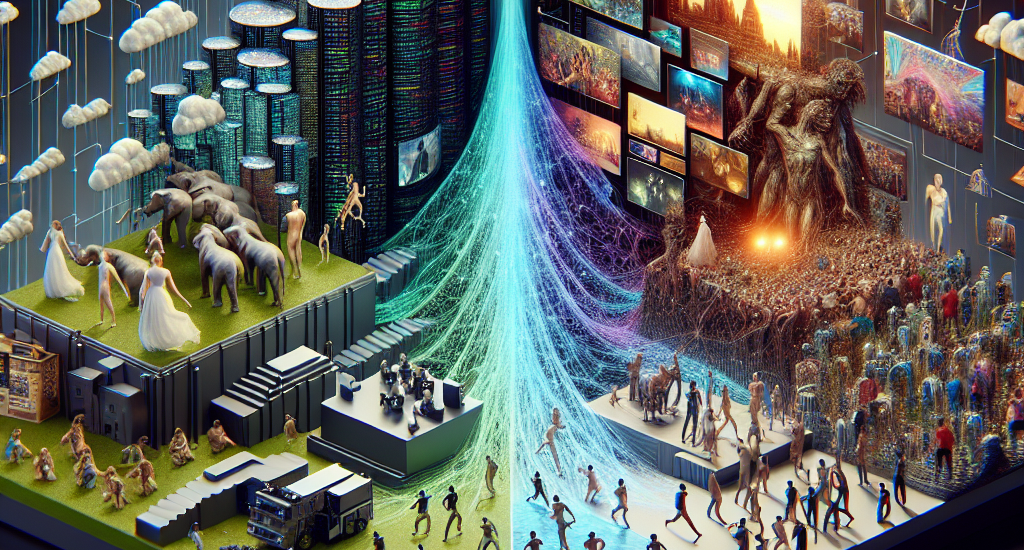

Abstract: Simulating long-term human-scene interaction is a challenging yet fascinating

task. Previous works have not effectively addressed the generation of long-term

human scene interactions with detailed narratives for physics-based animation.

This paper introduces a novel framework for the planning and controlling of

long-horizon physical plausible human-scene interaction. On the one hand, films

and shows with stylish human locomotions or interactions with scenes are

abundantly available on the internet, providing a rich source of data for

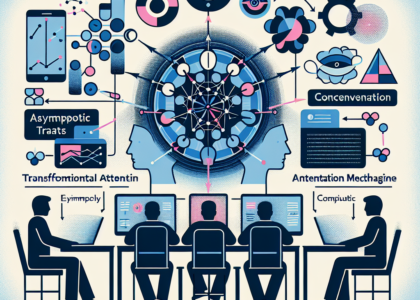

script planning. On the other hand, Large Language Models (LLMs) can understand

and generate logical storylines.

This motivates us to marry the two by using an LLM-based pipeline to extract

scripts from videos, and then employ LLMs to imitate and create new scripts,

capturing complex, time-series human behaviors and interactions with

environments. By leveraging this, we utilize a dual-aware policy that achieves

both language comprehension and scene understanding to guide character motions

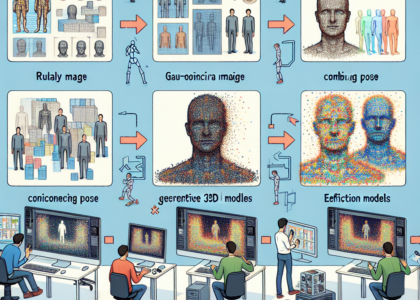

within contextual and spatial constraints. To facilitate training and

evaluation, we contribute a comprehensive planning dataset containing diverse

motion sequences extracted from real-world videos and expand them with large

language models. We also collect and re-annotate motion clips from existing

kinematic datasets to enable our policy learn diverse skills. Extensive

experiments demonstrate the effectiveness of our framework in versatile task

execution and its generalization ability to various scenarios, showing

remarkably enhanced performance compared with existing methods. Our code and

data will be publicly available soon.

Source: http://arxiv.org/abs/2411.19921v1