Authors: Maya Varma, Jean-Benoit Delbrouck, Zhihong Chen, Akshay Chaudhari, Curtis Langlotz

Abstract: Fine-tuned vision-language models (VLMs) often capture spurious correlations

between image features and textual attributes, resulting in degraded zero-shot

performance at test time. Existing approaches for addressing spurious

correlations (i) primarily operate at the global image-level rather than

intervening directly on fine-grained image features and (ii) are predominantly

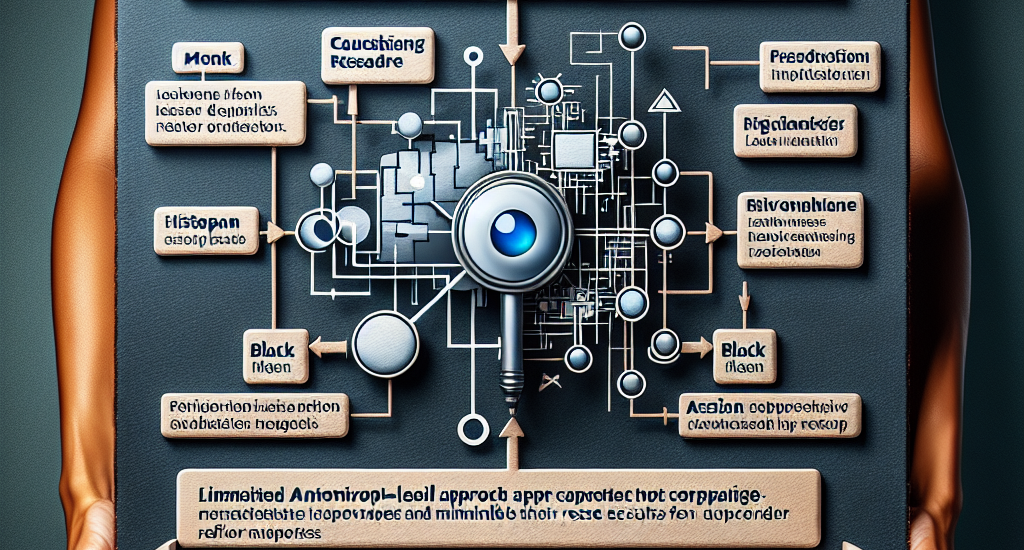

designed for unimodal settings. In this work, we present RaVL, which takes a

fine-grained perspective on VLM robustness by discovering and mitigating

spurious correlations using local image features rather than operating at the

global image level. Given a fine-tuned VLM, RaVL first discovers spurious

correlations by leveraging a region-level clustering approach to identify

precise image features contributing to zero-shot classification errors. Then,

RaVL mitigates the identified spurious correlation with a novel region-aware

loss function that enables the VLM to focus on relevant regions and ignore

spurious relationships during fine-tuning. We evaluate RaVL on 654 VLMs with

various model architectures, data domains, and learned spurious correlations.

Our results show that RaVL accurately discovers (191% improvement over the

closest baseline) and mitigates (8.2% improvement on worst-group image

classification accuracy) spurious correlations. Qualitative evaluations on

general-domain and medical-domain VLMs confirm our findings.
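
The mitigation result above is reported as worst-group image classification accuracy. As a minimal sketch of how such a metric is commonly computed, the snippet below assumes that a group is a (class label, spurious attribute) pair; this grouping, the function name `worst_group_accuracy`, and the toy data are illustrative assumptions and are not taken from the paper.

```python
from collections import defaultdict


def worst_group_accuracy(labels, preds, spurious_attrs):
    """Return (worst per-group accuracy, dict of per-group accuracies).

    Assumption: a group is a (class label, spurious attribute) pair.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for y, p, a in zip(labels, preds, spurious_attrs):
        key = (y, a)
        total[key] += 1
        correct[key] += int(y == p)
    per_group = {k: correct[k] / total[k] for k in total}
    return min(per_group.values()), per_group


# Toy data, made up for illustration only.
labels = [0, 0, 0, 0, 1, 1, 1, 1]
spurious_attrs = [0, 0, 1, 1, 0, 0, 1, 1]
preds = [0, 0, 1, 0, 1, 0, 1, 1]

worst, per_group = worst_group_accuracy(labels, preds, spurious_attrs)
overall = sum(int(y == p) for y, p in zip(labels, preds)) / len(labels)
print(f"overall={overall:.2f} worst-group={worst:.2f}")
```

On this toy data the overall accuracy is 0.75 while the worst group reaches only 0.50, which illustrates why a worst-group metric can expose reliance on a spurious attribute that an aggregate score hides.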

Source: http://arxiv.org/abs/2411.04097v1