Authors: Mohammad Erfan Sadeghi, Arash Fayyazi, Suhas Somashekar, Massoud Pedram

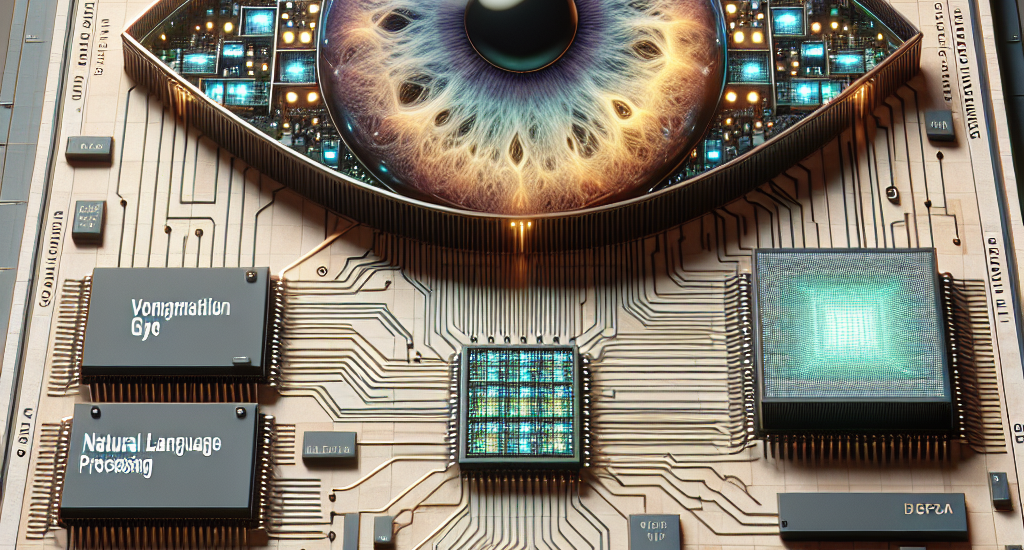

Abstract: Vision Transformers (ViTs) represent a groundbreaking shift in machine learning approaches to computer vision. Unlike traditional approaches, ViTs employ the self-attention mechanism, which has been widely used in natural language processing, to analyze image patches. Despite their advantages in modeling visual tasks, deploying ViTs on hardware platforms, notably Field-Programmable Gate Arrays (FPGAs), introduces considerable challenges. These challenges stem primarily from the non-linear calculations and the high computational and memory demands of ViTs. This paper introduces CHOSEN, a software-hardware co-design framework that addresses these challenges and automates ViT deployment on FPGAs in order to maximize performance. Our framework is built upon three fundamental contributions: (1) a multi-kernel design that maximizes bandwidth, mainly by exploiting multiple DDR memory banks; (2) approximate non-linear functions that exhibit minimal accuracy degradation and make efficient use of the available logic blocks on the FPGA; and (3) an efficient compiler that maximizes the performance and memory efficiency of the computing kernels through a novel design space exploration algorithm, which finds the hardware configuration that achieves the best throughput and latency. Compared to state-of-the-art ViT accelerators, CHOSEN achieves 1.5x and 1.42x throughput improvements on the DeiT-S and DeiT-B models, respectively.
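
To illustrate what approximating a non-linear function can look like, the sketch below compares the exact GELU activation (used in the feed-forward layers of ViT-style models) with the well-known tanh-based approximation, and measures the worst-case gap on a sampled interval. This is a generic approximation chosen purely for illustration; it is an assumption, not necessarily the approximation CHOSEN uses, and the names `gelu_exact`, `gelu_tanh`, and `max_abs_error` are hypothetical.

```python
import math


def gelu_exact(x: float) -> float:
    """Exact GELU: x * Phi(x), with Phi the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu_tanh(x: float) -> float:
    """Tanh-based GELU approximation (illustrative, not CHOSEN's method)."""
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
    return 0.5 * x * (1.0 + math.tanh(inner))


def max_abs_error(lo: float = -6.0, hi: float = 6.0, steps: int = 1201) -> float:
    """Largest absolute gap between the two functions on a uniform grid."""
    step = (hi - lo) / (steps - 1)
    points = (lo + i * step for i in range(steps))
    return max(abs(gelu_exact(p) - gelu_tanh(p)) for p in points)


if __name__ == "__main__":
    print(f"max |exact - approx| on [-6, 6]: {max_abs_error():.6f}")
```

An error sweep of this kind is one simple way to check that a cheaper replacement stays close to the original function before evaluating its effect on end-to-end model accuracy.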

Source: http://arxiv.org/abs/2407.12736v1