Authors: Weicai Ye, Chenhao Ji, Zheng Chen, Junyao Gao, Xiaoshui Huang, Song-Hai Zhang, Wanli Ouyang, Tong He, Cairong Zhao, Guofeng Zhang

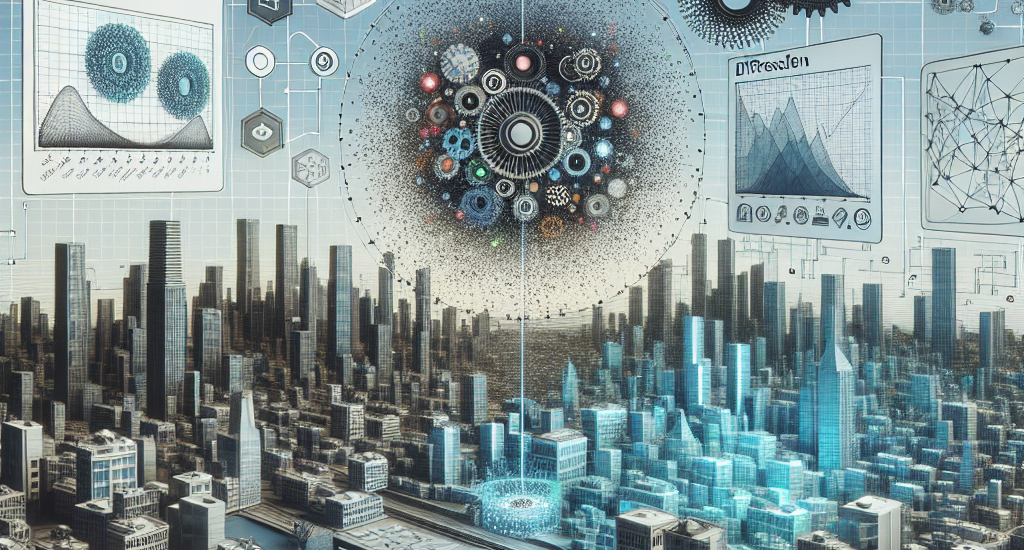

Abstract: Diffusion-based methods have achieved remarkable achievements in 2D image or

3D object generation, however, the generation of 3D scenes and even

$360^{\circ}$ images remains constrained, due to the limited number of scene

datasets, the complexity of 3D scenes themselves, and the difficulty of

generating consistent multi-view images. To address these issues, we first

establish a large-scale panoramic video-text dataset containing millions of

consecutive panoramic keyframes with corresponding panoramic depths, camera

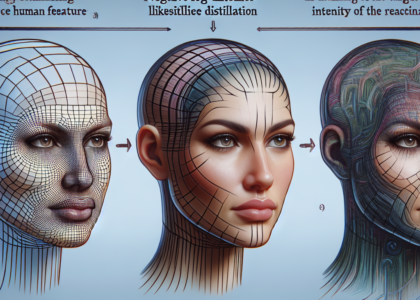

poses, and text descriptions. Then, we propose a novel text-driven panoramic

generation framework, termed DiffPano, to achieve scalable, consistent, and

diverse panoramic scene generation. Specifically, benefiting from the powerful

generative capabilities of stable diffusion, we fine-tune a single-view

text-to-panorama diffusion model with LoRA on the established panoramic

video-text dataset. We further design a spherical epipolar-aware multi-view

diffusion model to ensure the multi-view consistency of the generated panoramic

images. Extensive experiments demonstrate that DiffPano can generate scalable,

consistent, and diverse panoramic images with given unseen text descriptions

and camera poses.
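The abstract only states that the single-view text-to-panorama model is fine-tuned from Stable Diffusion with LoRA. As a hedged illustration of what LoRA does in general, the sketch below applies the standard low-rank reparameterization to one linear layer: the pretrained weight $W$ stays frozen and only the factors $A$ and $B$ are trained, so the layer computes $(W + \frac{\alpha}{r} BA)x$. It is written in pure Python to stay self-contained; the class name `LoRALinear`, the rank, `alpha`, and the initialization scale are illustrative assumptions, and the abstract does not say which layers DiffPano adapts or with what rank.

```python
import random
from typing import List

# Illustrative sketch of the generic LoRA update; names and defaults are hypothetical,
# not taken from DiffPano.
Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain nested-list matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


class LoRALinear:
    """Frozen weight W (out x in) plus a trainable rank-r update (alpha / r) * B @ A."""

    def __init__(self, weight: Matrix, rank: int, alpha: float = 1.0, seed: int = 0):
        out_dim, in_dim = len(weight), len(weight[0])
        rng = random.Random(seed)
        self.weight = weight  # pretrained weight, never updated during fine-tuning
        # A (r x in): small random init; B (out x r): zeros, so B @ A = 0 at the start.
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(in_dim)] for _ in range(rank)]
        self.b = [[0.0] * rank for _ in range(out_dim)]
        self.scale = alpha / rank

    def trainable_params(self) -> int:
        """Number of entries in A and B, the only trained parameters."""
        return len(self.a) * len(self.a[0]) + len(self.b) * len(self.b[0])

    def forward(self, x: List[float]) -> List[float]:
        """Compute (W + scale * B @ A) @ x."""
        delta = matmul(self.b, self.a)
        return [sum((self.weight[i][j] + self.scale * delta[i][j]) * x[j]
                    for j in range(len(x)))
                for i in range(len(self.weight))]
```

Because $B$ starts at zero, the adapted layer initially reproduces the frozen layer exactly, and a rank-$r$ adapter trains only $r \cdot (\text{in} + \text{out})$ parameters instead of $\text{in} \cdot \text{out}$, which is what makes fine-tuning a large pretrained model on a new domain such as panoramas comparatively cheap.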
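The abstract does not spell out how the spherical epipolar constraint enters the multi-view model. As a sketch of the underlying geometry only, the code below maps equirectangular pixels to unit rays on the sphere and uses the standard essential-matrix relation $d_2^\top [t]_\times R\, d_1 = 0$: for a ray $d_1$ in one panorama, the matching rays $d_2$ in another panorama lie on a great circle with normal $[t]_\times R\, d_1$. From that it builds a boolean mask over the second panorama, the kind of mask an epipolar-aware attention layer could use. The pixel-to-ray convention, the pose convention $X_2 = R X_1 + t$, the tolerance `tol`, and every function name here are our own assumptions, not DiffPano's implementation.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical helpers for illustration only; not DiffPano's implementation.
Vec3 = Tuple[float, float, float]


def pixel_to_ray(u: float, v: float, width: int, height: int) -> Vec3:
    """Map an equirectangular pixel (u, v) to a unit direction on the sphere.

    Assumed convention: longitude runs from -pi (left edge) to pi (right edge),
    latitude from pi/2 (top) to -pi/2 (bottom); y points up and z forward.
    """
    lon = (u / width) * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (v / height) * math.pi
    return (math.cos(lat) * math.sin(lon),
            math.sin(lat),
            math.cos(lat) * math.cos(lon))


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a: Vec3) -> Vec3:
    n = math.sqrt(dot(a, a))
    return (a[0] / n, a[1] / n, a[2] / n)


def mat_vec(m: Sequence[Vec3], x: Vec3) -> Vec3:
    """Multiply a 3x3 matrix, given as three rows, by a 3-vector."""
    return (dot(m[0], x), dot(m[1], x), dot(m[2], x))


def epipolar_normal(d1: Vec3, rot: Sequence[Vec3], t: Vec3) -> Vec3:
    """Return E @ d1 with E = [t]_x R, for the pose convention X2 = R @ X1 + t.

    Every view-2 ray d2 that sees the same 3D point as d1 satisfies
    d2 . (E @ d1) = 0, so this vector is the normal of a great circle.
    """
    return cross(t, mat_vec(rot, d1))


def epipolar_residual(d1: Vec3, d2: Vec3, rot: Sequence[Vec3], t: Vec3) -> float:
    """Spherical epipolar residual d2^T E d1 (zero for a true correspondence)."""
    return dot(d2, epipolar_normal(d1, rot, t))


def epipolar_mask(u1: float, v1: float, width: int, height: int,
                  rot: Sequence[Vec3], t: Vec3, tol: float = 0.05) -> List[List[bool]]:
    """Mask over view-2 pixels whose rays lie near the epipolar great circle of
    view-1 pixel (u1, v1): a pixel is kept when |d2 . n_hat| <= tol, i.e. when
    its ray is within asin(tol) radians of the circle."""
    n = epipolar_normal(pixel_to_ray(u1, v1, width, height), rot, t)
    if math.sqrt(dot(n, n)) < 1e-12:
        raise ValueError("zero baseline or ray aligned with the baseline")
    n_hat = normalize(n)
    # Sample each view-2 pixel at its center (u + 0.5, v + 0.5).
    return [[abs(dot(pixel_to_ray(u + 0.5, v + 0.5, width, height), n_hat)) <= tol
             for u in range(width)]
            for v in range(height)]
```

As a sanity check of the geometry: with no rotation and a pure sideways translation $t = (1, 0, 0)$, the forward-facing ray at the panorama center has the horizon as its epipolar great circle, so on a 64 x 32 panorama the mask keeps exactly the two rows nearest the equator.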

Source: http://arxiv.org/abs/2410.24203v1