Authors: Minghao Ning, Ahmad Reza Alghooneh, Chen Sun, Ruihe Zhang, Pouya Panahandeh, Steven Tuer, Ehsan Hashemi, Amir Khajepour

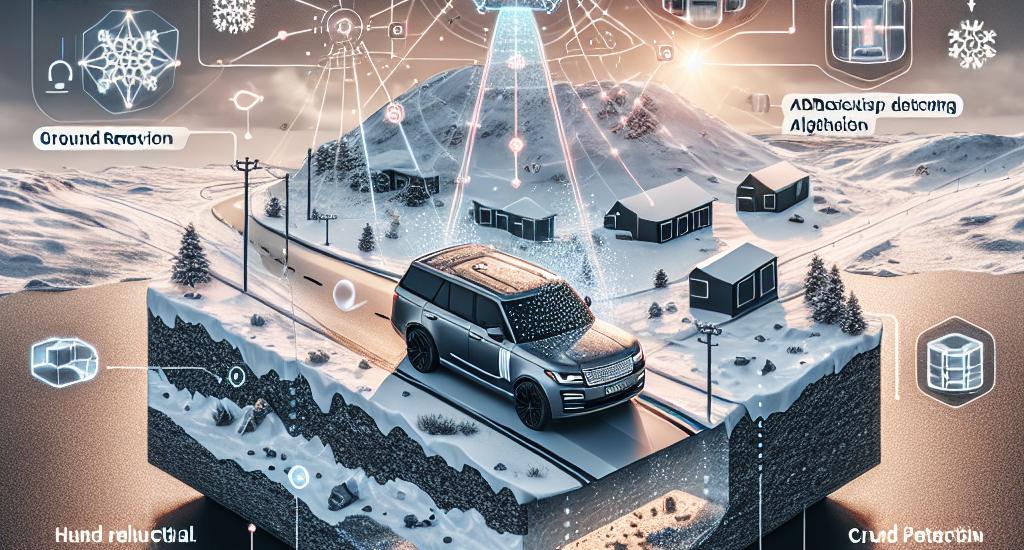

Abstract: In this paper, we propose an accurate and robust perception module for drivable space extraction in Autonomous Vehicles (AVs). Perception is crucial in autonomous driving, where many deep learning-based methods, while accurate on benchmark datasets, fail to generalize effectively, especially in diverse and unpredictable environments. Our work introduces a robust, easy-to-generalize perception module that fuses LiDAR, camera, and HD map data to deliver a safe and reliable drivable space in all weather conditions. We present an adaptive ground removal and curb detection method integrated with HD map data for more reliable obstacle detection. Additionally, we propose an adaptive DBSCAN clustering algorithm optimized for precipitation noise, and a cost-effective LiDAR-camera frustum association that is resilient to calibration discrepancies. Our comprehensive drivable space representation incorporates all perception data, ensuring compatibility with vehicle dimensions and road regulations. This approach not only improves generalization and efficiency but also significantly enhances safety in autonomous vehicle operations. Our approach is tested on a real dataset, and its reliability is verified during the daily operation of our autonomous shuttle, WATonoBus, including in harsh snowy weather.
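The abstract does not say how the DBSCAN clustering is adapted to precipitation noise. As a rough illustration only, the sketch below assumes one common approach: the neighborhood radius grows with horizontal range (LiDAR returns get sparser farther from the sensor), and a minimum-neighbor threshold discards the sparse, isolated returns typical of falling snow or rain. The names `adaptive_dbscan`, `adaptive_eps`, `base_eps`, `k`, and `min_pts`, and their default values, are hypothetical and not taken from the paper.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]
NOISE = -1

def adaptive_eps(p: Point, base_eps: float, k: float) -> float:
    """Hypothetical neighborhood radius that grows linearly with horizontal range."""
    return base_eps + k * math.hypot(p[0], p[1])

def adaptive_dbscan(points: Sequence[Point], base_eps: float = 0.3,
                    k: float = 0.01, min_pts: int = 5) -> List[int]:
    """Return a cluster label per point; NOISE (-1) marks sparse returns such as snowflakes."""
    n = len(points)
    labels: List[Optional[int]] = [None] * n  # None = not yet visited

    def neighbors(i: int) -> List[int]:
        # Brute-force O(n) search; a k-d tree or voxel grid would be used in practice.
        eps = adaptive_eps(points[i], base_eps, k)
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster = 0
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE  # may later be relabeled as a border point
            continue
        labels[i] = cluster
        queue = seeds
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: joins the cluster but is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_seeds = neighbors(j)
            if len(j_seeds) >= min_pts:
                queue.extend(j_seeds)  # core point: keep growing the cluster
        cluster += 1
    return [lbl if lbl is not None else NOISE for lbl in labels]
```

Because the radius depends on the query point, the neighbor relation in this sketch is not symmetric; that is tolerable for illustration, but a production variant might, for example, use the larger of the two radii for each pair.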
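The abstract likewise does not detail the LiDAR-camera frustum association. The following sketch assumes one simple, inexpensive variant: points of each LiDAR cluster, already expressed in the camera frame, are projected through a 3x4 camera matrix `P`, and a cluster is associated with a 2D detection box when enough of its projected points fall inside that box enlarged by a pixel margin. The margin is what would absorb small calibration errors. `P`, `margin`, `min_score`, and all function names are illustrative assumptions, not the paper's method.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]
Box = Tuple[float, float, float, float]  # (u_min, v_min, u_max, v_max) in pixels

def project(P: Sequence[Sequence[float]], pt: Point) -> Optional[Tuple[float, float]]:
    """Project a camera-frame 3D point to pixel coordinates; None if behind the camera."""
    x, y, z = pt
    u, v, w = (r[0] * x + r[1] * y + r[2] * z + r[3] for r in P)
    if w <= 0:
        return None
    return u / w, v / w

def frustum_score(P: Sequence[Sequence[float]], cluster: List[Point],
                  box: Box, margin: float) -> float:
    """Fraction of a cluster's projectable points inside the margin-expanded box."""
    u_min, v_min, u_max, v_max = box
    inside = valid = 0
    for pt in cluster:
        uv = project(P, pt)
        if uv is None:
            continue
        valid += 1
        u, v = uv
        if u_min - margin <= u <= u_max + margin and v_min - margin <= v <= v_max + margin:
            inside += 1
    return inside / valid if valid else 0.0

def associate(P: Sequence[Sequence[float]], clusters: List[List[Point]], boxes: List[Box],
              margin: float = 20.0, min_score: float = 0.5) -> Dict[int, int]:
    """Greedily map each box index to its best-scoring cluster index (hypothetical scheme)."""
    matches: Dict[int, int] = {}
    for b_idx, box in enumerate(boxes):
        best_idx, best_score = None, min_score
        for c_idx, cluster in enumerate(clusters):
            score = frustum_score(P, cluster, box, margin)
            if score >= best_score:
                best_idx, best_score = c_idx, score
        if best_idx is not None:
            matches[b_idx] = best_idx
    return matches
```

This greedy matching lets one cluster serve several boxes; an exclusive one-to-one assignment (for example, Hungarian matching on the scores) is an equally plausible design choice.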

Source: http://arxiv.org/abs/2410.22314v1