Authors: Bosong Ding, Murat Kirtay, Giacomo Spigler

Abstract: Head movements are crucial for social human-human interaction. They can

transmit important cues (e.g., joint attention, speaker detection) that cannot

be achieved with verbal interaction alone. This advantage also holds for

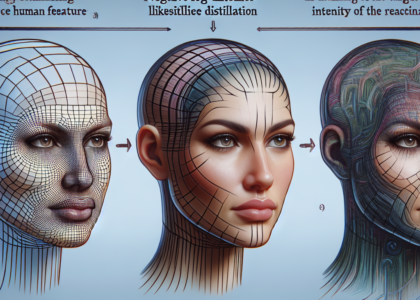

human-robot interaction. Even though modeling human motions through generative

AI models has become an active research area within robotics in recent years,

the use of these methods for producing head movements in human-robot

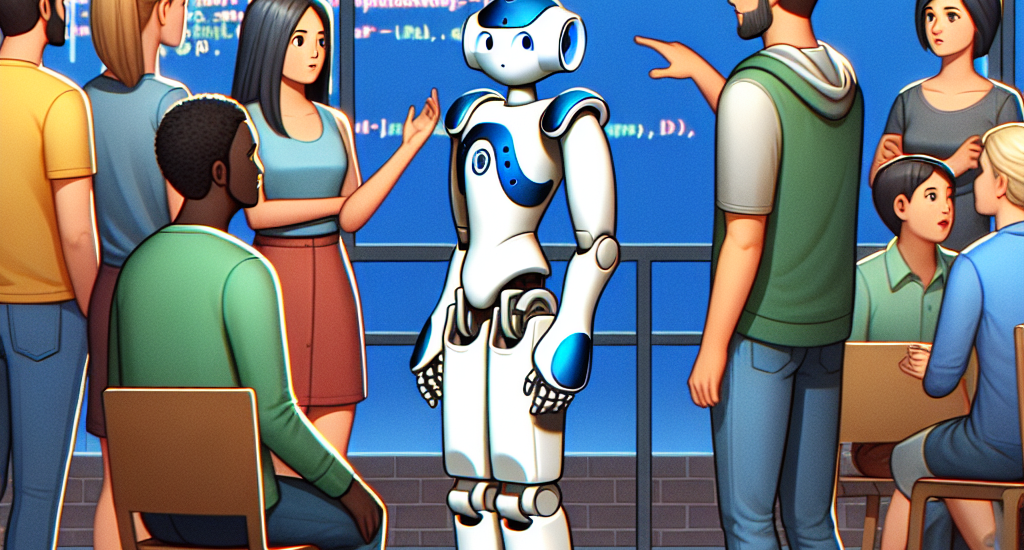

interaction remains underexplored. In this work, we employed a generative AI

pipeline to produce human-like head movements for a Nao humanoid robot. In

addition, we tested the system on a real-time active-speaker tracking task in a

group conversation setting. Overall, the results show that the Nao robot

successfully imitates human head movements in a natural manner while actively

tracking the speakers during the conversation. Code and data from this study

are available at https://github.com/dingdingding60/Humanoids2024HRI

Source: http://arxiv.org/abs/2407.11915v1