Authors: Zhenyu Jiang, Yuqi Xie, Jinhan Li, Ye Yuan, Yifeng Zhu, Yuke Zhu

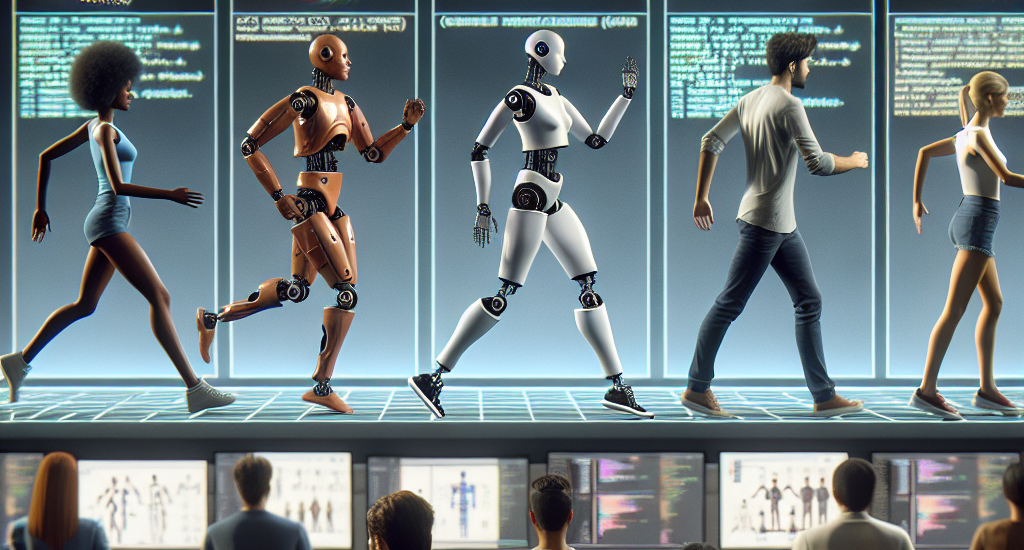

Abstract: Humanoid robots, with their human-like embodiment, have the potential to integrate seamlessly into human environments. Critical to their coexistence and cooperation with humans is the ability to understand natural language communications and exhibit human-like behaviors. This work focuses on generating diverse whole-body motions for humanoid robots from language descriptions. We leverage human motion priors from extensive human motion datasets to initialize humanoid motions and employ the commonsense reasoning capabilities of Vision Language Models (VLMs) to edit and refine these motions. Our approach demonstrates the capability to produce natural, expressive, and text-aligned humanoid motions, validated through both simulated and real-world experiments. More videos can be found at https://ut-austin-rpl.github.io/Harmon/.

Source: http://arxiv.org/abs/2410.12773v1