Authors: Honghui Yang, Di Huang, Wei Yin, Chunhua Shen, Haifeng Liu, Xiaofei He, Binbin Lin, Wanli Ouyang, Tong He

Abstract: Video depth estimation has long been hindered by the scarcity of consistent

and scalable ground truth data, leading to inconsistent and unreliable results.

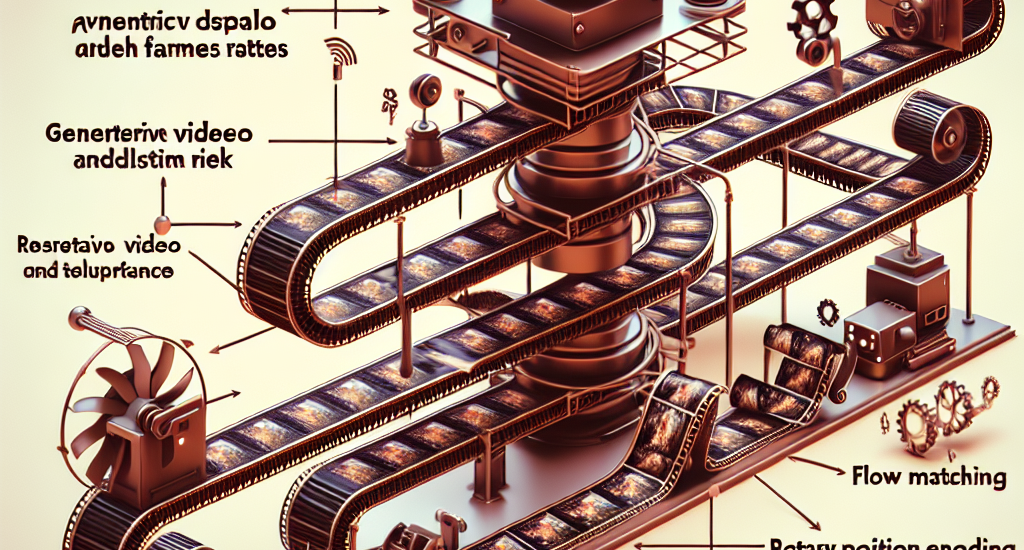

In this paper, we introduce Depth Any Video, a model that tackles the challenge

through two key innovations. First, we develop a scalable synthetic data

pipeline, capturing real-time video depth data from diverse synthetic

environments, yielding 40,000 video clips of 5-second duration, each with

precise depth annotations. Second, we leverage the powerful priors of

generative video diffusion models to handle real-world videos effectively,

integrating advanced techniques such as rotary position encoding and flow

matching to further enhance flexibility and efficiency. Unlike previous models,

which are limited to fixed-length video sequences, our approach introduces a

novel mixed-duration training strategy that handles videos of varying lengths

and performs robustly across different frame rates-even on single frames. At

inference, we propose a depth interpolation method that enables our model to

infer high-resolution video depth across sequences of up to 150 frames. Our

model outperforms all previous generative depth models in terms of spatial

accuracy and temporal consistency.
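The abstract names flow matching but does not give its formulation. As a rough illustration only, the sketch below shows the generic straight-path (rectified-flow style) objective and a fixed-step Euler sampler, assuming t = 0 is Gaussian noise and t = 1 is the clean sample (for instance, a depth latent). The function names are hypothetical, and the paper's actual parameterization, time sampling, and latent space may differ.

```python
import random


def interpolate(x0, x1, t):
    """Point on the straight path from noise x0 (t=0) to data x1 (t=1)."""
    return [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]


def velocity_target(x0, x1):
    """Regression target on the straight path: dx_t/dt = x1 - x0 (constant in t)."""
    return [b - a for a, b in zip(x0, x1)]


def flow_matching_loss(predict_velocity, x1, rng):
    """One Monte Carlo sample of the mean-squared flow-matching loss.

    Assumption: t is drawn uniformly; real systems often use other schedules.
    """
    x0 = [rng.gauss(0.0, 1.0) for _ in x1]  # Gaussian noise, same shape as data
    t = rng.random()                         # t ~ U[0, 1)
    xt = interpolate(x0, x1, t)
    target = velocity_target(x0, x1)
    pred = predict_velocity(xt, t)
    return sum((p - v) ** 2 for p, v in zip(pred, target)) / len(x1)


def euler_sample(predict_velocity, x0, steps):
    """Integrate dx/dt = v(x, t) from t=0 (noise) to t=1 (data) with fixed-step Euler."""
    x, dt = list(x0), 1.0 / steps
    for i in range(steps):
        v = predict_velocity(x, i * dt)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


# Usage with a toy "oracle" that already knows the noise/data pair:
noise, data = [0.5, -1.0], [2.0, 3.0]
oracle = lambda x, t: velocity_target(noise, data)
print(euler_sample(oracle, noise, steps=4))  # lands on the data point
```

Because the straight path has constant velocity, an exact predictor reaches the data in very few Euler steps; a learned predictor is only approximate, but this property is one commonly given reason flow matching is described as efficient at sampling time.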

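The abstract also mentions rotary position encoding without saying how it is applied (for example, along the frame axis). The sketch below is the standard one-dimensional RoPE from RoFormer, with the conventional base of 10000 taken as an assumption; the helper names are hypothetical. It rotates consecutive channel pairs by an angle proportional to position, so the dot product between a query and a key depends only on their relative offset, one commonly cited reason RoPE copes with varying sequence lengths.

```python
import math


def rope(vec, pos, base=10000.0):
    """Apply 1D rotary position encoding to an even-length vector at position `pos`."""
    d = len(vec)
    assert d % 2 == 0, "RoPE rotates channel pairs, so the dimension must be even"
    out = []
    for i in range(d // 2):
        # Pair i rotates with frequency base^(-2i/d); higher pairs rotate slower.
        theta = pos * base ** (-2.0 * i / d)
        c, s = math.cos(theta), math.sin(theta)
        x, y = vec[2 * i], vec[2 * i + 1]
        out.extend([x * c - y * s, x * s + y * c])  # 2D rotation of (x, y)
    return out


def dot(a, b):
    """Plain dot product, standing in for an attention logit q . k."""
    return sum(x * y for x, y in zip(a, b))


# Usage: the score for positions (5, 2) matches (13, 10), since both offsets are 3.
q = [1.0, 2.0, 3.0, 4.0]
k = [0.5, -1.0, 2.0, 0.0]
print(dot(rope(q, 5), rope(k, 2)), dot(rope(q, 13), rope(k, 10)))
```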
Source: http://arxiv.org/abs/2410.10815v1