Authors: Haoyi Zhu, Honghui Yang, Yating Wang, Jiange Yang, Limin Wang, Tong He

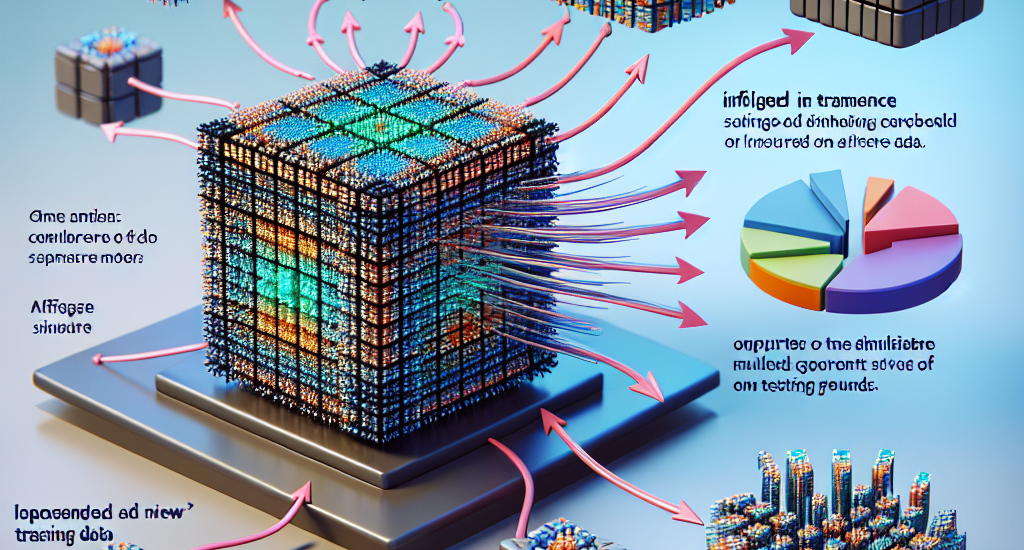

Abstract: In this paper, we introduce SPA, a novel representation learning framework that emphasizes the importance of 3D spatial awareness in embodied AI. Our approach leverages differentiable neural rendering on multi-view images to endow a vanilla Vision Transformer (ViT) with intrinsic spatial understanding. We present the most comprehensive evaluation of embodied representation learning to date, covering 268 tasks across 8 simulators with diverse policies in both single-task and language-conditioned multi-task scenarios. The results are compelling: SPA consistently outperforms more than 10 state-of-the-art representation methods, including those specifically designed for embodied AI, vision-centric tasks, and multi-modal applications, while using less training data. Furthermore, we conduct a series of real-world experiments to confirm its effectiveness in practical scenarios. These results highlight the critical role of 3D spatial awareness for embodied representation learning. Our strongest model takes more than 6000 GPU hours to train and we are committed to open-sourcing all code and model weights to foster future research in embodied representation learning. Project Page: https://haoyizhu.github.io/spa/.

Source: http://arxiv.org/abs/2410.08208v1