Authors: Xiaopan Zhang, Hao Qin, Fuquan Wang, Yue Dong, Jiachen Li

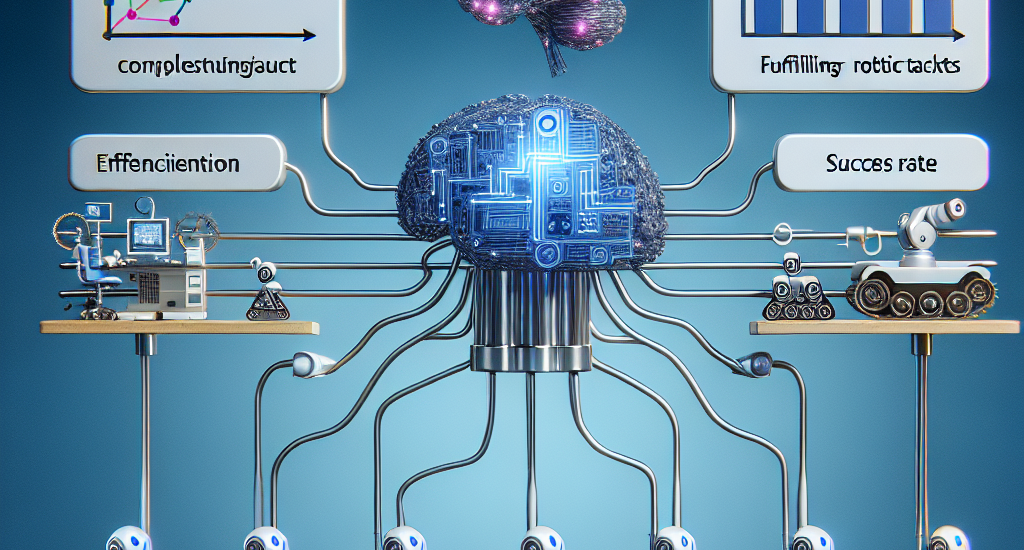

Abstract: Language models (LMs) possess a strong capability to comprehend natural language, making them effective in translating human instructions into detailed plans for simple robot tasks. Nevertheless, it remains a significant challenge to handle long-horizon tasks, especially in subtask identification and allocation for cooperative heterogeneous robot teams. To address this issue, we propose a Language Model-Driven Multi-Agent PDDL Planner (LaMMA-P), a novel multi-agent task planning framework that achieves state-of-the-art performance on long-horizon tasks. LaMMA-P integrates the strengths of the LMs’ reasoning capability and the traditional heuristic search planner to achieve a high success rate and efficiency while demonstrating strong generalization across tasks. Additionally, we create MAT-THOR, a comprehensive benchmark that features household tasks with two different levels of complexity based on the AI2-THOR environment. The experimental results demonstrate that LaMMA-P achieves a 105% higher success rate and 36% higher efficiency than existing LM-based multi-agent planners. The experimental videos, code, and datasets of this work as well as the detailed prompts used in each module are available at https://lamma-p.github.io.
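To make the idea of subtask allocation for a heterogeneous robot team concrete, the following is a minimal illustrative sketch in Python. It is not the LaMMA-P implementation: the `Robot` class, the `allocate` function, the skill names, and the example subtasks are all hypothetical, and a simple greedy skill-matching rule stands in for the LM reasoning and PDDL heuristic search described in the abstract.

```python
from dataclasses import dataclass, field


@dataclass
class Robot:
    """Hypothetical robot description: a name and the skills it supports."""
    name: str
    skills: frozenset[str]
    assigned: list[str] = field(default_factory=list)


def allocate(subtasks: dict[str, set[str]], robots: list[Robot]) -> dict[str, str]:
    """Assign each subtask to the least-loaded robot whose skills cover it.

    This greedy rule is an assumption made for illustration only.
    """
    allocation: dict[str, str] = {}
    for task, required in subtasks.items():
        # Only robots whose skill set contains every required skill qualify.
        capable = [r for r in robots if required <= r.skills]
        if not capable:
            raise ValueError(f"no robot can perform {task!r}")
        # Break ties by current load, so work spreads across the team.
        chosen = min(capable, key=lambda r: len(r.assigned))
        chosen.assigned.append(task)
        allocation[task] = chosen.name
    return allocation


# Hypothetical two-robot team with different capabilities.
robots = [
    Robot("robot1", frozenset({"navigate", "pick", "place"})),
    Robot("robot2", frozenset({"navigate", "open", "toggle"})),
]

# Hypothetical household subtasks and the skills each one requires.
subtasks = {
    "open_fridge": {"navigate", "open"},
    "put_apple_in_fridge": {"navigate", "pick", "place"},
    "turn_off_light": {"navigate", "toggle"},
}

plan = allocate(subtasks, robots)
print(plan)
```

In this toy setting each subtask has exactly one capable robot, so the allocation is forced. With overlapping skills, the load-based tie-break decides, which is where a real planner would instead reason about ordering constraints and plan cost.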

Source: http://arxiv.org/abs/2409.20560v1