Authors: Andrew Goldberg, Kavish Kondap, Tianshuang Qiu, Zehan Ma, Letian Fu, Justin Kerr, Huang Huang, Kaiyuan Chen, Kuan Fang, Ken Goldberg

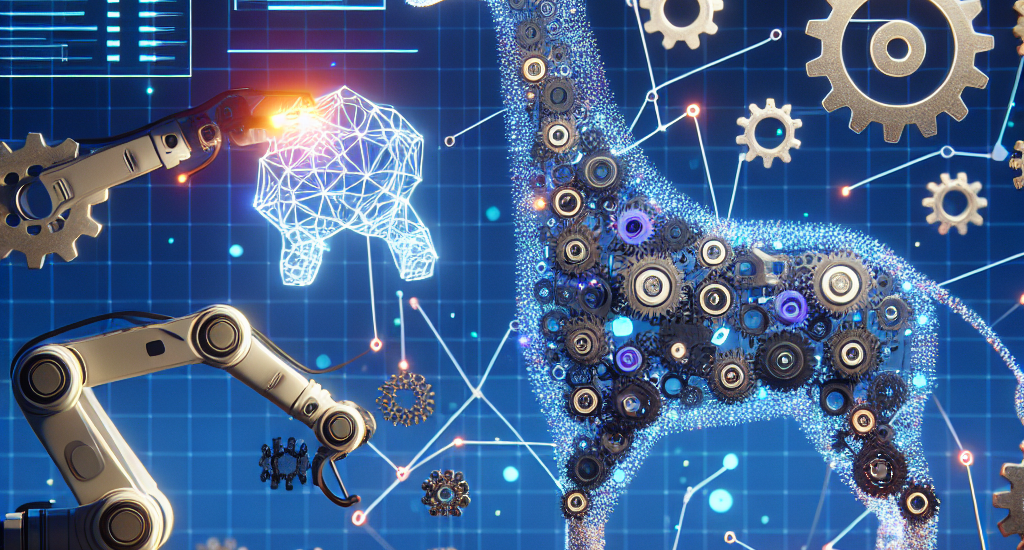

Abstract: Generative AI systems have shown impressive capabilities in creating text,

code, and images. Inspired by the rich history of research in industrial

”Design for Assembly”, we introduce a novel problem: Generative

Design-for-Robot-Assembly (GDfRA). The task is to generate an assembly based on

a natural language prompt (e.g., ”giraffe”) and an image of available

physical components, such as 3D-printed blocks. The output is an assembly, a

spatial arrangement of these components, and instructions for a robot to build

this assembly. The output must 1) resemble the requested object and 2) be

reliably assembled by a 6 DoF robot arm with a suction gripper. We then present

Blox-Net, a GDfRA system that combines generative vision language models with

well-established methods in computer vision, simulation, perturbation analysis,

motion planning, and physical robot experimentation to solve a class of GDfRA

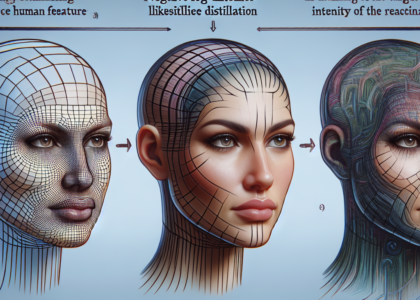

problems with minimal human supervision. Blox-Net achieved a Top-1 accuracy of

63.5% in the ”recognizability” of its designed assemblies (eg, resembling

giraffe as judged by a VLM). These designs, after automated perturbation

redesign, were reliably assembled by a robot, achieving near-perfect success

across 10 consecutive assembly iterations with human intervention only during

reset prior to assembly. Surprisingly, this entire design process from textual

word (”giraffe”) to reliable physical assembly is performed with zero human

intervention.
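As a rough illustration of the recognizability metric, Top-1 accuracy is the fraction of designs for which the judge's single highest-ranked label matches the requested object. The sketch below assumes each VLM judgment has already been reduced to a (requested label, top-ranked guess) pair. The `Judgment` record and `top1_accuracy` helper are hypothetical illustrations, not the Blox-Net evaluation code, and how the VLM is prompted or shown the assembly is not specified here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Judgment:
    # Hypothetical record: the prompt the assembly was designed for (e.g. "giraffe")
    requested: str
    # Hypothetical record: the VLM's highest-ranked label for that assembly
    vlm_top_guess: str


def top1_accuracy(judgments: List[Judgment]) -> float:
    """Fraction of assemblies whose top-ranked label equals the requested label.

    Labels are compared case-insensitively after trimming whitespace.
    """
    if not judgments:
        raise ValueError("top1_accuracy needs at least one judgment")
    hits = sum(
        1
        for j in judgments
        if j.vlm_top_guess.strip().lower() == j.requested.strip().lower()
    )
    return hits / len(judgments)


if __name__ == "__main__":
    # Made-up example data, not results from the paper
    sample = [Judgment("giraffe", "giraffe"), Judgment("cat", "dog")]
    print(top1_accuracy(sample))
```

Under this definition, a Top-1 accuracy of 63.5% means the judge's first choice matched the requested object for 63.5% of the evaluated designs.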

Source: http://arxiv.org/abs/2409.17126v1