Authors: Mohan Shi, Zengrui Jin, Yaoxun Xu, Yong Xu, Shi-Xiong Zhang, Kun Wei, Yiwen Shao, Chunlei Zhang, Dong Yu

Abstract: Recognizing overlapping speech from multiple speakers in conversational

scenarios is one of the most challenging problems for automatic speech

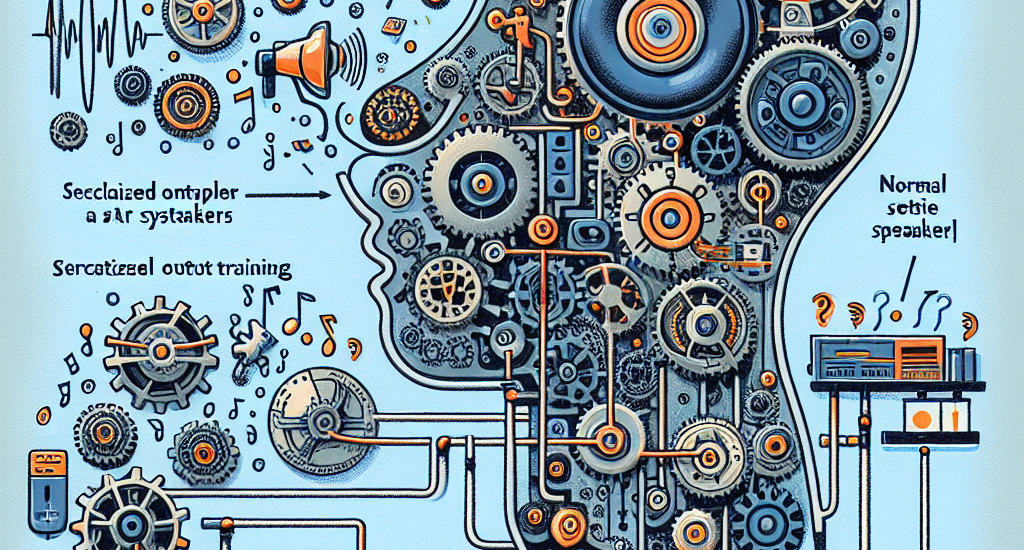

recognition (ASR). Serialized output training (SOT) is a classic method to

address multi-talker ASR, with the idea of concatenating transcriptions from

multiple speakers according to the emission times of their speech for training.

However, SOT-style transcriptions, derived from concatenating multiple related

utterances in a conversation, depend significantly on modeling long contexts.

Therefore, compared to traditional methods that primarily emphasize encoder

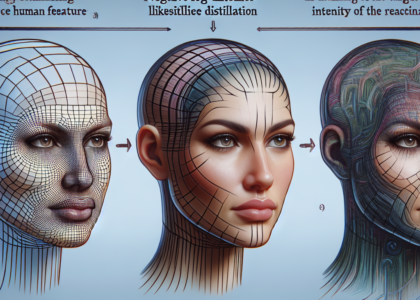

performance in attention-based encoder-decoder (AED) architectures, a novel

approach utilizing large language models (LLMs) that leverages the capabilities

of pre-trained decoders may be better suited for such complex and challenging

scenarios. In this paper, we propose an LLM-based SOT approach for multi-talker

ASR, leveraging a pre-trained speech encoder and LLM and fine-tuning them on

multi-talker datasets using appropriate strategies. Experimental results

demonstrate that our approach surpasses traditional AED-based methods on the

simulated dataset LibriMix and achieves state-of-the-art performance on the

evaluation set of the real-world dataset AMI, outperforming the AED model

trained with 1000 times more supervised data in previous works.
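
As an illustration of the SOT idea described in the abstract (concatenating transcriptions from multiple speakers according to the emission times of their speech), the following is a minimal sketch of how a training target might be assembled. The `Utterance` type, the `build_sot_target` helper, and the `<sc>` speaker-change separator are assumptions made for this example; the abstract does not specify the token or data format the authors use.

```python
from dataclasses import dataclass
from typing import List

# Assumed separator token marking a change of speaker; not given in the abstract.
SPEAKER_CHANGE = "<sc>"


@dataclass
class Utterance:
    """Hypothetical container for one speaker's utterance in a mixture."""
    speaker: str
    start: float  # emission (start) time in seconds
    text: str


def build_sot_target(utterances: List[Utterance]) -> str:
    """Concatenate transcriptions in order of emission time, SOT-style."""
    ordered = sorted(utterances, key=lambda u: u.start)
    return f" {SPEAKER_CHANGE} ".join(u.text for u in ordered)


# Two overlapping utterances, listed out of order on purpose.
mixture = [
    Utterance("spk2", 1.4, "i think so too"),
    Utterance("spk1", 0.0, "shall we start the meeting"),
]
print(build_sot_target(mixture))
```

Because the target is a single serialized string spanning several related utterances, the decoder has to model a longer context than in single-talker ASR, which is the motivation the abstract gives for using a pre-trained LLM as the decoder.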

Source: http://arxiv.org/abs/2408.17431v1