Authors: Mustafa Omer Gul, Yoav Artzi

Abstract: Systems with both language comprehension and generation capabilities can

benefit from the tight connection between the two. This work studies coupling

comprehension and generation with focus on continually learning from

interaction with users. We propose techniques to tightly integrate the two

capabilities for both learning and inference. We situate our studies in

two-player reference games, and deploy various models for thousands of

interactions with human users, while learning from interaction feedback

signals. We show dramatic improvements in performance over time, with

comprehension-generation coupling leading to performance improvements up to 26%

in absolute terms and up to 17% higher accuracies compared to a non-coupled

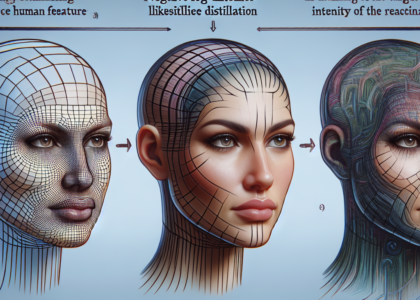

system. Our analysis also shows coupling has substantial qualitative impact on

the system’s language, making it significantly more human-like.

Source: http://arxiv.org/abs/2408.15992v1