Authors: Yuxiao Qu, Tianjun Zhang, Naman Garg, Aviral Kumar

Abstract: A central piece in enabling intelligent agentic behavior in foundation models

is to make them capable of introspecting upon their behavior, reasoning, and

correcting their mistakes as more computation or interaction is available. Even

the strongest proprietary large language models (LLMs) do not quite exhibit the

ability of continually improving their responses sequentially, even in

scenarios where they are explicitly told that they are making a mistake. In

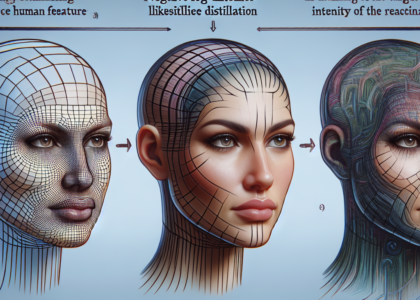

this paper, we develop RISE: Recursive IntroSpEction, an approach for

fine-tuning LLMs to introduce this capability, despite prior work hypothesizing

that this capability may not be possible to attain. Our approach prescribes an

iterative fine-tuning procedure, which attempts to teach the model how to alter

its response after having executed previously unsuccessful attempts to solve a

hard test-time problem, with optionally additional environment feedback. RISE

poses fine-tuning for a single-turn prompt as solving a multi-turn Markov

decision process (MDP), where the initial state is the prompt. Inspired by

principles in online imitation learning and reinforcement learning, we propose

strategies for multi-turn data collection and training so as to imbue an LLM

with the capability to recursively detect and correct its previous mistakes in

subsequent iterations. Our experiments show that RISE enables Llama2, Llama3,

and Mistral models to improve themselves with more turns on math reasoning

tasks, outperforming several single-turn strategies given an equal amount of

inference-time computation. We also find that RISE scales well, often attaining

larger benefits with more capable models. Our analysis shows that RISE makes

meaningful improvements to responses to arrive at the correct solution for

challenging prompts, without disrupting one-turn abilities as a result of

expressing more complex distributions.
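
The multi-turn MDP framing described in the abstract can be illustrated with a small sketch. This is not the authors' implementation: the `State` class, the `rollout` function, the feedback string, the 0/1 reward, the early stop on success, and the toy policy are all hypothetical, chosen only to show how a single-turn prompt could become the initial state of a multi-turn episode in which each new response is conditioned on earlier failed attempts.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class State:
    """Hypothetical MDP state: the prompt plus all earlier (attempt, feedback) pairs."""
    prompt: str
    history: List[Tuple[str, str]] = field(default_factory=list)


def rollout(
    prompt: str,
    policy: Callable[[State], str],
    is_correct: Callable[[str], bool],
    max_turns: int = 3,
) -> List[Tuple[List[Tuple[str, str]], str, float]]:
    """Run one multi-turn episode and return (history snapshot, response, reward) tuples.

    Assumptions of this sketch: reward is 1.0 for a correct response and 0.0
    otherwise, and the episode ends at the first correct response.
    """
    state = State(prompt)  # the initial state is just the prompt
    transitions = []
    for _ in range(max_turns):
        response = policy(state)  # the action is a full response
        reward = 1.0 if is_correct(response) else 0.0
        transitions.append((list(state.history), response, reward))
        if reward == 1.0:
            break
        # The next state appends the failed attempt and (optional) feedback.
        state.history.append((response, "The previous answer is incorrect."))
    return transitions


def toy_policy(state: State) -> str:
    """Stand-in for an LLM: guesses 3, then 4, then 5, ... on successive turns."""
    return str(3 + len(state.history))


trajectory = rollout("2 + 3 = ?", toy_policy, lambda r: r == "5", max_turns=5)
for history, response, reward in trajectory:
    print(len(history), response, reward)
```

In this toy run the policy is wrong on the first two turns and correct on the third, so the episode yields three transitions. Data of this shape (earlier attempts paired with an improved response) is the kind of multi-turn signal the abstract refers to, though the paper's actual data collection and training strategies are not specified here.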

Source: http://arxiv.org/abs/2407.18219v1