Authors: Markus Iser

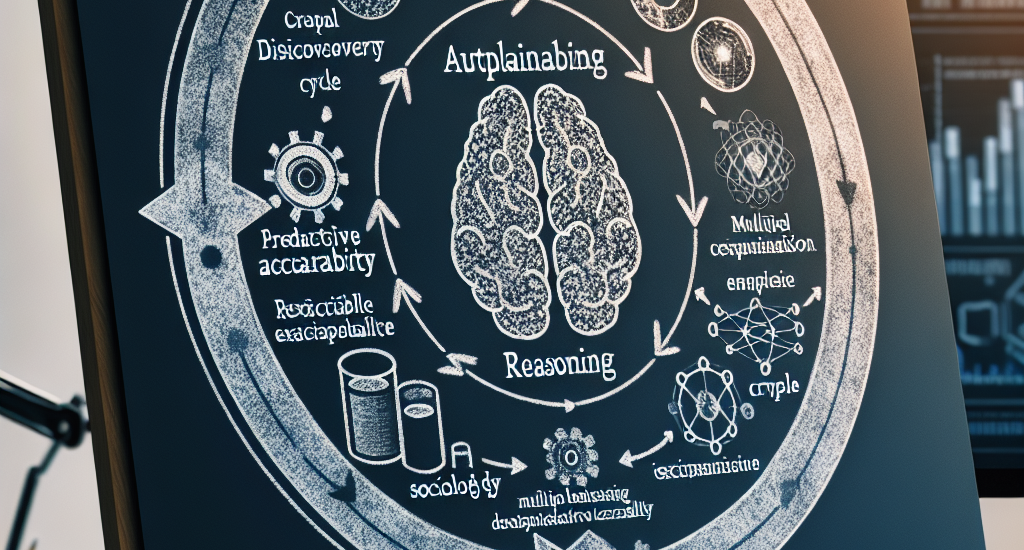

Abstract: Automated reasoning is a key technology in the young but rapidly growing
field of Explainable Artificial Intelligence (XAI). Explainability helps build
trust in artificial intelligence systems beyond their mere predictive accuracy
and robustness. In this paper, we propose a cycle of scientific discovery that
combines machine learning with automated reasoning for the generation and
selection of explanations. We present a taxonomy of explanation selection
problems that draws on insights from sociology and cognitive science. These
selection criteria subsume existing notions and extend them with new
properties.

Source: http://arxiv.org/abs/2407.17454v1