Authors: Xinjie Liu, Cyrus Neary, Kushagra Gupta, Christian Ellis, Ufuk Topcu, David Fridovich-Keil

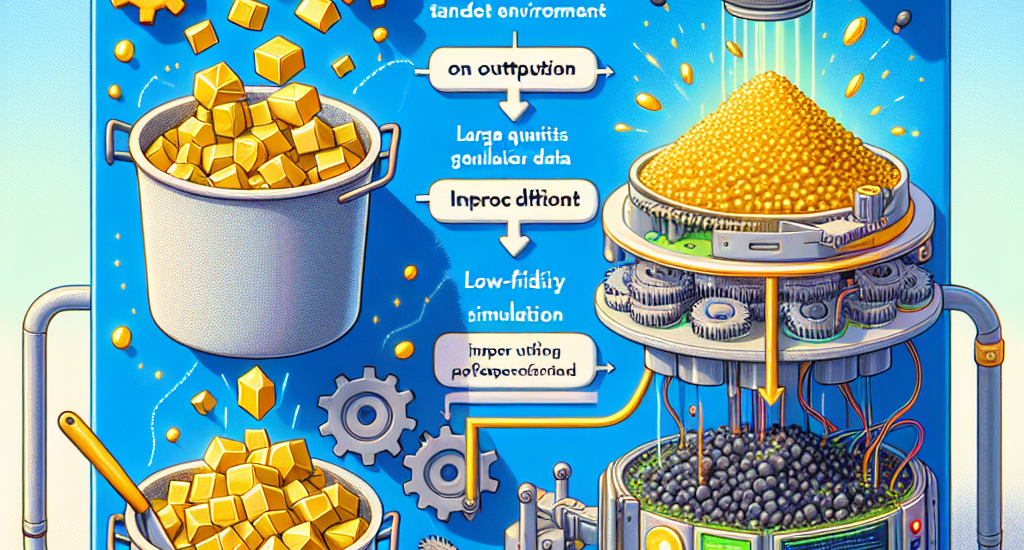

Abstract: Many reinforcement learning (RL) algorithms require large amounts of data,

prohibiting their use in applications where frequent interactions with

operational systems are infeasible, or high-fidelity simulations are expensive

or unavailable. Meanwhile, low-fidelity simulators–such as reduced-order

models, heuristic reward functions, or generative world models–can cheaply

provide useful data for RL training, even if they are too coarse for direct

sim-to-real transfer. We propose multi-fidelity policy gradients (MFPGs), an RL

framework that mixes a small amount of data from the target environment with a

large volume of low-fidelity simulation data to form unbiased, reduced-variance

estimators (control variates) for on-policy policy gradients. We instantiate

the framework by developing multi-fidelity variants of two policy gradient

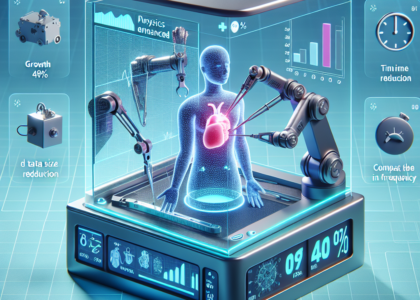

algorithms: REINFORCE and proximal policy optimization. Experimental results

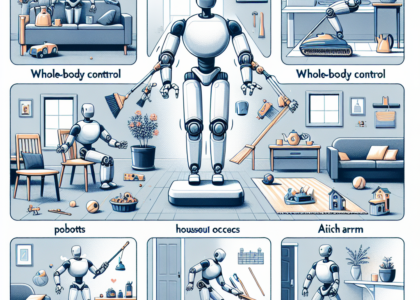

across a suite of simulated robotics benchmark problems demonstrate that when

target-environment samples are limited, MFPG achieves up to 3.9x higher reward

and improves training stability when compared to baselines that only use

high-fidelity data. Moreover, even when the baselines are given more

high-fidelity samples–up to 10x as many interactions with the target

environment–MFPG continues to match or outperform them. Finally, we observe

that MFPG is capable of training effective policies even when the low-fidelity

environment is drastically different from the target environment. MFPG thus not

only offers a novel paradigm for efficient sim-to-real transfer but also

provides a principled approach to managing the trade-off between policy

performance and data collection costs.
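The control-variate idea in the abstract can be illustrated with a minimal sketch of a generic multi-fidelity REINFORCE gradient estimate on a one-step toy problem. This is not the authors' MFPG estimator. It assumes a Gaussian policy, two hand-picked quadratic rewards standing in for the target environment and a coarse low-fidelity simulator, and "paired" samples in which the same actions are scored under both rewards so that the two gradient terms are correlated. All names (`reinforce_grads`, `plain_gradient`, `mf_gradient`, `compare`, `HI_OPT`, `LO_OPT`) are hypothetical.

```python
import random
import statistics

THETA, SIGMA = 0.0, 1.0    # policy: a ~ Normal(THETA, SIGMA^2)
HI_OPT, LO_OPT = 1.0, 0.8  # hypothetical reward optima: target env vs. coarse simulator


def reinforce_grads(actions, optimum):
    """Per-action REINFORCE terms r(a) * d/dtheta log pi(a), with r(a) = -(a - optimum)^2."""
    return [-(a - optimum) ** 2 * (a - THETA) / SIGMA**2 for a in actions]


def plain_gradient(n_hi, rng):
    """Baseline: average REINFORCE terms from target-environment samples only."""
    actions = [rng.gauss(THETA, SIGMA) for _ in range(n_hi)]
    return statistics.fmean(reinforce_grads(actions, HI_OPT))


def mf_gradient(n_hi, n_lo, rng):
    """Control-variate estimate from n_hi paired samples plus n_lo cheap samples."""
    paired = [rng.gauss(THETA, SIGMA) for _ in range(n_hi)]
    extra = [rng.gauss(THETA, SIGMA) for _ in range(n_lo)]
    g_hi = reinforce_grads(paired, HI_OPT)       # expensive: target environment
    g_lo = reinforce_grads(paired, LO_OPT)       # cheap: same actions, coarse reward
    g_lo_extra = reinforce_grads(extra, LO_OPT)  # cheap: simulator only
    m_hi, m_lo = statistics.fmean(g_hi), statistics.fmean(g_lo)
    # Coefficient c = Cov(g_hi, g_lo) / Var(g_lo), fit on the paired data
    # (close to variance-optimal when n_lo >> n_hi). Fitting c on the same
    # samples adds a small O(1/n_hi) bias; a fixed c keeps it exactly unbiased.
    cov = sum((x - m_hi) * (y - m_lo) for x, y in zip(g_hi, g_lo)) / (n_hi - 1)
    c = cov / statistics.variance(g_lo)
    # Both low-fidelity means estimate the same expectation, so the correction
    # has zero mean for fixed c: it cancels noise without importing simulator bias.
    return m_hi + c * (statistics.fmean(g_lo_extra) - m_lo)


def compare(n_trials=300, n_hi=20, n_lo=1000, seed=0):
    """Return (mean, variance) of the plain and multi-fidelity estimators over repeated trials."""
    rng = random.Random(seed)
    plain = [plain_gradient(n_hi, rng) for _ in range(n_trials)]
    mf = [mf_gradient(n_hi, n_lo, rng) for _ in range(n_trials)]
    return (statistics.fmean(plain), statistics.variance(plain),
            statistics.fmean(mf), statistics.variance(mf))


if __name__ == "__main__":
    # Exact target-environment gradient at THETA = 0 is -2 * (THETA - HI_OPT) = 2.0.
    print(compare())
```

In this toy, the low-fidelity reward peaks at 0.8 instead of 1.0, so its own gradient at θ = 0 is 1.6 rather than the target value of 2.0. The correction term uses only the difference between two low-fidelity averages, so the estimate stays centered near the target gradient while its variance shrinks in proportion to how strongly the paired terms are correlated.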

Source: http://arxiv.org/abs/2503.05696v1