Authors: Yuhao Wu, Yushi Bai, Zhiqing Hu, Shangqing Tu, Ming Shan Hee, Juanzi Li, Roy Ka-Wei Lee

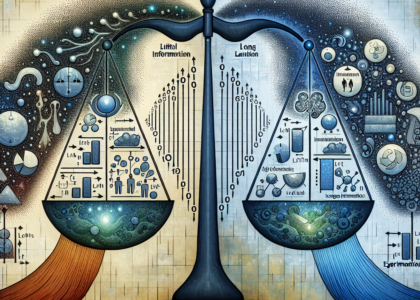

Abstract: Recent advancements in long-context Large Language Models (LLMs) have primarily concentrated on processing extended input contexts, resulting in significant strides in long-context comprehension. However, the equally critical aspect of generating long-form outputs has received comparatively less attention. This paper advocates for a paradigm shift in NLP research toward addressing the challenges of long-output generation. Tasks such as novel writing, long-term planning, and complex reasoning require models to understand extensive contexts and produce coherent, contextually rich, and logically consistent extended text. These demands highlight a critical gap in current LLM capabilities. We underscore the importance of this under-explored domain and call for focused efforts to develop foundational LLMs tailored for generating high-quality, long-form outputs, which hold immense potential for real-world applications.

Source: http://arxiv.org/abs/2503.04723v1