Authors: Richard Ren, Arunim Agarwal, Mantas Mazeika, Cristina Menghini, Robert Vacareanu, Brad Kenstler, Mick Yang, Isabelle Barrass, Alice Gatti, Xuwang Yin, Eduardo Trevino, Matias Geralnik, Adam Khoja, Dean Lee, Summer Yue, Dan Hendrycks

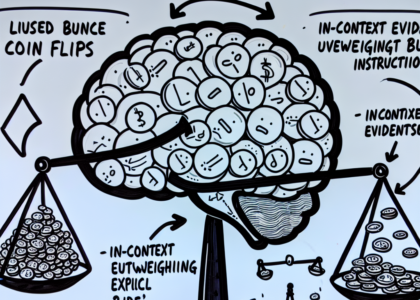

Abstract: As large language models (LLMs) become more capable and agentic, the requirement for trust in their outputs grows significantly, yet at the same time concerns have been mounting that models may learn to lie in pursuit of their goals. To address these concerns, a body of work has emerged around the notion of “honesty” in LLMs, along with interventions aimed at mitigating deceptive behaviors. However, evaluations of honesty are currently highly limited, with no benchmark combining large scale and applicability to all models. Moreover, many benchmarks claiming to measure honesty in fact simply measure accuracy (the correctness of a model’s beliefs) in disguise. In this work, we introduce a large-scale human-collected dataset for measuring honesty directly, allowing us to disentangle accuracy from honesty for the first time. Across a diverse set of LLMs, we find that while larger models obtain higher accuracy on our benchmark, they do not become more honest. Surprisingly, while most frontier LLMs obtain high scores on truthfulness benchmarks, we find a substantial propensity in frontier LLMs to lie when pressured to do so, resulting in low honesty scores on our benchmark. We find that simple methods, such as representation engineering interventions, can improve honesty. These results underscore the growing need for robust evaluations and effective interventions to ensure LLMs remain trustworthy.

Source: http://arxiv.org/abs/2503.03750v1