Authors: Amal Shaheen, Nairouz Mrabah, Riadh Ksantini, Abdulla Alqaddoumi

Abstract: The recent advances in deep clustering have been made possible by significant

progress in self-supervised and pseudo-supervised learning. However, the

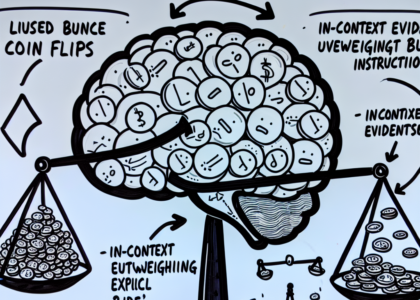

trade-off between self-supervision and pseudo-supervision can give rise to

three primary issues. The joint training causes Feature Randomness and Feature

Drift, whereas the independent training causes Feature Randomness and Feature

Twist. In essence, using pseudo-labels generates random and unreliable

features. The combination of pseudo-supervision and self-supervision drifts the

reliable clustering-oriented features. Moreover, moving from self-supervision

to pseudo-supervision can twist the curved latent manifolds. This paper

addresses the limitations of existing deep clustering paradigms concerning

Feature Randomness, Feature Drift, and Feature Twist. We propose a new paradigm

with a new strategy that replaces pseudo-supervision with a second round of

self-supervision training. The new strategy makes the transition between

instance-level self-supervision and neighborhood-level self-supervision

smoother and less abrupt. Moreover, it prevents the drifting effect that is

caused by the strong competition between instance-level self-supervision and

clustering-level pseudo-supervision. Moreover, the absence of the

pseudo-supervision prevents the risk of generating random features. With this

novel approach, our paper introduces a Rethinking of the Deep Clustering

Paradigms, denoted by R-DC. Our model is specifically designed to address three

primary challenges encountered in Deep Clustering: Feature Randomness, Feature

Drift, and Feature Twist. Experimental results conducted on six datasets have

shown that the two-level self-supervision training yields substantial

improvements.

Source: http://arxiv.org/abs/2503.03733v1