Authors: Yuqi Zhou, Shuai Wang, Sunhao Dai, Qinglin Jia, Zhaocheng Du, Zhenhua Dong, Jun Xu

Abstract: The advancement of visual language models (VLMs) has enhanced mobile device

operations, allowing simulated human-like actions to address user requirements.

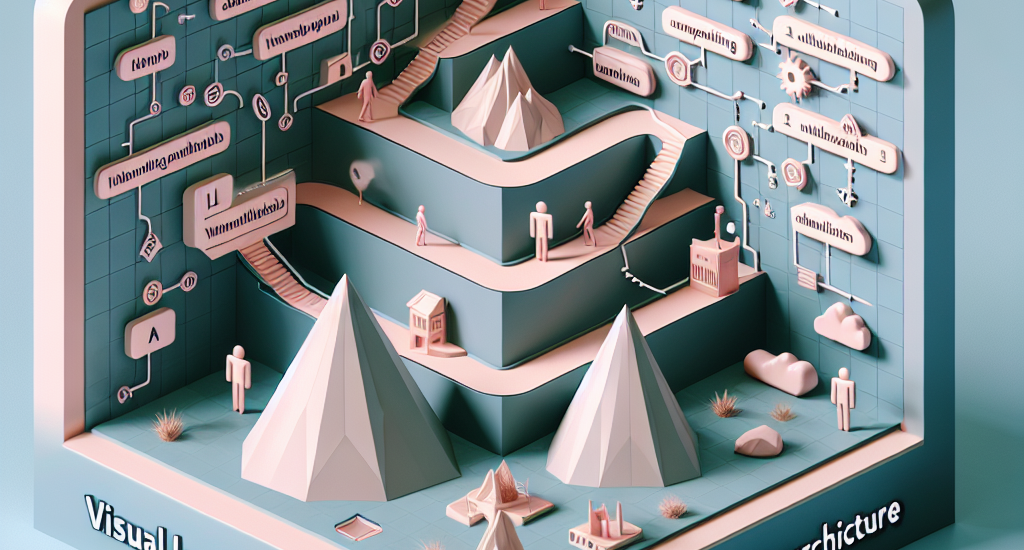

Current VLM-based mobile operating assistants can be structured into three

levels: task, subtask, and action. The subtask level, linking high-level goals

with low-level executable actions, is crucial for task completion but faces two

challenges: ineffective subtasks that lower-level agent cannot execute and

inefficient subtasks that fail to contribute to the completion of the

higher-level task. These challenges stem from VLM’s lack of experience in

decomposing subtasks within GUI scenarios in multi-agent architecture. To

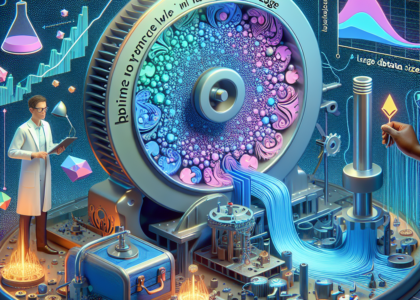

address these, we propose a new mobile assistant architecture with constrained

high-frequency o}ptimized planning (CHOP). Our approach overcomes the VLM’s

deficiency in GUI scenarios planning by using human-planned subtasks as the

basis vector. We evaluate our architecture in both English and Chinese contexts

across 20 Apps, demonstrating significant improvements in both effectiveness

and efficiency. Our dataset and code is available at

https://github.com/Yuqi-Zhou/CHOP

Source: http://arxiv.org/abs/2503.03743v1