Authors: Han Xue, Jieji Ren, Wendi Chen, Gu Zhang, Yuan Fang, Guoying Gu, Huazhe Xu, Cewu Lu

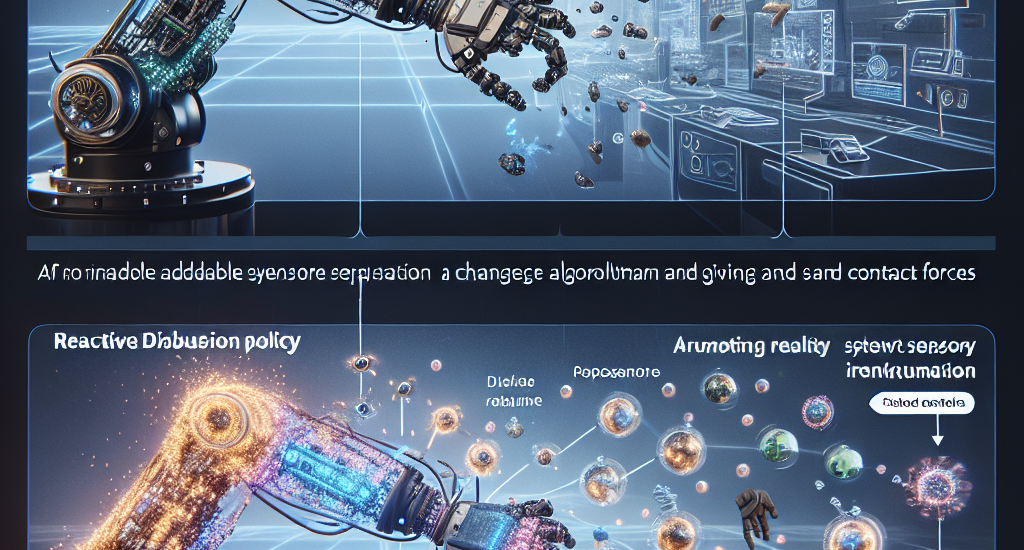

Abstract: Humans can accomplish complex contact-rich tasks using vision and touch, with highly reactive capabilities such as quick adjustments to environmental changes and adaptive control of contact forces; however, this remains challenging for robots. Existing visual imitation learning (IL) approaches rely on action chunking to model complex behaviors, which prevents them from responding instantly to real-time tactile feedback during chunk execution. Furthermore, most teleoperation systems struggle to provide fine-grained tactile/force feedback, which limits the range of tasks that can be performed. To address these challenges, we introduce TactAR, a low-cost teleoperation system that provides real-time tactile feedback through Augmented Reality (AR), along with Reactive Diffusion Policy (RDP), a novel slow-fast visual-tactile imitation learning algorithm for learning contact-rich manipulation skills. RDP employs a two-level hierarchy: (1) a slow latent diffusion policy that predicts high-level action chunks in latent space at low frequency, and (2) a fast asymmetric tokenizer for closed-loop tactile feedback control at high frequency. This design enables both complex trajectory modeling and quick reactive behavior within a unified framework. Through extensive evaluation across three challenging contact-rich tasks, RDP significantly improves performance compared to state-of-the-art visual IL baselines by responding rapidly to tactile/force feedback. Furthermore, experiments show that RDP is applicable across different tactile/force sensors. Code and videos are available at https://reactive-diffusion-policy.github.io/.
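
The slow-fast control pattern described above can be illustrated with a minimal sketch. This is not the authors' implementation. The class `SlowFastController` and the toy callables `toy_slow` and `toy_fast` are hypothetical. They stand in for the latent diffusion policy (slow level) and the asymmetric tokenizer's decoder (fast level). The point is only the control-flow shape: the slow level re-plans a chunk of latent actions once every `chunk_len` steps, while the fast level decodes every step using the freshest tactile reading. Contact changes can therefore alter actions inside a chunk instead of waiting for the next re-plan.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

Latent = List[float]
Action = List[float]


@dataclass
class SlowFastController:
    """Hypothetical slow-fast loop (illustration only, not RDP's code)."""
    # Slow level: observation -> chunk of latent actions (stand-in for a latent diffusion policy).
    slow_policy: Callable[[Sequence[float]], List[Latent]]
    # Fast level: (latent, latest tactile reading) -> action (stand-in for a tokenizer decoder).
    fast_decoder: Callable[[Latent, Sequence[float]], Action]
    chunk_len: int

    def run(self, get_obs, get_tactile, steps: int) -> List[Action]:
        actions: List[Action] = []
        chunk: List[Latent] = []
        for t in range(steps):
            idx = t % self.chunk_len
            if idx == 0:
                # Low-frequency step: re-plan a new latent chunk.
                chunk = self.slow_policy(get_obs(t))
                if len(chunk) < self.chunk_len:
                    raise ValueError("slow_policy returned a chunk shorter than chunk_len")
            # High-frequency step: decode with the current tactile signal.
            actions.append(self.fast_decoder(chunk[idx], get_tactile(t)))
        return actions


# Toy usage (hypothetical models): latents are target positions, and the
# fast decoder backs off in proportion to the sensed contact force.
def toy_slow(obs: Sequence[float]) -> List[Latent]:
    return [[obs[0] + i] for i in range(4)]


def toy_fast(latent: Latent, tactile: Sequence[float]) -> Action:
    return [latent[0] - 0.5 * tactile[0]]


ctrl = SlowFastController(toy_slow, toy_fast, chunk_len=4)
acts = ctrl.run(
    get_obs=lambda t: [float(t)],
    get_tactile=lambda t: [2.0 if t >= 2 else 0.0],  # contact begins at step 2
    steps=6,
)
print(acts)  # [[0.0], [1.0], [1.0], [2.0], [3.0], [4.0]]
```

In this toy run, contact starting at step 2 changes the action immediately, in the middle of the first chunk. A pure action-chunking policy would instead replay the pre-planned chunk until step 4.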

Source: http://arxiv.org/abs/2503.02881v1