Authors: Ethan Mendes, Alan Ritter

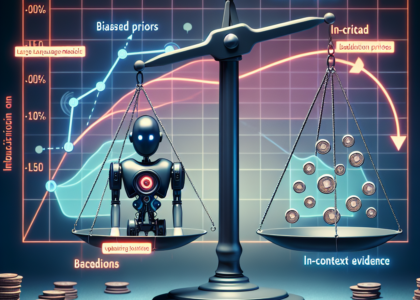

Abstract: Collecting ground-truth task completion rewards or human demonstrations for multi-step reasoning tasks is often cost-prohibitive and time-consuming, especially in interactive domains like web tasks. To address this bottleneck, we present self-taught lookahead, a self-supervised method that leverages state-transition dynamics to train a value model capable of effectively guiding language model-controlled search. We find that moderately sized (8 billion parameters) open-weight value models improved with self-taught lookahead can match the performance of using a frontier LLM such as gpt-4o as the value model. Furthermore, we find that self-taught lookahead improves performance by 20% while reducing costs by 37x compared to previous LLM-based tree search, without relying on ground-truth rewards.
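The abstract does not spell out the training procedure, so the following is only a rough intuition for how state-transition dynamics can supply a training signal without ground-truth rewards. It is a minimal sketch of generic one-step lookahead bootstrapping, not the authors' method: the value model is replaced by a lookup table, the environment is a hypothetical toy graph (`TOY_TRANSITIONS`), and the initial estimates (`TOY_INITIAL`), the discount `gamma`, and the helper names `lookahead_targets` and `self_improve` are all illustrative assumptions. Each round, the current model scores the successors of every state, and the next model is fit to those lookahead scores.

```python
from typing import Callable, Dict, List

# Hypothetical toy environment: each state maps to the states reachable in one step.
# An empty list marks a terminal state.
Transitions = Dict[str, List[str]]
ValueFn = Callable[[str], float]


def lookahead_targets(states: List[str], transitions: Transitions,
                      value: ValueFn, gamma: float = 0.9) -> Dict[str, float]:
    """Build training targets by one-step lookahead with the current value model.

    For a non-terminal state, the target is gamma times the best value the
    current model assigns to any successor; terminal states keep the model's
    own estimate. No ground-truth reward is consulted.
    """
    targets: Dict[str, float] = {}
    for s in states:
        successors = transitions.get(s, [])
        if successors:
            targets[s] = gamma * max(value(nxt) for nxt in successors)
        else:
            targets[s] = value(s)
    return targets


def self_improve(transitions: Transitions, initial: Dict[str, float],
                 rounds: int = 3, gamma: float = 0.9) -> Dict[str, float]:
    """Iteratively refit a tabular stand-in value model to its own lookahead targets."""
    table = dict(initial)
    states = list(transitions)
    for _ in range(rounds):
        # Freeze the current model, then fit the next one to its lookahead targets.
        # With a lookup table, "fitting" is simply replacing the stored values.
        current = dict(table)
        table = lookahead_targets(states, transitions, lambda s: current[s], gamma)
    return table


# Assumed toy example: "start" can reach a promising branch "a" or a dead end "b".
TOY_TRANSITIONS: Transitions = {
    "start": ["a", "b"],
    "a": ["goal"],
    "b": ["dead"],
    "goal": [],
    "dead": [],
}
# The initial model's guesses: uninformative at "start", "a", and "b",
# but it already judges the two terminal states differently.
TOY_INITIAL: Dict[str, float] = {
    "start": 0.5, "a": 0.5, "b": 0.5, "goal": 1.0, "dead": 0.0,
}

RESULT = self_improve(TOY_TRANSITIONS, TOY_INITIAL, rounds=3)
print(RESULT)
```

After a few rounds, the model's judgment of the terminal states propagates backward: "a" rises to about 0.9, "b" drops to 0.0, and "start" settles near 0.81, so a search guided by the refined table would prefer the branch through "a". In the setting the abstract describes, the table would be an LLM-based value model and the refit step a fine-tuning run; those details are not given here and are not modeled by this sketch.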

Source: http://arxiv.org/abs/2503.02878v1