Authors: Zongkai Zhao, Guozeng Xu, Xiuhua Li, Kaiwen Wei, Jiang Zhong

Abstract: Locate-then-Edit Knowledge Editing (LEKE) is a key technique for updating

large language models (LLMs) without full retraining. However, existing methods

assume a single-user setting and become inefficient in real-world multi-client

scenarios, where decentralized organizations (e.g., hospitals, financial

institutions) independently update overlapping knowledge, leading to redundant

mediator knowledge vector (MKV) computations and privacy concerns. To address

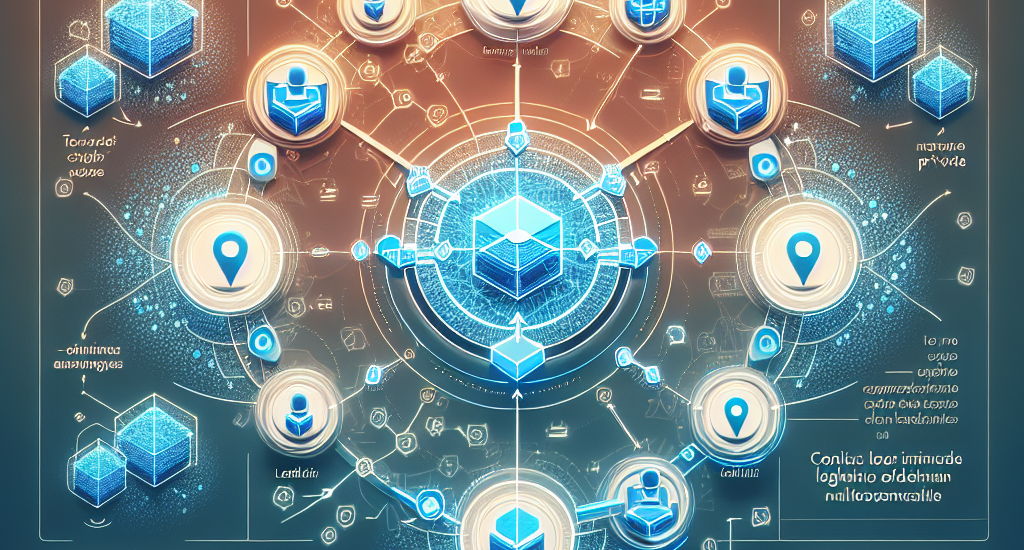

these challenges, we introduce Federated Locate-then-Edit Knowledge Editing

(FLEKE), a novel task that enables multiple clients to collaboratively perform

LEKE while preserving privacy and reducing computational overhead. To achieve

this, we propose FedEdit, a two-stage framework that optimizes MKV selection

and reuse. In the first stage, clients locally apply LEKE and upload the

computed MKVs. In the second stage, rather than relying solely on server-based

MKV sharing, FLEKE allows clients to retrieve relevant MKVs based on cosine

similarity, enabling knowledge re-edit and minimizing redundant computations.
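
The retrieval step in the second stage can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not say which vectors are compared, so the sketch assumes each uploaded MKV is stored with a key vector describing its edit, and that a client reuses the stored MKV whose key is most similar to its own query. The names `cosine_similarity`, `retrieve_mkv`, `shared_pool`, and the `threshold` value are all hypothetical.

```python
import math
from typing import List, Optional, Tuple

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as similar to nothing
    return dot / (norm_a * norm_b)


def retrieve_mkv(
    query_key: Vector,
    shared_pool: List[Tuple[Vector, Vector]],
    threshold: float = 0.9,  # hypothetical cutoff, not taken from the paper
) -> Optional[Tuple[float, Vector]]:
    """Pick the most similar shared MKV, or None if nothing clears the threshold.

    shared_pool holds (key_vector, mkv) pairs uploaded by other clients
    (an assumed layout). Returning None means the client would compute
    the MKV locally instead of reusing one.
    """
    best: Optional[Tuple[float, Vector]] = None
    for key, mkv in shared_pool:
        sim = cosine_similarity(query_key, key)
        if sim >= threshold and (best is None or sim > best[0]):
            best = (sim, mkv)
    return best


# Toy pool with two uploaded entries.
pool = [
    ([1.0, 0.0], [0.5, 0.5]),
    ([0.0, 1.0], [0.1, 0.9]),
]
hit = retrieve_mkv([0.9, 0.1], pool)   # close to the first key, so it is reused
miss = retrieve_mkv([1.0, 1.0], pool)  # equally far from both keys, below threshold
```

In this sketch a hit lets the client skip recomputing the MKV, which is the redundancy the abstract describes; a miss falls back to local LEKE.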

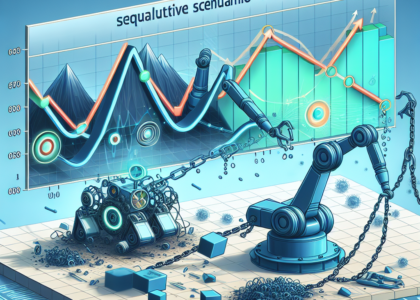

Experimental results on two benchmark datasets demonstrate that FedEdit retains

over 96% of the performance of non-federated LEKE while outperforming a

FedAvg-based baseline by approximately a factor of two. In addition, we

find that MEMIT performs more consistently than PMET in the FLEKE task with our

FedEdit framework. Our code is available at https://github.com/zongkaiz/FLEKE.

Source: http://arxiv.org/abs/2502.15677v1