Authors: Junpeng Zhang, Lei Cheng, Qing Li, Liang Lin, Quanshi Zhang

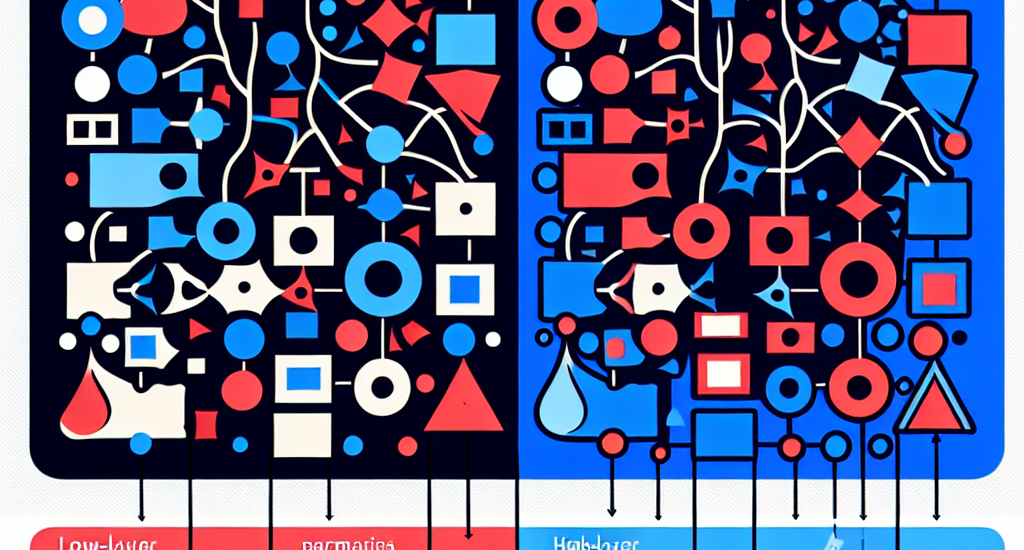

Abstract: In this paper, we find that the complexity of the interactions encoded by a deep neural network (DNN) can explain its generalization power. We also discover that the confusing samples of a DNN, which are represented by non-generalizable interactions, are determined by its low-layer parameters. In comparison, other factors, such as high-layer parameters and network architecture, have much less impact on the composition of confusing samples. Two DNNs with different low-layer parameters usually have completely different sets of confusing samples, even though they achieve similar performance. This finding extends the understanding of the lottery ticket hypothesis and explains the distinctive representation power of different DNNs.

Source: http://arxiv.org/abs/2502.08625v1