Authors: Mengchen Fan, Keren Li, Tianyun Zhang, Qing Tian, Baocheng Geng

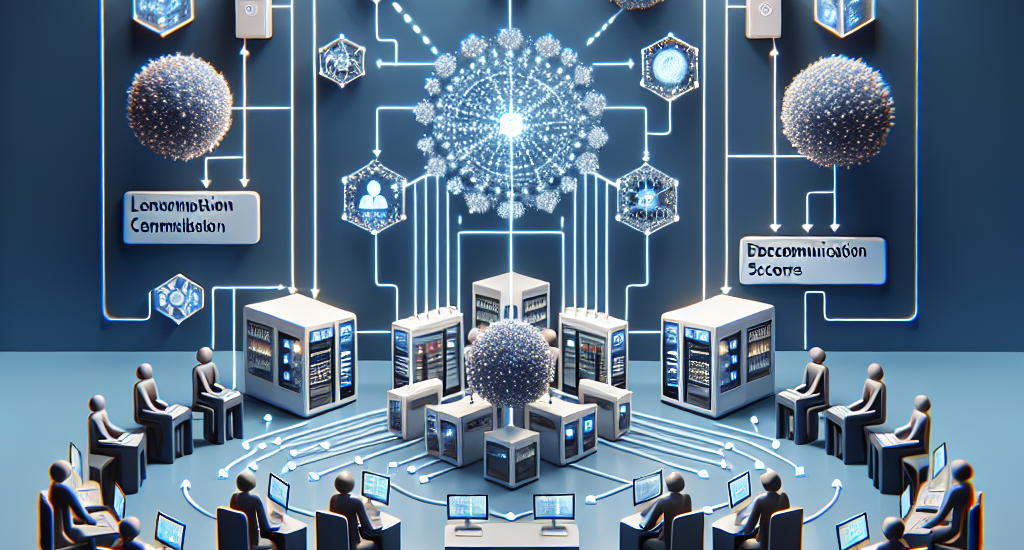

Abstract: Distributed Learning (DL) enables the training of machine learning models across multiple devices, yet it faces challenges such as non-IID data distributions and disparities in device capability, which can impede training efficiency. Communication bottlenecks further complicate traditional Federated Learning (FL) setups. To mitigate these issues, we introduce the Personalized Federated Learning with Decentralized Selection Training (PFedDST) framework. PFedDST enhances model training by allowing devices to strategically evaluate and select peers based on a communication score that integrates loss, task similarity, and selection frequency, so that each device connects to the peers most useful to it. This selection strategy is designed to increase local personalization and promote beneficial peer collaborations, strengthening the stability and efficiency of the training process. Our experiments demonstrate that PFedDST not only improves model accuracy but also accelerates convergence. The approach outperforms state-of-the-art methods in handling data heterogeneity, delivering faster and more effective training in diverse, decentralized systems.
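The abstract names the three ingredients of the communication score (loss, task similarity, selection frequency) but not how they are combined. The sketch below is a minimal illustration under stated assumptions, not the authors' formula: it assumes a linear combination with hypothetical weights `alpha`, `beta`, `gamma`, uses cosine similarity as one possible task-similarity measure, and ranks peers to pick the top `k`. The names `PeerStats`, `communication_score`, and `select_peers` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class PeerStats:
    peer_id: int
    loss: float          # hypothetical: loss of the peer's model on this device's local data
    similarity: float    # hypothetical: task similarity, e.g. cosine similarity in [-1, 1]
    times_selected: int  # how often this device has already chosen the peer


def cosine_similarity(a, b):
    """One possible task-similarity measure (an assumption, not taken from the paper)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def communication_score(p, alpha=1.0, beta=1.0, gamma=0.1):
    # Assumed linear form with hypothetical weights: a lower loss and a higher
    # similarity raise the score, while frequent past selection lowers it so
    # that a device does not keep contacting the same few peers.
    return -alpha * p.loss + beta * p.similarity - gamma * p.times_selected


def select_peers(peers, k, **weights):
    """Return the ids of the k peers with the highest score (ties keep input order)."""
    ranked = sorted(peers, key=lambda p: communication_score(p, **weights), reverse=True)
    return [p.peer_id for p in ranked[:k]]


if __name__ == "__main__":
    peers = [
        PeerStats(0, loss=0.9, similarity=0.8, times_selected=0),
        PeerStats(1, loss=0.4, similarity=0.9, times_selected=5),
        PeerStats(2, loss=0.5, similarity=0.7, times_selected=0),
        PeerStats(3, loss=1.2, similarity=0.2, times_selected=1),
    ]
    print(select_peers(peers, k=2))
```

With these made-up numbers, peer 1 has the lowest loss and highest similarity, but its five earlier selections pull its score below peer 2's, which illustrates how a frequency term can spread collaboration across more peers.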

Source: http://arxiv.org/abs/2502.07750v1