Authors: Zhengxuan Wu, Aryaman Arora, Atticus Geiger, Zheng Wang, Jing Huang, Dan Jurafsky, Christopher D. Manning, Christopher Potts

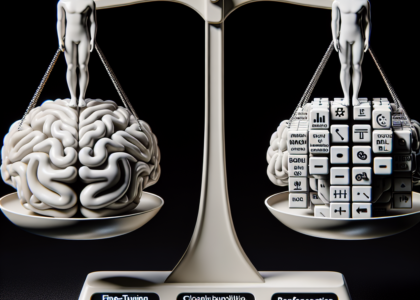

Abstract: Fine-grained steering of language model outputs is essential for safety and
reliability. Prompting and finetuning are widely used to achieve these goals,
but interpretability researchers have proposed a variety of
representation-based techniques as well, including sparse autoencoders (SAEs),
linear artificial tomography, supervised steering vectors, linear probes, and
representation finetuning. At present, there is no benchmark for making direct
comparisons between these proposals. Therefore, we introduce AxBench, a
large-scale benchmark for steering and concept detection, and report
experiments on Gemma-2-2B and 9B. For steering, we find that prompting
outperforms all existing methods, followed by finetuning. For concept
detection, representation-based methods such as difference-in-means perform
the best. On both evaluations, SAEs are not competitive. We introduce a novel
weakly-supervised representational method (Rank-1 Representation Finetuning;
ReFT-r1), which is competitive on both tasks while providing the
interpretability advantages that prompting lacks. Along with AxBench, we train
and publicly release SAE-scale feature dictionaries for ReFT-r1 and DiffMean.

Source: http://arxiv.org/abs/2501.17148v1