Authors: Tzoulio Chamiti, Nikolaos Passalis, Anastasios Tefas

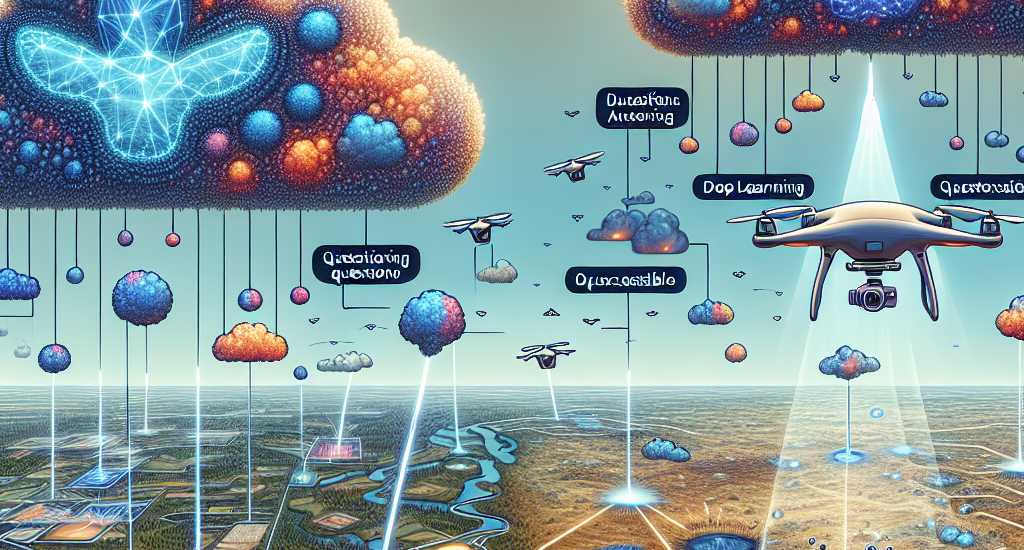

Abstract: Autonomous aerial monitoring is an important task aimed at gathering

information from areas that may not be easily accessible by humans. At the same

time, this task often requires recognizing anomalies from a significant

distance or not previously encountered in the past. In this paper, we propose a

novel framework that leverages the advanced capabilities provided by Large

Language Models (LLMs) to actively collect information and perform anomaly

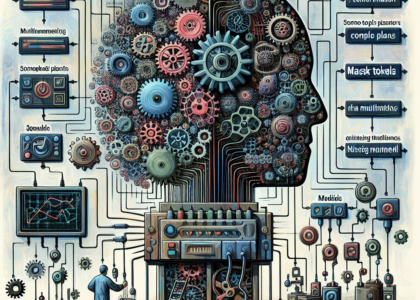

detection in novel scenes. To this end, we propose an LLM based model dialogue

approach, in which two deep learning models engage in a dialogue to actively

control a drone to increase perception and anomaly detection accuracy. We

conduct our experiments in a high fidelity simulation environment where an LLM

is provided with a predetermined set of natural language movement commands

mapped into executable code functions. Additionally, we deploy a multimodal

Visual Question Answering (VQA) model charged with the task of visual question

answering and captioning. By engaging the two models in conversation, the LLM

asks exploratory questions while simultaneously flying a drone into different

parts of the scene, providing a novel way to implement active perception. By

leveraging LLMs reasoning ability, we output an improved detailed description

of the scene going beyond existing static perception approaches. In addition to

information gathering, our approach is utilized for anomaly detection and our

results demonstrate the proposed methods effectiveness in informing and

alerting about potential hazards.
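
The dialogue loop described in the abstract can be illustrated with a minimal Python sketch. This is not the authors' implementation: the abstract does not list the command vocabulary, the simulator interface, or the models used, so every name here (`Drone`, `build_commands`, `scripted_llm`, `stub_vqa`, `run_dialogue`, and the six command strings) is a hypothetical stand-in. The LLM and the VQA model are replaced by deterministic stubs so that only the control flow is shown: a natural language command is looked up in a fixed table of executable functions, the drone moves, and the question is then answered from the new viewpoint.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Turn = Tuple[str, str, str]  # (command, question, answer)


@dataclass
class Drone:
    """Toy stand-in for a simulated drone; it only tracks its position."""
    x: float = 0.0
    y: float = 0.0
    altitude: float = 20.0

    def move(self, dx: float = 0.0, dy: float = 0.0, dz: float = 0.0) -> None:
        self.x += dx
        self.y += dy
        self.altitude = max(0.0, self.altitude + dz)  # never go below ground


def build_commands(drone: Drone, step: float = 5.0) -> Dict[str, Callable[[], None]]:
    """Hypothetical fixed vocabulary: natural language command -> executable function."""
    return {
        "move forward": lambda: drone.move(dx=step),
        "move backward": lambda: drone.move(dx=-step),
        "move left": lambda: drone.move(dy=-step),
        "move right": lambda: drone.move(dy=step),
        "ascend": lambda: drone.move(dz=step),
        "descend": lambda: drone.move(dz=-step),
    }


def scripted_llm(transcript: List[Turn]) -> Tuple[str, str]:
    """Stand-in for the LLM: returns the next (command, question) from a fixed plan."""
    plan = [
        ("move forward", "What objects are visible ahead?"),
        ("descend", "Is there smoke or fire near the objects?"),
        ("move right", "Is anything blocking the road?"),
    ]
    return plan[len(transcript) % len(plan)]


def stub_vqa(drone: Drone, question: str) -> str:
    """Stand-in for the VQA model: a real one would answer from the camera image."""
    return f"[view from x={drone.x}, y={drone.y}, alt={drone.altitude}] answer to: {question}"


def run_dialogue(
    drone: Drone,
    llm: Callable[[List[Turn]], Tuple[str, str]],
    vqa: Callable[[Drone, str], str],
    max_turns: int = 3,
) -> List[Turn]:
    """Alternate between moving the drone and questioning the VQA model."""
    commands = build_commands(drone)
    transcript: List[Turn] = []
    for _ in range(max_turns):
        command, question = llm(transcript)
        action = commands.get(command)
        if action is None:
            # Commands outside the predetermined set are recorded but not executed.
            transcript.append((command, question, "unknown command, drone did not move"))
            continue
        action()  # move first, then ask about the new viewpoint
        transcript.append((command, question, vqa(drone, question)))
    return transcript


if __name__ == "__main__":
    for turn in run_dialogue(Drone(), scripted_llm, stub_vqa):
        print(turn)
```

Restricting the LLM to a lookup table of known commands, as assumed here, keeps free-form model output from being executed directly; anything outside the table is logged and skipped.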

Source: http://arxiv.org/abs/2501.16300v1