Authors: Runtao Liu, Haoyu Wu, Zheng Ziqiang, Chen Wei, Yingqing He, Renjie Pi, Qifeng Chen

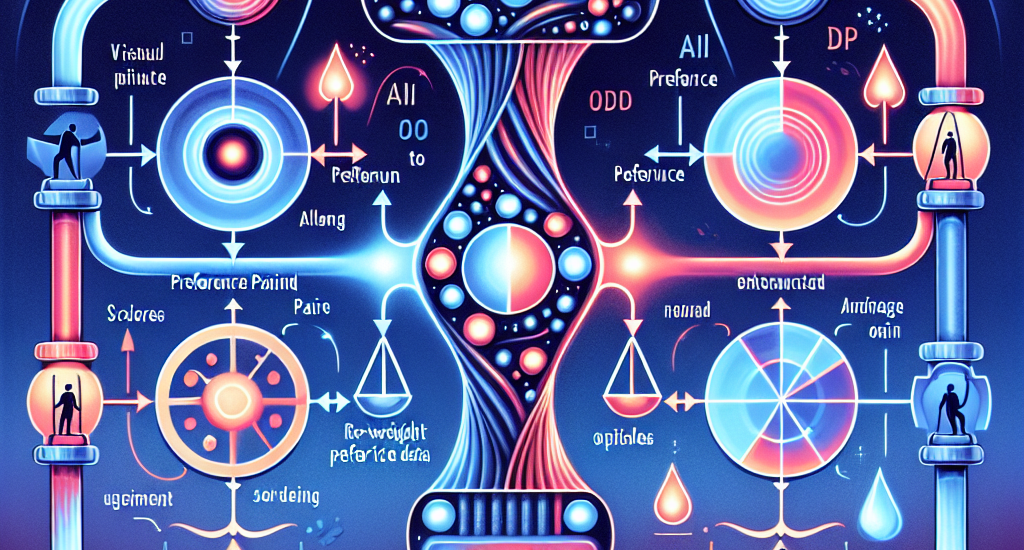

Abstract: Recent progress in generative diffusion models has greatly advanced text-to-video generation. While text-to-video models trained on large-scale, diverse datasets can produce varied outputs, these generations often deviate from user preferences, highlighting the need for preference alignment on pre-trained models. Although Direct Preference Optimization (DPO) has demonstrated significant improvements in language and image generation, we pioneer its adaptation to video diffusion models and propose a VideoDPO pipeline by making several key adjustments. Unlike previous image alignment methods that focus solely on either (i) visual quality or (ii) semantic alignment between text and videos, we comprehensively consider both dimensions and construct a preference score accordingly, which we term the OmniScore. We design a pipeline to automatically collect preference pair data based on the proposed OmniScore and discover that re-weighting these pairs based on the score significantly impacts overall preference alignment. Our experiments demonstrate substantial improvements in both visual quality and semantic alignment, ensuring that no preference aspect is neglected. Code and data will be shared at https://videodpo.github.io/.
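
As a rough illustration of the pair-collection idea described in the abstract, the sketch below scores several generated videos for one prompt, takes the highest- and lowest-scoring ones as a preference pair, and attaches a weight derived from the score gap. Everything here is an assumption made for illustration: the function names `omni_score` and `make_pair`, the equal-weight average of the two dimensions, and the use of the raw score gap as the pair weight are hypothetical and are not taken from the paper.

```python
def omni_score(visual_quality: float, text_alignment: float) -> float:
    """Hypothetical combined score: a plain average of the two dimensions.

    The abstract only says both dimensions are considered; the actual
    OmniScore formula is not given there, so this is a stand-in.
    """
    return 0.5 * (visual_quality + text_alignment)


def make_pair(samples):
    """Build one weighted preference pair for a single prompt.

    samples: list of (video_id, visual_quality, text_alignment) tuples,
             one per video generated for the same prompt.
    Returns a dict with the preferred video, the rejected video, and a
    weight equal to the score gap (an assumed weighting rule).
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples to form a pair")

    # Score every candidate video with the combined score.
    scored = [(omni_score(v, t), vid) for vid, v, t in samples]

    # Highest score becomes the preferred sample, lowest the rejected one.
    win_score, winner = max(scored)
    lose_score, loser = min(scored)

    return {
        "preferred": winner,
        "rejected": loser,
        "weight": win_score - lose_score,
    }


if __name__ == "__main__":
    # Made-up scores for three videos generated from one prompt.
    demo = [("a", 0.9, 0.8), ("b", 0.4, 0.5), ("c", 0.6, 0.7)]
    print(make_pair(demo))
```

Under this assumed rule, pairs whose two videos differ more in score receive a larger weight, which is one simple way to make the score influence how strongly each pair contributes during training.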

Source: http://arxiv.org/abs/2412.14167v1