Authors: Yuanhui Huang, Wenzhao Zheng, Yuan Gao, Xin Tao, Pengfei Wan, Di Zhang, Jie Zhou, Jiwen Lu

Abstract: Video generation models (VGMs) have received extensive attention recently and

serve as promising candidates for general-purpose large vision models. While

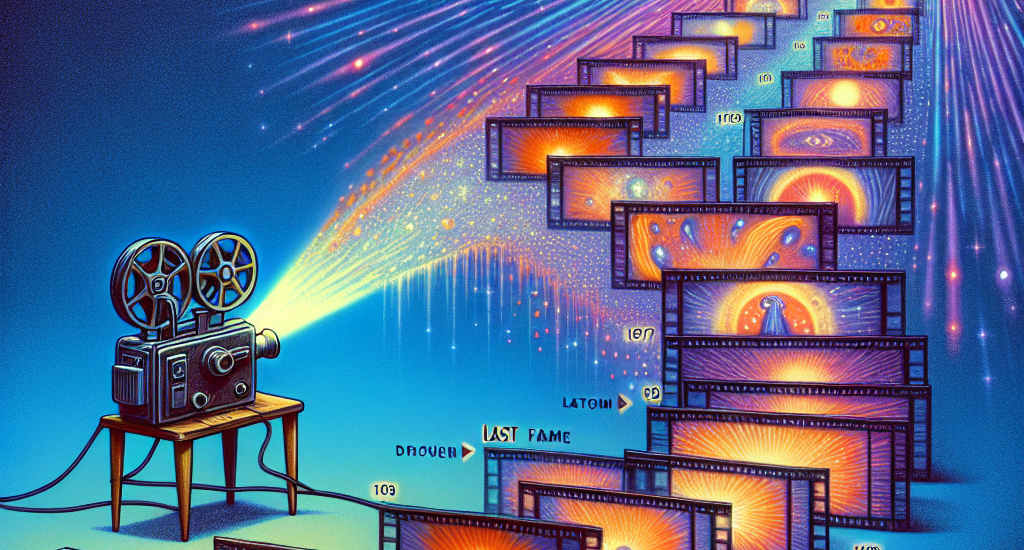

they can only generate short videos each time, existing methods achieve long

video generation by iteratively calling the VGMs, using the last-frame output

as the condition for the next-round generation. However, the last frame only

contains short-term fine-grained information about the scene, resulting in

inconsistency in the long horizon. To address this, we propose an Omni World

modeL (Owl-1) to produce long-term coherent and comprehensive conditions for

consistent long video generation. As videos are observations of the underlying

evolving world, we propose to model the long-term developments in a latent

space and use VGMs to film them into videos. Specifically, we represent the

world with a latent state variable which can be decoded into explicit video

observations. These observations serve as a basis for anticipating temporal

dynamics which in turn update the state variable. The interaction between

evolving dynamics and persistent state enhances the diversity and consistency

of the long videos. Extensive experiments show that Owl-1 achieves comparable

performance with SOTA methods on VBench-I2V and VBench-Long, validating its

ability to generate high-quality video observations. Code:

https://github.com/huang-yh/Owl.

Source: http://arxiv.org/abs/2412.09600v1
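
The loop described in the abstract (decode the latent state into a clip, anticipate dynamics from that clip, update the state, repeat) can be illustrated with a toy sketch. This is not the authors' implementation: every function name below is hypothetical, the "state" is a plain list of floats, and simple arithmetic stands in for the VGM and the dynamics predictor. It only shows the control flow, in which each round is conditioned on a persistent state rather than on the last frame alone.

```python
import random


def decode_clip(state, frames_per_clip=4):
    """Hypothetical stand-in for the VGM: render a short clip from the state."""
    return [[s + 0.01 * t for s in state] for t in range(frames_per_clip)]


def anticipate_dynamics(clip, rng):
    """Hypothetical stand-in: propose a change to the world from the observed clip."""
    last_frame = clip[-1]
    return [0.1 * rng.uniform(-1.0, 1.0) - 0.05 * x for x in last_frame]


def update_state(state, dynamics):
    """Apply the anticipated dynamics to the persistent latent state."""
    return [s + d for s, d in zip(state, dynamics)]


def generate_long_video(initial_state, num_rounds, seed=0):
    """Alternate between decoding clips and updating the latent state."""
    rng = random.Random(seed)
    state = list(initial_state)
    video = []
    for _ in range(num_rounds):
        clip = decode_clip(state)                  # state -> explicit observation
        video.extend(clip)
        dynamics = anticipate_dynamics(clip, rng)  # observation -> dynamics
        state = update_state(state, dynamics)      # dynamics -> new state
    return video, state


video, final_state = generate_long_video([0.0, 1.0], num_rounds=3)
print(len(video), len(final_state))
```

In this sketch the state persists across rounds, so information from early clips can still influence later ones. That is the property the abstract contrasts with last-frame conditioning.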