Authors: Vincent Tao Hu, Björn Ommer

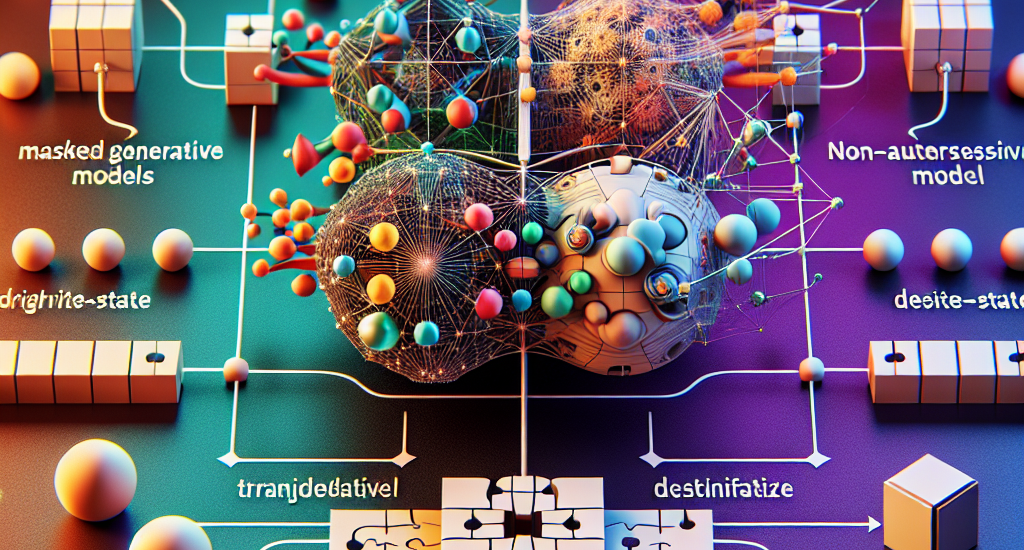

Abstract: In generative models, two paradigms have gained traction in various applications: next-set prediction-based Masked Generative Models and next-noise prediction-based Non-Autoregressive Models, e.g., Diffusion Models. In this work, we propose using discrete-state models to connect them and explore their scalability in the vision domain. First, we conduct a step-by-step, scalable analysis of a unified design space shared by the two types of models, covering timestep-independence, noise schedule, temperature, guidance strength, etc. Second, we re-cast typical discriminative tasks, e.g., image segmentation, as an unmasking process from [MASK] tokens on a discrete-state model. This enables us to perform various sampling processes, including flexible conditional sampling, while training only once to model the joint distribution. These explorations lead to our framework, named Discrete Interpolants, which achieves state-of-the-art or competitive performance compared to previous discrete-state-based methods on various benchmarks, such as ImageNet256, MS COCO, and the video dataset FaceForensics. In summary, by leveraging [MASK] in discrete-state models, we can bridge Masked Generative and Non-Autoregressive Diffusion models, as well as generative and discriminative tasks.
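As a rough illustration of the unmasking idea described in the abstract, the toy sketch below treats a sequence as image tokens followed by label tokens, masks only the label half, and fills it in over a few steps. It is not the authors' implementation: `MASK`, `toy_denoiser`, `unmask`, and the confidence-based reveal order are all hypothetical, and the random "denoiser" merely stands in for a trained network.

```python
import random

MASK = -1  # hypothetical id standing in for the [MASK] token


def toy_denoiser(tokens, vocab_size, rng):
    """Stand-in for a trained model: one (token, confidence) guess per position."""
    return [(rng.randrange(vocab_size), rng.random()) for _ in tokens]


def unmask(tokens, vocab_size, steps=4, seed=0):
    """Iteratively fill MASK entries; unmasked entries are kept as the condition."""
    rng = random.Random(seed)
    tokens = list(tokens)
    for step in range(steps):
        masked = [i for i, t in enumerate(tokens) if t == MASK]
        if not masked:
            break
        guesses = toy_denoiser(tokens, vocab_size, rng)
        # Reveal an even share of the remaining positions, most confident first;
        # on the final step the share equals everything still masked.
        n_reveal = max(1, len(masked) // (steps - step))
        masked.sort(key=lambda i: guesses[i][1], reverse=True)
        for i in masked[:n_reveal]:
            tokens[i] = guesses[i][0]
    return tokens


# Conditional sampling: image tokens are given, label tokens start as MASK.
image_tokens = [3, 7, 1, 4]
sequence = image_tokens + [MASK] * 4
out = unmask(sequence, vocab_size=8)
```

Masking the image half instead (and keeping the labels) would reuse the same loop in the other direction, which is the sense in which a single joint model can serve several conditional sampling modes.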

Source: http://arxiv.org/abs/2412.06787v1