Authors: Vinayak Gupta, Yunze Man, Yu-Xiong Wang

Abstract: Recent advances in diffusion models have revolutionized 2D and 3D content

creation, yet generating photorealistic dynamic 4D scenes remains a significant

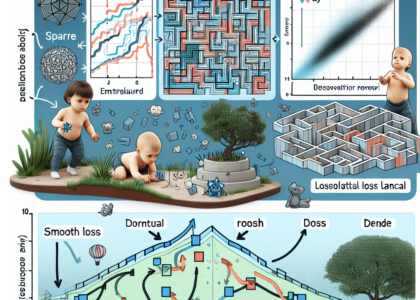

challenge. Existing dynamic 4D generation methods typically rely on distilling

knowledge from pre-trained 3D generative models, often fine-tuned on synthetic

object datasets. Consequently, the resulting scenes tend to be object-centric

and lack photorealism. While text-to-video models can generate more realistic

scenes with motion, they often struggle with spatial understanding and provide

limited control over camera viewpoints during rendering. To address these

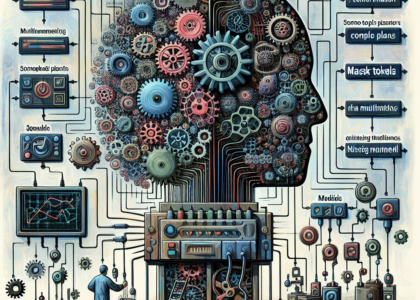

limitations, we present PaintScene4D, a novel text-to-4D scene generation

framework that departs from conventional multi-view generative models in favor

of a streamlined architecture that harnesses video generative models trained on

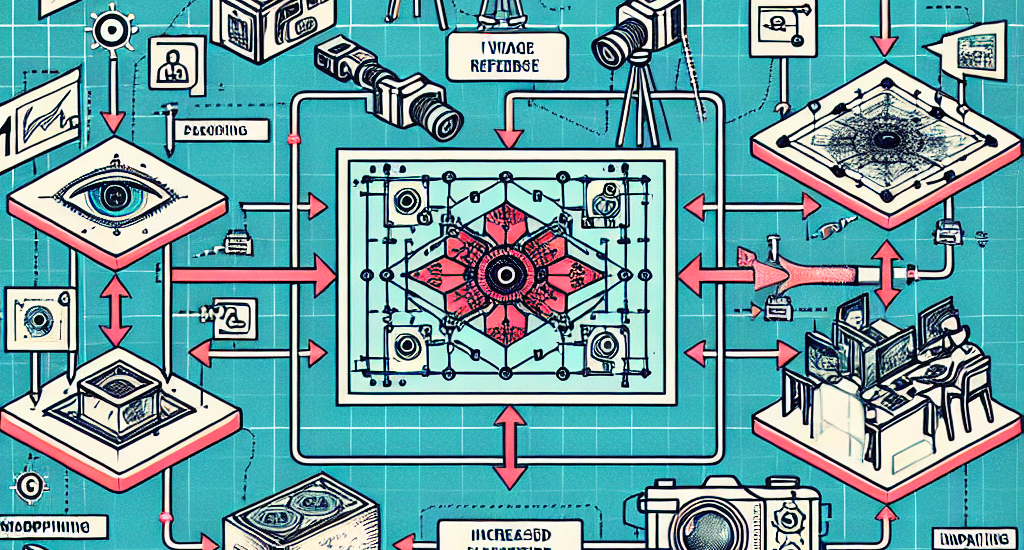

diverse real-world datasets. Our method first generates a reference video using

a video generation model, and then employs a strategic camera array selection

for rendering. We apply a progressive warping and inpainting technique to

ensure both spatial and temporal consistency across multiple viewpoints.

Finally, we optimize multi-view images using a dynamic renderer, enabling

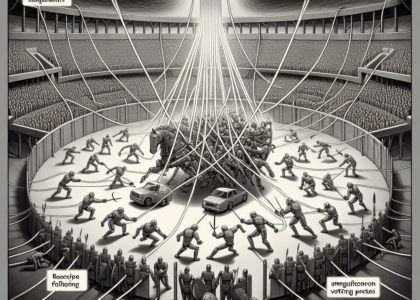

flexible camera control based on user preferences. Adopting a training-free

architecture, our PaintScene4D efficiently produces realistic 4D scenes that

can be viewed from arbitrary trajectories. The code will be made publicly

available. Our project page is at https://paintscene4d.github.io/

Source: http://arxiv.org/abs/2412.04471v1