Authors: Rui Xiao, Sanghwan Kim, Mariana-Iuliana Georgescu, Zeynep Akata, Stephan Alaniz

Abstract: CLIP has shown impressive results in aligning images and texts at scale.

However, its ability to capture detailed visual features remains limited

because CLIP matches images and texts at a global level. To address this issue,

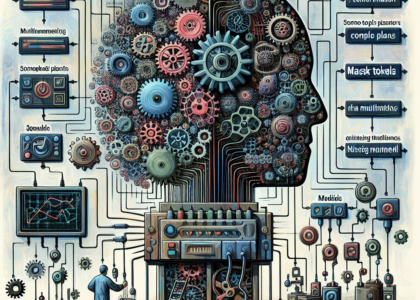

we propose FLAIR, Fine-grained Language-informed Image Representations, an

approach that utilizes long and detailed image descriptions to learn localized

image embeddings. By sampling diverse sub-captions that describe fine-grained

details about an image, we train our vision-language model to produce not only

global embeddings but also text-specific image representations. Our model

introduces text-conditioned attention pooling on top of local image tokens to

produce fine-grained image representations that excel at retrieving detailed

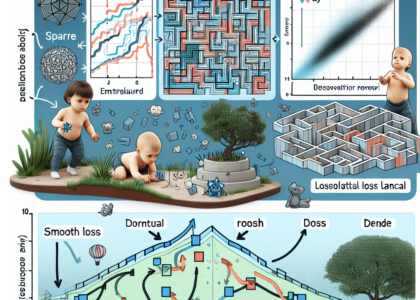

image content. We achieve state-of-the-art performance on both existing

multimodal retrieval benchmarks and our newly introduced fine-grained

retrieval task, which evaluates vision-language models’ ability to retrieve

partial image content. Furthermore, our experiments demonstrate that FLAIR,

trained on 30M image-text pairs, effectively captures fine-grained visual

information, including in zero-shot semantic segmentation, outperforming

models trained on billions of pairs. Code is available at

https://github.com/ExplainableML/flair.
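
The text-conditioned attention pooling mentioned in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: it assumes a single attention head, no learned projections, and plain Python lists in place of tensors. The names `text_conditioned_pool`, `local_tokens`, and `text_emb` are hypothetical. The idea shown is only that the text embedding acts as a query over the local image tokens, so different captions yield different pooled image representations.

```python
import math

def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def text_conditioned_pool(local_tokens, text_emb):
    """Pool local image tokens using a text embedding as the attention query.

    local_tokens: list of n token vectors, each of length d (assumed layout)
    text_emb:     one vector of length d
    Returns (pooled_vector, attention_weights).
    """
    d = len(text_emb)
    # Scaled dot-product score between the text query and each image token.
    scores = [
        sum(t * q for t, q in zip(tok, text_emb)) / math.sqrt(d)
        for tok in local_tokens
    ]
    weights = softmax(scores)
    # Weighted sum of tokens: the text-specific image representation.
    pooled = [
        sum(w * tok[j] for w, tok in zip(weights, local_tokens))
        for j in range(d)
    ]
    return pooled, weights

# Toy example: three 2-D "image tokens" and two different text queries.
local_tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
pooled_a, weights_a = text_conditioned_pool(local_tokens, [5.0, 0.0])
pooled_b, weights_b = text_conditioned_pool(local_tokens, [0.0, 5.0])
```

In this toy setup, the query aligned with the first axis puts most of its weight on tokens with a large first component, and the pooled vector shifts accordingly. A global embedding, by contrast, would be the same for both captions.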

Source: http://arxiv.org/abs/2412.03561v1