Authors: Lingteng Qiu, Shenhao Zhu, Qi Zuo, Xiaodong Gu, Yuan Dong, Junfei Zhang, Chao Xu, Zhe Li, Weihao Yuan, Liefeng Bo, Guanying Chen, Zilong Dong

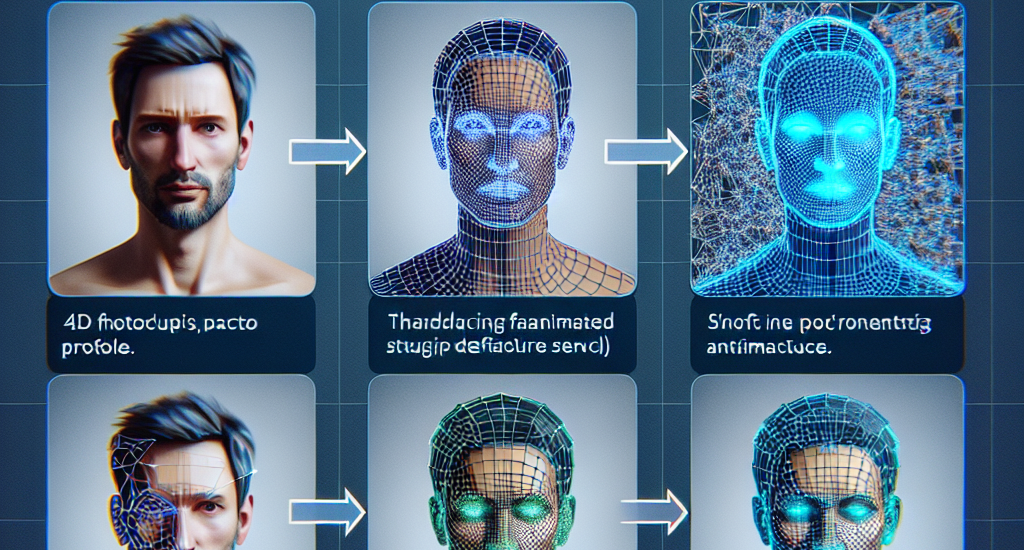

Abstract: Generating animatable human avatars from a single image is essential for

various digital human modeling applications. Existing 3D reconstruction methods

often struggle to capture fine details in animatable models, while generative

approaches for controllable animation, though avoiding explicit 3D modeling,

suffer from viewpoint inconsistencies in extreme poses and computational

inefficiencies. In this paper, we address these challenges by leveraging the

power of generative models to produce detailed multi-view canonical pose

images, which help resolve ambiguities in animatable human reconstruction. We

then propose a robust method for 3D reconstruction of inconsistent images,

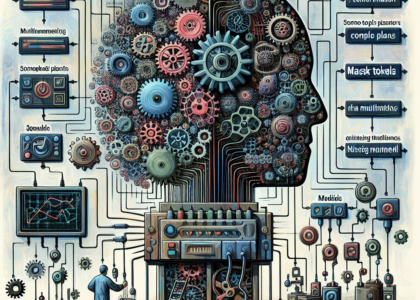

enabling real-time rendering during inference. Specifically, we adapt a

transformer-based video generation model to generate multi-view canonical pose

images and normal maps, pretraining on a large-scale video dataset to improve

generalization. To handle view inconsistencies, we recast the reconstruction

problem as a 4D task and introduce an efficient 3D modeling approach using 4D

Gaussian Splatting. Experiments demonstrate that our method achieves

photorealistic, real-time animation of 3D human avatars from in-the-wild

images, showcasing its effectiveness and generalization capability.

Source: http://arxiv.org/abs/2412.02684v1