Authors: Xuhao Hu, Dongrui Liu, Hao Li, Xuanjing Huang, Jing Shao

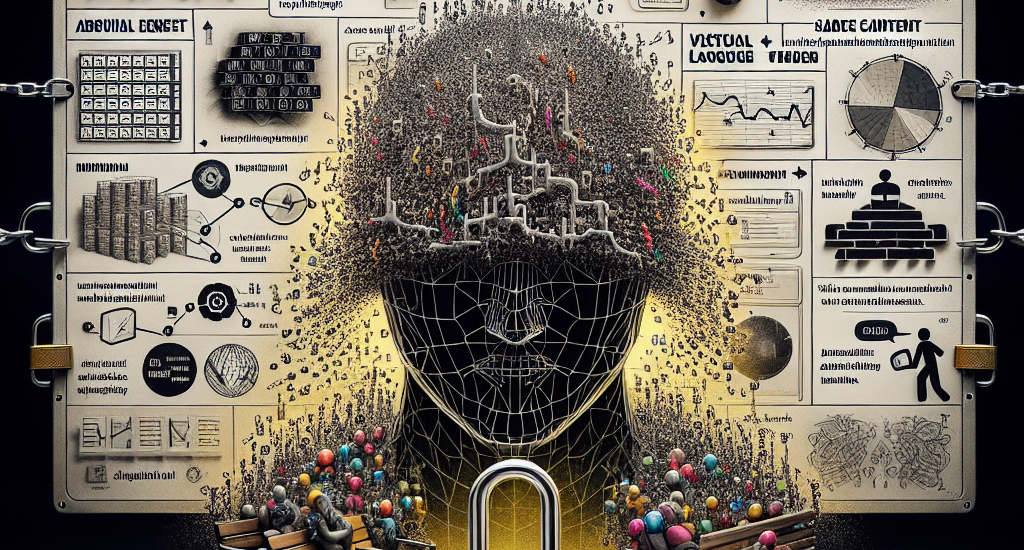

Abstract: Safety concerns about multimodal large language models (MLLMs) have gradually become an important problem in various applications. Surprisingly, previous works indicate a counter-intuitive phenomenon: using textual unlearning to align MLLMs achieves safety performance comparable to that of MLLMs trained with image-text pairs. To explain this counter-intuitive phenomenon, we discover a visual safety information leakage (VSIL) problem in existing multimodal safety benchmarks, i.e., the potentially risky and sensitive content in the image has already been revealed in the textual query. As a result, MLLMs can easily refuse these sensitive image-text queries based on the textual query alone. However, image-text pairs without VSIL are common in real-world scenarios and are overlooked by existing multimodal safety benchmarks. To this end, we construct the multimodal Visual Leakless Safety Benchmark (VLSBench), which prevents visual safety leakage from the image to the textual query, with 2.4k image-text pairs. Experimental results indicate that VLSBench poses a significant challenge to both open-source and closed-source MLLMs, including LLaVA, Qwen2-VL, Llama3.2-Vision, and GPT-4o. This study demonstrates that textual alignment is sufficient for multimodal safety scenarios with VSIL, while multimodal alignment is a more promising solution for multimodal safety scenarios without VSIL. Please see our code and data at: http://hxhcreate.github.io/VLSBench

Source: http://arxiv.org/abs/2411.19939v1