Authors: Zhiqiang Shen, Ammar Sherif, Zeyuan Yin, Shitong Shao

Abstract: Recent advances in dataset distillation have led to solutions in two main

directions. The conventional batch-to-batch matching mechanism is ideal for

small-scale datasets and includes bi-level optimization methods on models and

syntheses, such as FRePo, RCIG, and RaT-BPTT, as well as other methods like

distribution matching, gradient matching, and weight trajectory matching.

Conversely, batch-to-global matching typifies decoupled methods, which are

particularly advantageous for large-scale datasets. This approach has garnered

substantial interest within the community, as seen in SRe$^2$L, G-VBSM, WMDD,

and CDA. A primary challenge with the second approach is the lack of diversity

among syntheses within each class since samples are optimized independently and

the same global supervision signals are reused across different synthetic

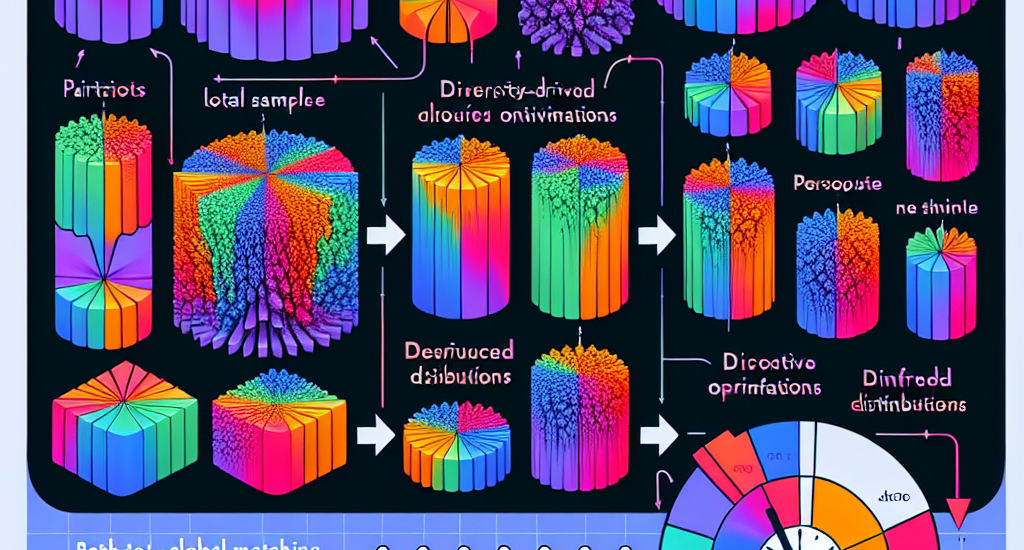

images. In this study, we propose a new Diversity-driven EarlyLate Training

(DELT) scheme to enhance the diversity of images in batch-to-global matching

with less computation. Our approach is conceptually simple yet effective: it

partitions predefined IPC samples into smaller subtasks and employs local

optimizations to distill each subset into distributions from distinct phases,

reducing the uniformity induced by the unified optimization process. These

distilled images from the subtasks demonstrate effective generalization when

applied to the entire task. We conduct extensive experiments on CIFAR,

Tiny-ImageNet, ImageNet-1K, and its sub-datasets. Our approach outperforms the

previous state-of-the-art by 2$\sim$5% on average across different datasets and

IPCs (images per class), increasing diversity per class by more than 5% while

reducing synthesis time by up to 39.3%, thereby improving training efficiency.

Code is available at: https://github.com/VILA-Lab/DELT.
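
To make the partitioning idea concrete, the following is a minimal sketch of how the IPC samples of one class could be split into subsets, each with its own optimization budget. It is not taken from the DELT repository. The function names (`partition_ipc`, `early_late_schedule`, `relative_cost`) are hypothetical, and the linearly shrinking iteration budget is an assumption: the abstract states only that subsets are distilled in distinct phases.

```python
from typing import List, Tuple


def partition_ipc(ipc: int, num_subsets: int) -> List[List[int]]:
    """Split sample indices 0..ipc-1 into contiguous, near-equal subsets."""
    if not 1 <= num_subsets <= ipc:
        raise ValueError("num_subsets must be between 1 and ipc")
    base, extra = divmod(ipc, num_subsets)
    groups, start = [], 0
    for k in range(num_subsets):
        size = base + (1 if k < extra else 0)  # spread the remainder over the first subsets
        groups.append(list(range(start, start + size)))
        start += size
    return groups


def early_late_schedule(
    ipc: int, num_subsets: int, total_iters: int
) -> List[Tuple[List[int], int]]:
    """Pair each subset with an iteration budget.

    Assumption (not confirmed by the abstract): budgets shrink linearly,
    so subset 0 is optimized for total_iters steps and the last subset
    for total_iters / num_subsets steps.
    """
    schedule = []
    for k, group in enumerate(partition_ipc(ipc, num_subsets)):
        iters = total_iters * (num_subsets - k) // num_subsets
        schedule.append((group, iters))
    return schedule


def relative_cost(schedule: List[Tuple[List[int], int]], total_iters: int) -> float:
    """Optimization steps spent, as a fraction of giving every sample total_iters."""
    spent = sum(len(group) * iters for group, iters in schedule)
    full = sum(len(group) for group, _ in schedule) * total_iters
    return spent / full


if __name__ == "__main__":
    plan = early_late_schedule(ipc=10, num_subsets=5, total_iters=1000)
    for group, iters in plan:
        print(f"samples {group}: {iters} iterations")
    print(f"relative cost: {relative_cost(plan, 1000):.2f}")
```

Under this assumed schedule, samples in different subsets stop at different stages of optimization, which is one way to obtain the within-class variation the abstract describes, and the total number of update steps is lower than when every sample receives the full budget.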

Source: http://arxiv.org/abs/2411.19946v1