Authors: Lei Zhu, Xinjiang Wang, Wayne Zhang, Rynson W. H. Lau

Abstract: Convolutions (Convs) and multi-head self-attentions (MHSAs) are typically

considered alternatives to each other for building vision backbones. Although

some works try to integrate both, they apply the two operators simultaneously

at the finest pixel granularity. With Convs responsible for per-pixel feature

extraction already, the question is whether we still need to include the heavy

MHSAs at such a fine-grained level. In fact, this is the root cause of the

scalability issue w.r.t. the input resolution for vision transformers. To

address this important problem, we propose in this work to use MSHAs and Convs

in parallel \textbf{at different granularity levels} instead. Specifically, in

each layer, we use two different ways to represent an image: a fine-grained

regular grid and a coarse-grained set of semantic slots. We apply different

operations to these two representations: Convs to the grid for local features,

and MHSAs to the slots for global features. A pair of fully differentiable soft

clustering and dispatching modules is introduced to bridge the grid and set

representations, thus enabling local-global fusion. Through extensive

experiments on various vision tasks, we empirically verify the potential of the

proposed integration scheme, named \textit{GLMix}: by offloading the burden of

fine-grained features to light-weight Convs, it is sufficient to use MHSAs in a

few (e.g., 64) semantic slots to match the performance of recent

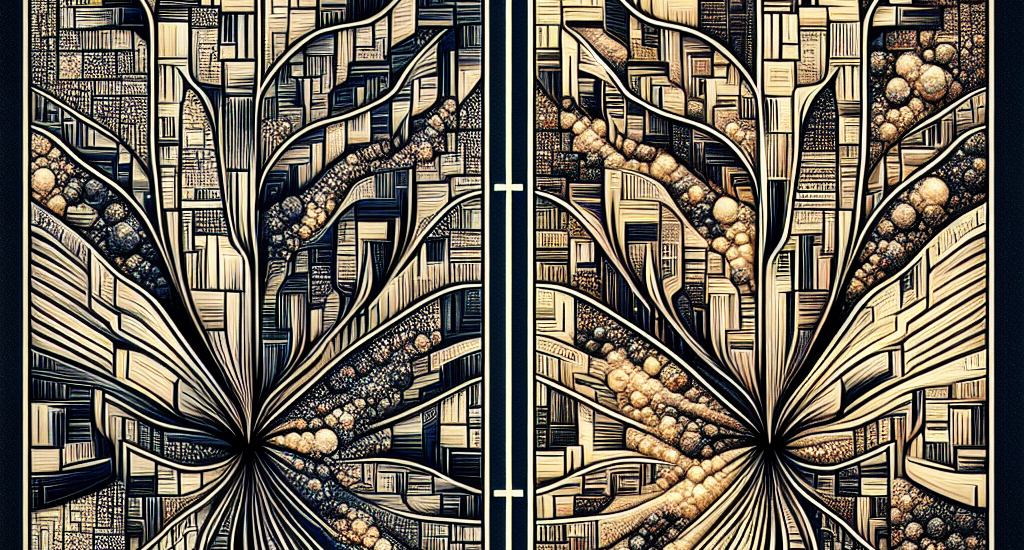

state-of-the-art backbones, while being more efficient. Our visualization

results also demonstrate that the soft clustering module produces a meaningful

semantic grouping effect with only IN1k classification supervision, which may

induce better interpretability and inspire new weakly-supervised semantic

segmentation approaches. Code will be available at

\url{https://github.com/rayleizhu/GLMix}.
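
The grid-to-slot-to-grid data flow described in the abstract can be illustrated with a minimal, standard-library-only sketch. It is not the authors' implementation. The abstract does not specify the normalization axes, the attention details, or the fusion rule, so the following are all assumptions made for illustration: the softmax axes, the single-head attention without learned projections, the 3x3 mean filter standing in for the Conv branch, the additive fusion, and every function name (`soft_cluster`, `dispatch`, `glmix_like_block`, and so on).

```python
import math
import random


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def row_softmax(matrix):
    return [softmax(row) for row in matrix]


def transpose(a):
    return [list(col) for col in zip(*a)]


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def soft_cluster(grid, centers):
    """Pool N grid features (N, C) into M slots (M, C) with soft weights."""
    logits = matmul(centers, transpose(grid))  # (M, N) slot-to-pixel similarity
    weights = row_softmax(logits)              # assumption: normalize over pixels
    return matmul(weights, grid), logits


def self_attention(slots):
    """Single-head self-attention on slots, no learned projections (assumption)."""
    scale = 1.0 / math.sqrt(len(slots[0]))
    scores = [[scale * s for s in row] for row in matmul(slots, transpose(slots))]
    return matmul(row_softmax(scores), slots)  # (M, C)


def dispatch(slots, logits):
    """Send slot features back to the grid; each pixel mixes the M slots."""
    weights = row_softmax(transpose(logits))   # (N, M), assumption: normalize over slots
    return matmul(weights, slots)              # (N, C)


def local_mean_filter(grid, height, width):
    """Stand-in for the Conv branch: 3x3 mean filter over the flattened grid."""
    out = []
    for i in range(height):
        for j in range(width):
            neigh = [grid[r * width + c]
                     for r in range(max(i - 1, 0), min(i + 2, height))
                     for c in range(max(j - 1, 0), min(j + 2, width))]
            out.append([sum(ch) / len(neigh) for ch in zip(*neigh)])
    return out


def glmix_like_block(grid, centers, height, width):
    """Local branch on the grid plus global branch on slots, fused by addition."""
    local = local_mean_filter(grid, height, width)
    slots, logits = soft_cluster(grid, centers)
    global_feats = dispatch(self_attention(slots), logits)
    return [[l + g for l, g in zip(lr, gr)] for lr, gr in zip(local, global_feats)]


random.seed(0)
H, W, C, M = 8, 8, 4, 3  # toy sizes; the abstract mentions e.g. 64 slots
grid = [[random.gauss(0, 1) for _ in range(C)] for _ in range(H * W)]
centers = [[random.gauss(0, 1) for _ in range(C)] for _ in range(M)]
out = glmix_like_block(grid, centers, H, W)
print(len(out), len(out[0]))  # 64 4
```

In this sketch the attention score matrix has shape M x M rather than N x N, and the clustering and dispatching steps each touch N x M pixel-slot pairs, which is the source of the efficiency argument when M is much smaller than the number of pixels N.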

Source: http://arxiv.org/abs/2411.14429v1