Authors: Yanke Song, Jonathan Lorraine, Weili Nie, Karsten Kreis, James Lucas

Abstract: Diffusion models achieve high-quality sample generation at the cost of a

lengthy multistep inference procedure. To overcome this, diffusion distillation

techniques produce student generators capable of matching or surpassing the

teacher in a single step. However, the student model’s inference speed is

limited by the size of the teacher architecture, preventing real-time

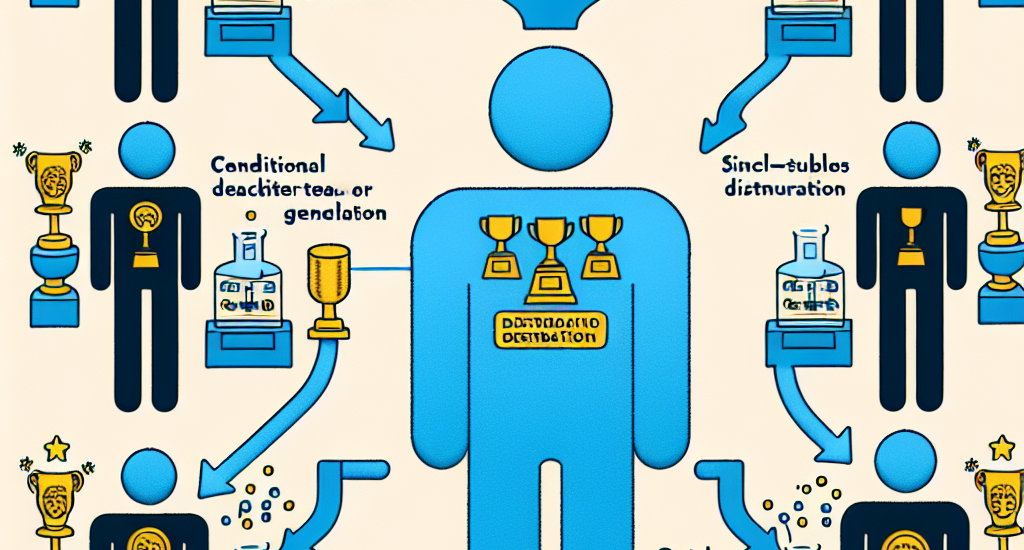

generation for computationally heavy applications. In this work, we introduce

Multi-Student Distillation (MSD), a framework to distill a conditional teacher

diffusion model into multiple single-step generators. Each student generator is

responsible for a subset of the conditioning data, thereby obtaining higher

generation quality for the same capacity. MSD trains multiple distilled

students, allowing smaller sizes and, therefore, faster inference. Also, MSD

offers a lightweight quality boost over single-student distillation with the

same architecture. We demonstrate MSD is effective by training multiple

same-sized or smaller students on single-step distillation using distribution

matching and adversarial distillation techniques. With smaller students, MSD

gets competitive results with faster inference for single-step generation.

Using 4 same-sized students, MSD sets a new state-of-the-art for one-step image

generation: FID 1.20 on ImageNet-64×64 and 8.20 on zero-shot COCO2014.

Source: http://arxiv.org/abs/2410.23274v1