Authors: Ayush Jain, Norio Kosaka, Xinhu Li, Kyung-Min Kim, Erdem Bıyık, Joseph J. Lim

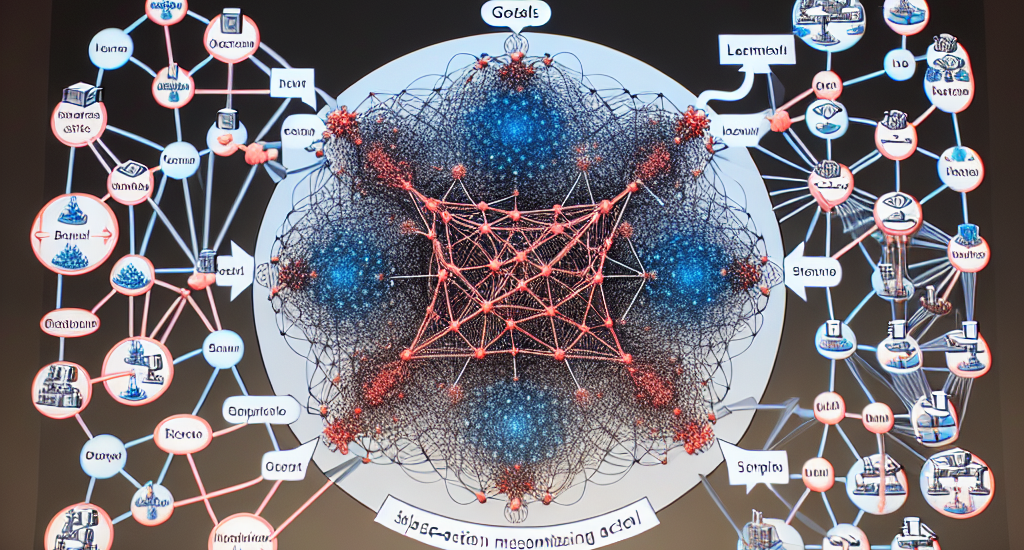

Abstract: In reinforcement learning, off-policy actor-critic approaches like DDPG and TD3 are based on the deterministic policy gradient. In these methods, the Q-function is trained from off-policy environment data, and the actor (policy) is trained to maximize the Q-function via gradient ascent. We observe that in complex tasks like dexterous manipulation and restricted locomotion, the Q-value is a complex function of the action, with several local optima or discontinuities. Such a landscape is difficult for gradient ascent to traverse and makes the actor prone to getting stuck at local optima. To address this, we introduce a new actor architecture that combines two simple insights: (i) use multiple actors and select the Q-value-maximizing action among them, and (ii) learn surrogates to the Q-function that are simpler to optimize with gradient-based methods. We evaluate on tasks such as restricted locomotion, dexterous manipulation, and recommender systems with large discrete action spaces, and show that our actor finds optimal actions more frequently and outperforms alternative actor architectures.
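The first insight can be illustrated with a toy example. The sketch below is not the authors' architecture: the 1-D Q-function `q_value`, its hand-written gradient `q_grad`, and the helpers `gradient_ascent` and `select_best` are all hypothetical, and each "actor" is reduced to a fixed starting action refined by plain gradient ascent. It only shows why one gradient-following proposal can settle at a local optimum while the best of several proposals, ranked by Q-value, does not. The second insight (learned surrogates) is not covered here.

```python
import numpy as np


def q_value(a):
    """Toy Q-function of a 1-D action (hypothetical).

    It has a local maximum near a = -0.96 and the global maximum near a = 1.04.
    """
    return -(a**2 - 1.0) ** 2 + 0.3 * a


def q_grad(a):
    """Analytic derivative of q_value with respect to the action."""
    return -4.0 * a * (a**2 - 1.0) + 0.3


def gradient_ascent(a0, lr=0.05, steps=200):
    """Refine one or more starting actions by gradient ascent on q_value."""
    a = np.asarray(a0, dtype=float)
    for _ in range(steps):
        # Keep actions inside an assumed valid range of [-2, 2].
        a = np.clip(a + lr * q_grad(a), -2.0, 2.0)
    return a


def select_best(candidates):
    """Return the candidate action with the highest Q-value."""
    candidates = np.asarray(candidates, dtype=float)
    return float(candidates[np.argmax(q_value(candidates))])


# One proposal started on the wrong side of the landscape stays at the local optimum.
single = float(gradient_ascent(-1.5))

# Several proposals from different starting points, then pick the Q-maximizing one.
proposals = gradient_ascent([-1.5, -0.5, 0.5, 1.5])
best = select_best(proposals)

print("single proposal:", single, "Q =", q_value(single))
print("best of several:", best, "Q =", q_value(best))
```

In this toy setting the extra proposals cost only additional Q-function evaluations, which is the trade-off the multi-actor idea relies on.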

Source: http://arxiv.org/abs/2410.11833v1