Authors: Fei Tang, Yongliang Shen, Hang Zhang, Zeqi Tan, Wenqi Zhang, Guiyang Hou, Kaitao Song, Weiming Lu, Yueting Zhuang

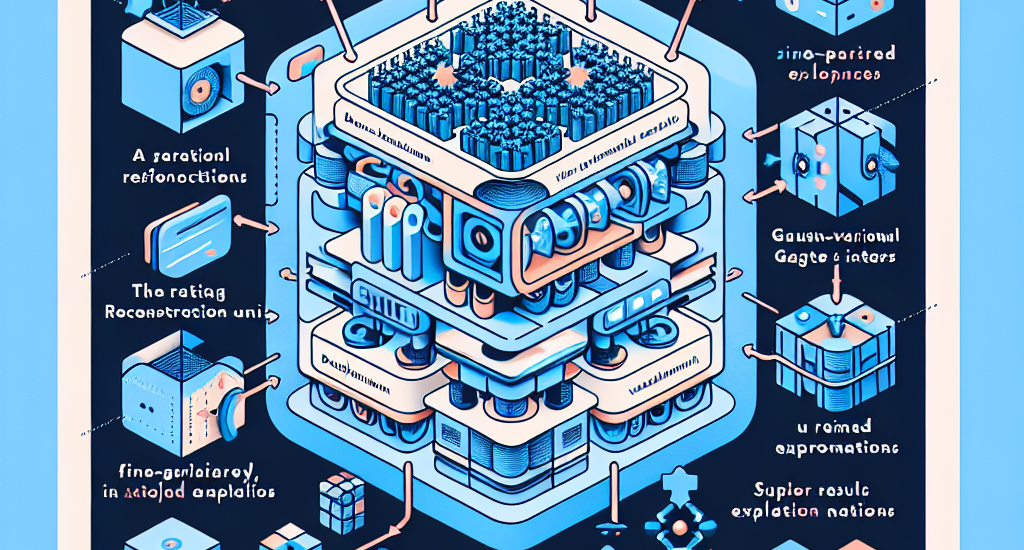

Abstract: Large language model-based explainable recommendation (LLM-based ER) systems show promise in generating human-like explanations for recommendations. However, they face challenges in modeling user-item collaborative preferences, personalizing explanations, and handling sparse user-item interactions. To address these issues, we propose GaVaMoE, a novel Gaussian-Variational Gated Mixture of Experts framework for explainable recommendation. GaVaMoE introduces two key components: (1) a rating reconstruction module that employs a Variational Autoencoder (VAE) with a Gaussian Mixture Model (GMM) to capture complex user-item collaborative preferences, serving as a pre-trained multi-gating mechanism; and (2) a set of fine-grained expert models coupled with the multi-gating mechanism for generating highly personalized explanations. The VAE component models latent factors in user-item interactions, while the GMM clusters users with similar behaviors. Each cluster corresponds to a gate in the multi-gating mechanism, routing user-item pairs to appropriate expert models. This architecture enables GaVaMoE to generate tailored explanations for specific user types and preferences, mitigating data sparsity by leveraging user similarities. Extensive experiments on three real-world datasets demonstrate that GaVaMoE significantly outperforms existing methods in explanation quality, personalization, and consistency. Notably, GaVaMoE exhibits robust performance in scenarios with sparse user-item interactions, maintaining high-quality explanations even for users with limited historical data.
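The routing idea in the abstract (a GMM over VAE latent factors, with each cluster acting as a gate to an expert) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the diagonal-covariance assumption, the hard argmax routing, and the toy parameters are all hypothetical, and the latent vector `z` simply stands in for the output of a trained VAE encoder.

```python
import math

def log_gaussian_diag(z, mean, var):
    """Log-density of z under a Gaussian with diagonal covariance (assumed form)."""
    return -0.5 * sum(
        math.log(2 * math.pi * v) + (x - m) ** 2 / v
        for x, m, v in zip(z, mean, var)
    )

def responsibilities(z, weights, means, variances):
    """Posterior probability of each GMM cluster given the latent vector z."""
    log_joint = [
        math.log(w) + log_gaussian_diag(z, m, v)
        for w, m, v in zip(weights, means, variances)
    ]
    peak = max(log_joint)  # subtract the max for numerical stability
    unnorm = [math.exp(lj - peak) for lj in log_joint]
    total = sum(unnorm)
    return [u / total for u in unnorm]

def route(z, weights, means, variances):
    """Pick the most probable cluster; its index serves as the gate (hard routing is an assumption)."""
    probs = responsibilities(z, weights, means, variances)
    expert_id = max(range(len(probs)), key=probs.__getitem__)
    return expert_id, probs

# Hypothetical toy setup: two clusters in a 2-D latent space.
weights = [0.5, 0.5]
means = [[0.0, 0.0], [4.0, 4.0]]
variances = [[1.0, 1.0], [1.0, 1.0]]

z = [3.6, 4.2]  # stand-in for a VAE-encoded user-item pair
expert_id, probs = route(z, weights, means, variances)
```

In this toy setting, `expert_id` selects which expert model would receive the user-item pair, and `probs` could instead be used as soft gate weights if several experts are to be blended.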

Source: http://arxiv.org/abs/2410.11841v1