Authors: Shaowei Liu, Zhongzheng Ren, Saurabh Gupta, Shenlong Wang

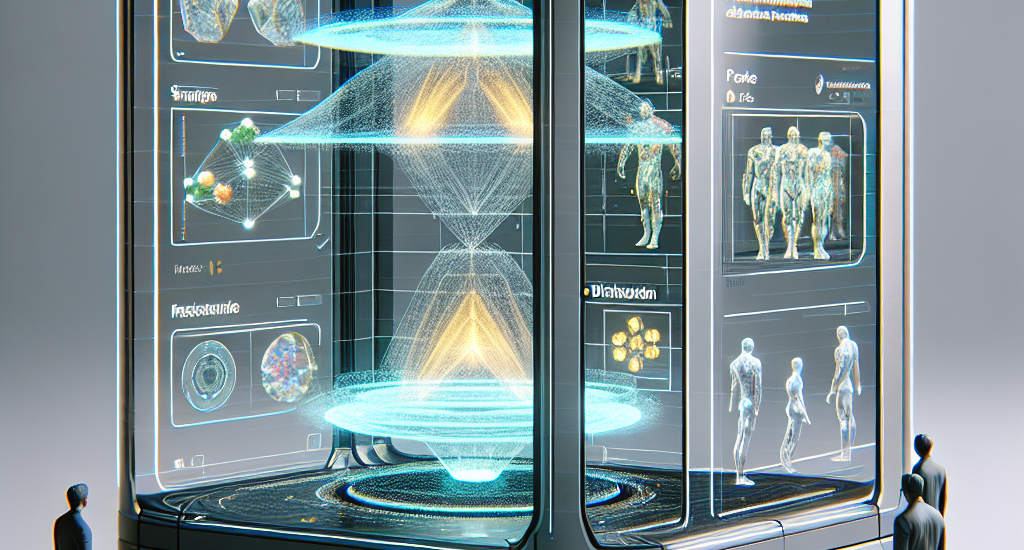

Abstract: We present PhysGen, a novel image-to-video generation method that takes a single image and an input condition (e.g., force and torque applied to an object in the image) and produces a realistic, physically plausible, and temporally consistent video. Our key insight is to integrate model-based physical simulation with a data-driven video generation process, enabling plausible image-space dynamics. At the heart of our system are three core components: (i) an image understanding module that effectively captures the geometry, materials, and physical parameters of the image; (ii) an image-space dynamics simulation model that uses rigid-body physics and the inferred parameters to simulate realistic behaviors; and (iii) an image-based rendering and refinement module that leverages generative video diffusion to produce realistic video footage featuring the simulated motion. The resulting videos are realistic in both physics and appearance and are also precisely controllable, showing superior results over existing data-driven image-to-video generation methods in both a quantitative comparison and a comprehensive user study. PhysGen's resulting videos can be used for various downstream applications, such as turning an image into a realistic animation or allowing users to interact with the image and create various dynamics. Project page: https://stevenlsw.github.io/physgen/
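The abstract describes an image-space dynamics module driven by a force and torque applied to an object. As a rough illustration only, the sketch below integrates a single 2D rigid body with semi-implicit (symplectic) Euler and converts each resulting pose into a 2x3 affine transform of the kind that could be used to move an object's pixels. The class and function names, body parameters, time step, and impulse duration are all hypothetical, and the sketch omits gravity, friction, and collisions, so it should not be read as PhysGen's actual simulator.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class RigidBody2D:
    # Hypothetical per-object parameters; in a full system these would be
    # estimated from the image rather than set by hand.
    mass: float          # mass of the object
    inertia: float       # moment of inertia about the centroid
    x: float = 0.0       # centroid position in the image plane
    y: float = 0.0
    theta: float = 0.0   # orientation in radians
    vx: float = 0.0      # linear velocity
    vy: float = 0.0
    omega: float = 0.0   # angular velocity


def step(body: RigidBody2D, force: tuple[float, float], torque: float, dt: float) -> None:
    """Advance one semi-implicit Euler step under an applied force and torque."""
    fx, fy = force
    # Update velocities first: linear a = F / m, angular alpha = tau / I.
    body.vx += fx / body.mass * dt
    body.vy += fy / body.mass * dt
    body.omega += torque / body.inertia * dt
    # Then update the pose with the *new* velocities (this is what makes it semi-implicit).
    body.x += body.vx * dt
    body.y += body.vy * dt
    body.theta += body.omega * dt


def simulate(body: RigidBody2D, force: tuple[float, float], torque: float,
             dt: float, num_steps: int, impulse_steps: int = 1) -> list[tuple[float, float, float]]:
    """Apply force/torque for the first `impulse_steps` steps, then let the body coast.

    Returns the (x, y, theta) pose after every step.
    """
    trajectory = []
    for i in range(num_steps):
        if i < impulse_steps:
            f, t = force, torque
        else:
            f, t = (0.0, 0.0), 0.0
        step(body, f, t, dt)
        trajectory.append((body.x, body.y, body.theta))
    return trajectory


def pose_to_affine(x: float, y: float, theta: float) -> list[list[float]]:
    """2x3 affine matrix mapping points in the object's local frame
    (origin at its centroid) to image-plane coordinates: rotate, then translate."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, x], [s, c, y]]
```

For example, `simulate(RigidBody2D(mass=1.0, inertia=0.5), (2.0, 0.0), 1.0, dt=0.1, num_steps=3)` pushes the body to the right and spins it during the first step, after which it drifts and rotates at constant velocity because no friction is modeled. Semi-implicit Euler is chosen here only because it is simple and more stable than explicit Euler for this kind of motion; a real system would typically use a full physics engine with contacts.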

Source: http://arxiv.org/abs/2409.18964v1