Authors: Aeree Cho, Grace C. Kim, Alexander Karpekov, Alec Helbling, Zijie J. Wang, Seongmin Lee, Benjamin Hoover, Duen Horng Chau

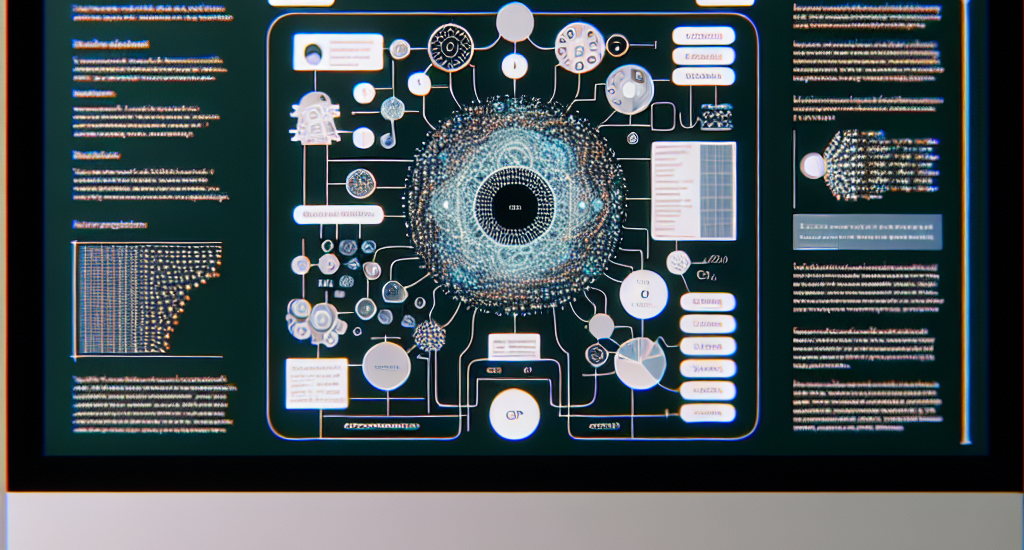

Abstract: Transformers have revolutionized machine learning, yet their inner workings remain opaque to many. We present Transformer Explainer, an interactive visualization tool designed for non-experts to learn about Transformers through the GPT-2 model. Our tool helps users understand complex Transformer concepts by integrating a model overview and enabling smooth transitions across abstraction levels of mathematical operations and model structures. It runs a live GPT-2 instance locally in the user’s browser, empowering users to experiment with their own input and observe in real time how the internal components and parameters of the Transformer work together to predict the next tokens. Our tool requires no installation or special hardware, broadening the public’s educational access to modern generative AI techniques. Our open-sourced tool is available at https://poloclub.github.io/transformer-explainer/. A video demo is available at https://youtu.be/ECR4oAwocjs.

Source: http://arxiv.org/abs/2408.04619v1